Introduction of the series

In the previous article (How to Design and Implement a Successful AI Strategy for your Business), we discussed why most Machine Learning projects would not go beyond the PoC phase and what to do with it from the strategy perspective.

It turned out that making AI work as an integral part of a real-world product or application is so much more than creating a Deep Neural Net or using Transfer Learning. And Proof of Concept is not the beginning nor the end of it.

Our goal in this series of articles is to build a real-world Production Machine Learning Application and deploy it to serve as an integral part of our app.

We will talk about:

- Production ML components,

- Production ML tools,

- Detaled examples of Production ML Pipelines (yes, not just one example),

- And some tools that help establish Machine Learning as a discipline in your company (and not just one or few pipelines).

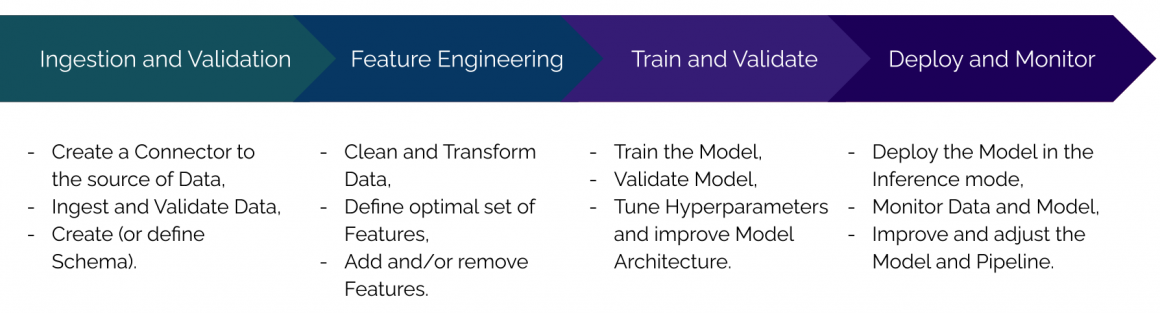

Production Machine Learning contains a few critical aspects: Data Ingestion and Validation, Feature Engineering, Model Training and Validation, and Deployment and Monitoring.

Ingestion and Validation

When we play with Model at a Proof of Concept phase, we often use good-quality datasets. This helps us focus on Model Architecture. And it makes sense because, at this stage, we’re trying to understand if there is a solution to our problem and how it might look like.

When working with good data, we can be 100% sure that it is primarily Features and Model Architecture that we can and should focus our attention on. In some cases, when we had no good data, we had to postpone work on the Model Architecture and Hyperparameters to get Data right first. And it almost always took a significant amount of time and effort.

Later, when we move on to the Production ML implementation, we face new challenges, part of which comes in the form of imperfect Data. Skewed, sparse, corrupted, missing data – there are many faces of this challenge, and all of them causing degradation of our Model performance.

At this aspect of Production ML Pipeline we will be using the following tools:

One of the most popular open-source libraries for Machine Learning developed and maintained by the Google Brain team.

We will use it extensively across all phases.

TFX is a platform that has all the necessary tools to build and run Production ML Pipelines. During Data Ingestion and Validation, we will be using the following library from TFX:

TF Data Validation

- TFDV ExampleGen component will help us ingest and splt data to train and test datasets.

- TFDV StatisticsGen will give us statictics for our datsets.

- TFDV SchemaGen generates Schema for our datasets. We can explicitly state Data Schema or generate it from Data. Schema describes features of our Dataset.

- TFDV ExampleValidator define anomalies and corrupted/missing datapoints.

Feature Engineering

Feature Engineering usually consists of two groups of activities:

- Cleaning, normalizing and transforming Data. In this phase we eliminate anomalies detected by TFDV ExampleValidator.

- Analysing Features, eliminating some Features, and adding new features.

Elimination of Features is an essential part of Production ML because it will significantly reduce the cost of infrastructure. It will also simplify gathering the necessary Data, which would reduce the budgets and time required to collect the required Data. Later could be significant expenses.

The instruments we will be using:

You probably are not surprised.

TF Transform

TFT provides a hand full of valuable components from preprocessing our data. It is very efficient, and with its help, we can split preprocessing across a pool of resources. TFT also generates Transformation Graph, which will ensure that Data is preprocessed the same way during both the Training and the Inference phases.

Open-source Python Machine Learning library.

Among many other very useful things, scikit-learn has components that will help us define the most relevant Features and git rid of those not driving value.

Apache Beam is a unified model used for ETL (Extract-Transform-Load) and Data processing. It can run its workflows on various runners, such as Flink and Spark (distributed processing systems that allow us to massively parallel work).

Train and Validate

At this stage of our pipeline DAG (Direct Acyclic Graph), we will finally Train our Model. Why do I use the DAG word? Because our TFX Pipeline is a Graph that has no loops (the definition of “Acyclic” part), direction (“Direct”) and consist of states (“Nodes” of the “Graph”) and transitions (“Edges”) from one state to another.

As a part of the training phase, we will launch a Hyperparameters Tunning algorithm that will help us find the combination of Hyperparameters that results in the best performance of our Model.

When the Model is trained, we will Validate its efficiency. And not just that, we also check if it can be deployed and perform inference because we want to spot problems with the deployment of our Model earlier before spinning up expensive infrastructure.

As always!

Keras Tunner is one of the leading hyperparameter tunning frameworks. It is open-source and free.

It will run a specific amount of cycles of training our Model and define the set of hyperparameters that results in the highest performance of our Model.

Deploy and monitor

This is the final part of our Production ML Pipeline where we place our trained and validated Model to the place where it will serve our users. Like all previous stages, it happens automatically.

Scalability and reliability are the two major concerns for us at this stage. We want to ensure that we can provide a reliable solution, and if something goes wrong with one instance of the Model, it will be immediately replaced by another.

Here again.

TFX InfraValidator

This component will validate if our Model can be deployed and served. It helps spot problems early before we spin up and configure runners and infrastructure.

TFX Pusher

Pusher receives confirmation of Model readiness from InfraValidator and pushes it to the required destination where it will be served. Pusher supports many possible destinations and is reasonably easy to configure and use.

Docker is a Platform as a Service product that allows us to deploy our Model packaged in Containers that have everything necessary inside for our Model to work and perform inference.

We have more information about Docker in this blog, so you might want to check out Tech Navigator.

Kubernetes is an open-source container orchestration platform. It helps us spin up and control fleets of containers, ensure that they are healthy, balance the workload among them, route requests, and many more.

Cases

To demonstrate Pipelines, we will work with three different Datasets:

- Unstructured data in a form of movie reviews;

- Structured data in a form of Census Income Dataset that is used to predict the level of income;

- Sequential data in a form of sequence of signals from sensors on IoT devices.

This will help you navigate through 80% of the cases you might face in your journey to Production Machine Learning.

So good luck to us, and off we go to the next station – Data Ingestion and Validation.

P.S.: The table below will help you navigate through the series of articles: