This article is part of the Production ML Series of Articles. It continues the review of model deployment options that we started in Production ML: Model Deployment and Serving Introduction.

Preparation

This time we will focus on deploying your model to a virtual machine (VM) in AWS EC2. There are a few things to consider when planning the deployment:

- Communication method: how our model will communicate with the rest of the application. In this article, we take the easy path and use a plain REST API. In a production-grade application, you might want to consider a decoupled architecture based on a message broker (Kafka being my favorite option today).

- Expected load: how many requests do you expect the model to process per minute or per hour? The number of requests per time period tells you how fast your API has to return a result. If your estimated load is, say, two requests per hour and you use a decoupled architecture, you need far less computing power than for two or ten requests per second.

- Scaling mechanism: are you going to scale up or out? Scaling up means increasing the power of the one VM you deployed your model to. If you expect a substantial load and your model has to work 24/7, the recommended option is to scale out, that is, to add more VMs to your pool. In this case, in addition to the process described in this article, you will need to set up a boot script, an Auto Scaling group (the AWS counterpart of a VM scale set), and a load balancer in front of it.

- Software updating and patching: which software does your model need to produce a result? Colab is an extremely stable and manageable environment, and you will feel the difference when installing packages on an AWS EC2 VM. Community images very often contain outdated software versions, and you will have to deal with Python 2 and TensorFlow 1.9 more often than you would expect. I recommend carefully planning the software you will use and reducing the number of packages to the necessary minimum. Among the necessary packages, prefer the most common and easily installed versions.
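
To make the expected-load question concrete, here is a rough sizing sketch. It assumes Little's law (average requests in flight = arrival rate × time per request) and a hypothetical inference latency of 0.5 seconds. The function name and all numbers are illustrative assumptions, not measurements.

```python
import math

def workers_needed(requests_per_second: float, seconds_per_request: float) -> int:
    """Estimate the average number of requests in flight (Little's law),
    rounded up to whole workers. Always returns at least one worker."""
    in_flight = requests_per_second * seconds_per_request
    return max(1, math.ceil(in_flight))

# Hypothetical latency of 0.5 s per prediction (an assumption, not a benchmark).
LATENCY_S = 0.5

print(workers_needed(2 / 3600, LATENCY_S))  # two requests per hour   -> 1
print(workers_needed(2, LATENCY_S))         # two requests per second -> 1
print(workers_needed(10, LATENCY_S))        # ten requests per second -> 5
```

An estimate like this only covers the average case; traffic spikes and slow cold starts would push the real number higher.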

The task

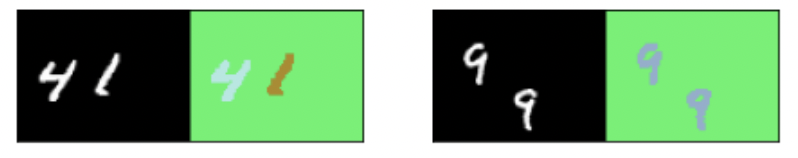

I wanted to demonstrate something interesting and visual in this article. It could be pretty boring to send 20 numbers to a model and receive one or a few classes. I wanted this to be more visual and less of an easy task. So I picked a visual semantic segmentation task where we will teach the model to segment numbers on the picture, like so:

On the left of the picture above, you see the black-and-white input image containing two digits: one and six. On the right, you see the same image with the segmentation mask applied to it. In other words, the model tells the digits in one picture apart by assigning each pixel a color that is unique to the digit it belongs to.
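
The color-coding step can be sketched as a simple palette lookup. This is a minimal illustration, assuming the model output has already been reduced to one integer class id per pixel; the palette colors and the convention that id 10 means background are assumptions made for the example.

```python
import numpy as np

# Hypothetical palette: row i holds the RGB color for class id i.
# Ids 0-9 stand for the digits, id 10 for the background (an assumption).
PALETTE = np.array([
    [230, 25, 75], [60, 180, 75], [255, 225, 25], [0, 130, 200],
    [245, 130, 48], [145, 30, 180], [70, 240, 240], [240, 50, 230],
    [210, 245, 60], [250, 190, 190], [0, 0, 0],
], dtype=np.uint8)

def colorize(label_map: np.ndarray) -> np.ndarray:
    """Turn an (H, W) array of class ids into an (H, W, 3) RGB image."""
    return PALETTE[label_map]

# Tiny 2 x 3 label map: background, then pixels of digit 1 and digit 6.
label_map = np.array([[10, 1, 1],
                      [10, 6, 6]])
rgb = colorize(label_map)
print(rgb.shape)  # (2, 3, 3)
```

Indexing the palette with the whole label map lets NumPy do the per-pixel lookup in one step, without an explicit loop.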

This kind of model has many real-world applications. For example, it is used in vertical farming to determine when a tomato is ready to be picked. It can also be used for spotting defects or on waste-recycling belts.

The dataset

We will use the M2NIST dataset to train our model. It is a version of the popular MNIST dataset with some important enhancements: each picture contains up to three digits, and each picture comes with a segmentation mask that labels every pixel as belonging to a specific digit.

The dataset contains 5000 data points, and you can download it from this page:

If you follow this article, I would recommend you load/prepare the dataset and train your model in Colab.

First, as usual, we import some libraries:

    import os
    import zipfile

    import PIL.Image, PIL.ImageFont, PIL.ImageDraw
    import numpy as np
    from matplotlib import pyplot as plt
    import tensorflow as tf
    import tensorflow_datasets as tfds
    from sklearn.model_selection import train_test_split

Load the zip file and extract it to your Colab execution environment:

    # Download the archive from your file storage and save it to a local folder
    !wget --no-check-certificate \
        <put link to your file here> \
        -O /tmp/m2nist.zip

    # Unpack the archive contents into the /tmp folder of the Colab environment
    local_zip = '/tmp/m2nist.zip'
    zip_ref = zipfile.ZipFile(local_zip, 'r')
    zip_ref.extractall('/tmp/training')
    zip_ref.close()

The good news is that this dataset comes as NumPy array files (.npy), which makes it easy to load and preprocess. All data points are located in two files:

- combined.npy has the image files containing the multiple MNIST digits. Each image is of size 64 x 84 (height x width, in pixels).

- segmented.npy has the corresponding segmentation masks. Each segmentation mask is also of size 64 x 84.
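
Before building the input pipeline, it can be worth checking that the two arrays line up. The sketch below uses synthetic arrays in place of the real files; `check_pair` is a hypothetical helper, and the only facts it relies on are the 64 x 84 image size and the one-mask-per-image pairing described above.

```python
import numpy as np

def check_pair(images: np.ndarray, masks: np.ndarray) -> None:
    """Raise ValueError if the images and masks do not match each other."""
    if images.shape[0] != masks.shape[0]:
        raise ValueError("images and masks differ in number of samples")
    # Compare only height and width, so masks with an extra trailing
    # dimension are accepted as well.
    if images.shape[1:3] != (64, 84) or masks.shape[1:3] != (64, 84):
        raise ValueError("expected height x width of 64 x 84")

# Synthetic stand-ins; with the real data you would pass the results of
# np.load('/tmp/training/combined.npy') and np.load('/tmp/training/segmented.npy').
images = np.zeros((4, 64, 84), dtype=np.uint8)
masks = np.zeros((4, 64, 84), dtype=np.uint8)
check_pair(images, masks)
print("shapes are consistent")
```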

Let’s create a couple of helper functions to load and prepare data points:

    BATCH_SIZE = 32

    def read_image_and_annotation(image, annotation):
        '''
        Casts the image and annotation to their expected data types and
        normalizes the input image so that each pixel is in the range [-1, 1].

        Args:
          image (numpy array) -- input image
          annotation (numpy array) -- ground truth label map

        Returns:
          preprocessed image-annotation pair
        '''
        image = tf.cast(image, dtype=tf.float32)
        # Add a trailing channel dimension: (height, width) -> (height, width, 1)
        image = tf.reshape(image, (image.shape[0], image.shape[1], 1,))
        annotation = tf.cast(annotation, dtype=tf.int32)
        # Scale pixel values from [0, 255] to [-1, 1]
        image = image / 127.5
        image -= 1

        return image, annotation

    def get_training_dataset(images, annos):
        '''
        Prepares shuffled batches of the training set.

        Args:
          images (numpy array) -- images of the train set
          annos (numpy array) -- label maps of the train set

        Returns:
          tf Dataset containing the preprocessed train set
        '''
        training_dataset = tf.data.Dataset.from_tensor_slices((images, annos))
        training_dataset = training_dataset.map(read_image_and_annotation)
        training_dataset = training_dataset.shuffle(512, reshuffle_each_iteration=True)
        training_dataset = training_dataset.batch(BATCH_SIZE)
        # Repeat indefinitely; the number of steps per epoch is set at training time
        training_dataset = training_dataset.repeat()
        # -1 is the value of tf.data.AUTOTUNE: let tf.data pick the buffer size
        training_dataset = training_dataset.prefetch(-1)

        return training_dataset

    def get_validation_dataset(images, annos):
        '''
        Prepares batches of the validation set.

        Args:
          images (numpy array) -- images of the val set
          annos (numpy array) -- label maps of the val set

        Returns:
          tf Dataset containing the preprocessed validation set
        '''
        validation_dataset = tf.data.Dataset.from_tensor_slices((images, annos))
        validation_dataset = validation_dataset.map(read_image_and_annotation)
        validation_dataset = validation_dataset.batch(BATCH_SIZE)
        validation_dataset = validation_dataset.repeat()

        return validation_dataset

    def get_test_dataset(images, annos):
        '''
        Prepares batches of the test set.

        Args:
          images (numpy array) -- images of the test set
          annos (numpy array) -- label maps of the test set

        Returns:
          tf Dataset containing the preprocessed test set
        '''
        test_dataset = tf.data.Dataset.from_tensor_slices((images, annos))
        test_dataset = test_dataset.map(read_image_and_annotation)
        # drop_remainder=True discards the last batch if it is incomplete
        test_dataset = test_dataset.batch(BATCH_SIZE, drop_remainder=True)

        return test_dataset

    def load_images_and_segments():
        '''
        Loads the images and segments as numpy arrays from npy files
        and makes splits for training, validation and test datasets.

        Returns:
          3 tuples containing the train, val, and test splits
        '''
        # Load images and segmentation masks.
        images = np.load('/tmp/training/combined.npy')
        segments = np.load('/tmp/training/segmented.npy')

        # First split: 80% training, 20% held out.
        train_images, val_images, train_annos, val_annos = train_test_split(images, segments, test_size=0.2, shuffle=True)
        # Second split: the held-out part becomes 80% validation, 20% test.
        val_images, test_images, val_annos, test_annos = train_test_split(val_images, val_annos, test_size=0.2, shuffle=True)

        return (train_images, train_annos), (val_images, val_annos), (test_images, test_annos)

Now we can use the helper functions to load our dataset and prepare it for training:

    # Load the dataset
    train_slices, val_slices, test_slices = load_images_and_segments()

    # Create the training, validation, and test datasets.
    training_dataset = get_training_dataset(train_slices[0], train_slices[1])
    validation_dataset = get_validation_dataset(val_slices[0], val_slices[1])
    test_dataset = get_test_dataset(test_slices[0], test_slices[1])

The model
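
Before looking at the layers, a bit of arithmetic on the splits created above is useful. The training and validation datasets call `repeat()`, so they never run out, and Keras then needs explicit step counts per epoch. The sketch below assumes the 5000 samples mentioned earlier. `split_sizes` is a hypothetical helper, and its rounding rule (the held-out part is rounded up) reflects my understanding of how scikit-learn treats a fractional `test_size`.

```python
def split_sizes(n_samples: int, holdout_percent: int = 20) -> tuple:
    """Return (train, val, test) sizes for two successive hold-out splits."""
    # Ceiling division in integers: the held-out part is rounded up.
    first_holdout = -(-n_samples * holdout_percent // 100)
    train = n_samples - first_holdout
    test = -(-first_holdout * holdout_percent // 100)
    val = first_holdout - test
    return train, val, test

BATCH_SIZE = 32
train, val, test = split_sizes(5000)

print(train, val, test)     # 4000 800 200
print(train // BATCH_SIZE)  # 125 full training batches per epoch
print(val // BATCH_SIZE)    # 25 full validation batches
print(test // BATCH_SIZE)   # 6 test batches, since drop_remainder=True
```

Under these assumptions, 125 and 25 are natural candidates for the `steps_per_epoch` and `validation_steps` arguments of `model.fit`.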

Our image segmentation model will consist of two parts:

- Downsampling: a convolutional neural network that extracts features from the image. The output of this part is a reduced-size feature map extracted from the input image.

- Upsampling: FCN-8, a fully convolutional network that takes the features from the downsampling part and scales them back up to the size of the input image, producing a class prediction for every pixel. The number eight stands for the eight-fold increase in size performed by the final upsampling step.
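
The interplay of the two parts comes down to simple size arithmetic. The sketch below assumes that each downsampling block ends with 2 x 2 max pooling with stride 2 and no padding, and it uses three blocks purely as a placeholder; the actual number of blocks is not stated in this part of the article.

```python
def feature_map_size(height: int, width: int, n_blocks: int) -> tuple:
    """Size after n_blocks of 2x2 max pooling with stride 2 and no padding."""
    for _ in range(n_blocks):
        # Unpadded pooling drops a leftover row or column, hence floor division.
        height, width = height // 2, width // 2
    return height, width

# n_blocks=3 is a placeholder, not the configuration used by the article.
h, w = feature_map_size(64, 84, n_blocks=3)
print(h, w)          # 8 10
print(h * 8, w * 8)  # 64 80
```

Note that an eight-fold upsampling of 8 x 10 gives 64 x 80, which is narrower than the original 84 pixels. Architectures of this kind usually deal with such mismatches by padding the input or cropping the output.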

We will not focus too much on the model architecture here, as our primary focus is deployment. You will be able to see the architecture of both parts in the code, so let’s move on.

The downsampling part of the model:

    # Parameter describing where the channel dimension is found in our dataset
    IMAGE_ORDERING = 'channels_last'

    def conv_block(input, filters, strides, pooling_size, pool_strides):
        '''
        Args:
          input (tensor) -- batch of images or features
          filters (int) -- number of filters of the Conv2D layers
          strides (int) -- passed to the Conv2D layers as their second positional
                           argument, which Keras interprets as the kernel size
          pooling_size (int) -- pooling size of the MaxPooling2D layer
          pool_strides (int) -- strides setting of the MaxPooling2D layer

        Returns:
          (tensor) max pooled and batch-normalized features of the input
        '''
        # Use the functional syntax to stack the layers
        x = tf.keras.layers.Conv2D(filters, strides, padding='same', data_format=IMAGE_ORDERING)(input)
        x = tf.keras.layers.LeakyReLU()(x)
        x = tf.keras.layers.Conv2D(filters, strides, padding='same')(x)
        x = tf.keras.layers.LeakyReLU()(x)
        # Pass the pooling arguments through instead of relying on the defaults
        x = tf.keras.layers.MaxPooling2D(pool_size=pooling_size, strides=pool_strides)(x)
        x = tf.keras.layers.BatchNormalization()(x)

        return x

Please continue reading the second part of the article: