In this article, we continue looking into the implementation of LLM-based agents. Please see the article Production LLM: how to harness the power of LLM in real-life business cases for more background and a description of the series.

The purpose

So far, we’ve implemented Agents that use vector and SQL databases to answer user requests. Both have their use cases and pros and cons. But what if we need more? What if our user can ask for both articles and products? And also something else?

In such cases, neither Agent would be enough on its own. We need something a bit more robust.

Let’s imagine that we have a shop selling hiking products. We want to recommend articles for users to read, and recommend products mentioned in, or relevant to, those articles. It would also be nice to show users the weather forecast so they can plan their trips, read some hiking tips, and buy anything they need.

In this case, we need the Agent to be able to use multiple tools to answer user queries.

And this is precisely what we are going to create now.

Before you read further, please make sure to read and understand the previous two articles:

- Production LLM: Vector Retriever

- Production LLM: SQL Agent

We will use both of those Agents in the current article.

Elements

I hope you have read the articles above and are all set to implement an Agent with tools.

For an Agent with tools, we need an Agent and tools. Doesn’t sound too complicated, right? 🙂

Most popular frameworks, like LangChain or LlamaIndex, provide their own APIs to create and use Agents with tools. For the sake of coherence, we will continue using LangChain for now.

LangChain provides a dedicated constructor, create_tool_calling_agent, that initializes an Agent with tools for us. We pass it the LLM of our choice, the list of tools, and a prompt, and then run the resulting Agent with AgentExecutor.

Each tool is a Python function that receives input generated by the LLM (for example, a query) and returns an output. Each function is wrapped with LangChain’s @tool decorator. Let’s take a look at the example below.

Implementation

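First, let’s make sure the Agent’s verbose output is easy to read in the notebook by wrapping long lines.

```python
from IPython.display import HTML, display

def set_css(*args):
    # Wrap long lines in <pre> output blocks instead of scrolling horizontally.
    # *args accepts the info object IPython may pass to pre_run_cell callbacks.
    display(HTML('''
    <style>
      pre {
        white-space: pre-wrap;
      }
    </style>
    '''))

# Apply the style before every cell runs
get_ipython().events.register('pre_run_cell', set_css)
```

Now, we can install the LangChain, Pinecone, and BigQuery SDKs.

```
!pip install --quiet langchain-openai tiktoken langchain langchain-community langchain-experimental sqlalchemy-bigquery google-cloud-bigquery pinecone-client
```

And prepare our credentials: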

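```python
import json
import os

# OpenAI credentials and the model used by all Agents
CHAT_GPT_API_KEY = '<OpenAI API key>'
CHAT_GPT_ORG = '<OpenAI API organization>'
model_name = 'gpt-4'

# BigQuery: service account key (as a dict), GCP project, and the dataset with products
BQ_SERVICE_ACCOUNT_CREDENTIALS = {}  # <Your BQ service account credentials>
project_name = '<GCP project name>'
db_name = '<name of BQ database with products>'

# Write the service account key to a file once, so SQLAlchemy can reference it by path
service_account_file = 'credentials_bg.json'
if os.path.exists(service_account_file):
    print('File is already there')
else:
    with open(service_account_file, 'w') as outfile:
        outfile.write(json.dumps(BQ_SERVICE_ACCOUNT_CREDENTIALS))

# Pinecone: API key and the index with blog articles
PINECONE_API_KEY = '<Pinecone API key>'
index_name = 'blog-articles'
```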
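The snippet above pastes secrets directly into the notebook. If you would rather keep them out of the code, one option is to read them from environment variables. Below is a minimal sketch of that idea; the helper require_env and the variable names in the comments are illustrative choices, not something the rest of the article depends on.

```python
import os

def require_env(name: str) -> str:
    """Return an environment variable's value, failing loudly if it is missing."""
    value = os.environ.get(name)
    if not value:
        raise RuntimeError(f'Environment variable {name!r} is not set')
    return value

# Illustrative usage (variable names are assumptions; set them before running):
# CHAT_GPT_API_KEY = require_env('OPENAI_API_KEY')
# PINECONE_API_KEY = require_env('PINECONE_API_KEY')
```

Failing early with a clear message is usually easier to debug than an authentication error deep inside an Agent run.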

Our Agent will use four tools in this example:

- Products recommender: the Agent connected to the BigQuery dataset with the list of products, from the article Production LLM: SQL Agent.

- Articles recommender: the Agent connected to the Pinecone vector database containing articles from this blog, from the article Production LLM: Vector Retriever.

- A math function that performs exponentiation.

- A math function that adds two numbers.
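The two math tools are ordinary Python functions. The test query at the end of this article asks “what’s 3 plus 5 raised to the 2.743?”, which can be read in two ways, and either reading requires combining both functions. Here is a plain-Python sketch (no LangChain; the names power and add are hypothetical stand-ins for the exponentiate and add_func tools defined later) that computes both readings.

```python
def power(x: float, y: float) -> float:
    """Raise 'x' to the 'y' (same logic as the exponentiate tool)."""
    return x ** y

def add(x: float, y: float) -> float:
    """Add 'x' to 'y' (same logic as the add_func tool)."""
    return x + y

# Reading 1: 3 + (5 ** 2.743), following the usual operator precedence
first_reading = add(3, power(5, 2.743))

# Reading 2: (3 + 5) ** 2.743
second_reading = power(add(3, 5), 2.743)

print(f'3 + 5^2.743   = {first_reading:.2f}')
print(f'(3 + 5)^2.743 = {second_reading:.2f}')
```

Which tool the Agent calls first depends on how the LLM interprets the question, so it is worth checking the verbose log to see which reading it chose.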

The code below should be familiar to you from the SQL Agent article.

```python
import os

from langchain.agents import create_sql_agent
from langchain_community.agent_toolkits import SQLDatabaseToolkit
from langchain_community.utilities.sql_database import SQLDatabase
from langchain_openai import ChatOpenAI as LangChainChatOpenAI

def get_recommendation(query):
    # Connect to the BigQuery dataset with products through SQLAlchemy
    # (absolute path to the key file written above; in Colab this resolves to /content/...)
    path_to_sa_file = os.path.abspath(service_account_file)
    sqlalchemy_url = f'bigquery://{project_name}/{db_name}?credentials_path={path_to_sa_file}'
    db = SQLDatabase.from_uri(sqlalchemy_url)

    # Generation settings for the SQL Agent's LLM
    max_output_tokens = 1024
    temperature = 0.2
    top_p = 0.95
    frequency_penalty = 1
    presence_penalty = 1

    get_recommendation_llm = LangChainChatOpenAI(
        openai_api_key = CHAT_GPT_API_KEY,
        openai_organization = CHAT_GPT_ORG,
        model = model_name,
        max_tokens = int(max_output_tokens),
        temperature = float(temperature),
        top_p = float(top_p),
        frequency_penalty = float(frequency_penalty),
        presence_penalty = float(presence_penalty)
    )

    # The toolkit and the Agent share the same LLM
    sql_toolkit = SQLDatabaseToolkit(db = db, llm = get_recommendation_llm)

    sql_agent_executor = create_sql_agent(
        llm = get_recommendation_llm,
        toolkit = sql_toolkit,
        verbose = True,
        max_iterations = 20
    )

    # Instructions prepended to every query
    context = '''
    You are a helpful assistant who should recommend products for the user.
    You will find the list of products in the dataset you were provided with.
    You will find discount number in Discount column.
    Listing Price column contains price of the product without discount.
    Number of reviews are in the Reviews column.
    When asked to recommend product, provide product name from Product Name column, price from Sale Price column and rating from the Rating column of each product.
    Based on Description column write a short description for each product you recommend. Make it interesting and engaging.
    If query returned no results try to relax the conditions and search for semantically similar items or items of a wider/higher category.
    Repeat search as many times as you need to return results. Each time use semantically similar or wider search request terms.
    '''

    # Run the SQL Agent and return its final answer as a string
    result = sql_agent_executor.invoke({'input': context + query})
    return result['output']
```

The following Agent was described in the vector Agent article.

```python
import os

from langchain.chains import RetrievalQA
from langchain_community.vectorstores import Pinecone as LangChainPinecone
from langchain_openai import ChatOpenAI as LangChainChatOpenAI
from langchain_openai import OpenAIEmbeddings

def get_article(query):
    # The Pinecone integration reads the API key from the environment
    os.environ['PINECONE_API_KEY'] = PINECONE_API_KEY

    # Generation settings for the retriever Agent's LLM
    max_output_tokens = 1024
    temperature = 0.2
    top_p = 0.95
    frequency_penalty = 1
    presence_penalty = 1

    # Embeddings must match the model used to build the index
    embeddings = OpenAIEmbeddings(
        api_key = CHAT_GPT_API_KEY,
        organization = CHAT_GPT_ORG
    )

    get_article_llm = LangChainChatOpenAI(
        openai_api_key = CHAT_GPT_API_KEY,
        openai_organization = CHAT_GPT_ORG,
        model = model_name,
        max_tokens = int(max_output_tokens),
        temperature = float(temperature),
        top_p = float(top_p),
        frequency_penalty = float(frequency_penalty),
        presence_penalty = float(presence_penalty)
    )

    # Connect to the existing index with blog articles
    doc_db = LangChainPinecone.from_existing_index(index_name = index_name, embedding = embeddings)

    # 'stuff' puts all retrieved chunks into one prompt;
    # MMR fetches 5 candidates and keeps 3 relevant but diverse ones
    qa = RetrievalQA.from_chain_type(
        llm = get_article_llm,
        chain_type = 'stuff',
        retriever = doc_db.as_retriever(search_type = 'mmr', search_kwargs = {'k': 3, 'fetch_k': 5})
    )

    # Instructions prepended to every query
    context = '''
    You are helpful assistant in the blog of Alex Ostrovskyy.
    You can only answer questions about the materials of blog.
    Answer only based on the data you were provided with.
    Provide Article link from the data you are provided with.
    '''

    result = qa.invoke({'query': context + query})
    return result['result']
```
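The retriever above uses search_type="mmr" with fetch_k=5 and k=3: it first fetches the 5 chunks most similar to the query, then keeps 3 of them, trading relevance against redundancy (Maximal Marginal Relevance). Here is a minimal pure-Python sketch of that selection step, assuming cosine similarity and a lambda_mult weight of 0.5 (to my knowledge LangChain’s default, but treat it as an assumption; the library’s internals may differ in details). The toy vectors are made up for illustration; candidates 0 and 1 are exact duplicates.

```python
import math

def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity of two non-zero vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))

def mmr(query_vec: list[float], candidates: list[list[float]],
        k: int = 3, lambda_mult: float = 0.5) -> list[int]:
    """Return indices of k candidates picked greedily by Maximal Marginal Relevance."""
    selected: list[int] = []
    remaining = list(range(len(candidates)))
    while remaining and len(selected) < k:
        def score(i: int) -> float:
            relevance = cosine(query_vec, candidates[i])
            # Penalty: similarity to the closest already selected candidate
            redundancy = max((cosine(candidates[i], candidates[j]) for j in selected), default=0.0)
            return lambda_mult * relevance - (1 - lambda_mult) * redundancy
        best = max(remaining, key=score)
        selected.append(best)
        remaining.remove(best)
    return selected

# fetch_k = 5 toy candidate embeddings
query_vec = [1.0, 0.0, 0.0]
candidates = [[0.9, 0.1, 0.0], [0.9, 0.1, 0.0], [0.8, 0.0, 0.6], [0.8, -0.6, 0.0], [0.0, 0.0, 1.0]]
print(mmr(query_vec, candidates, k=3))  # → [0, 3, 2]
```

The duplicate of the first pick is skipped even though it is the second most relevant candidate, which is why MMR tends to return more varied articles than plain similarity search.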

Now, we can package both Agents as tools. To do this, we will use the @tool decorator from LangChain (langchain_core.tools).

The decorator wraps the function so that the tool-calling Agent can use it: the Agent’s LLM generates the input (for example, a search query), the wrapper passes it to the function, and the function’s response goes back to the tool-calling Agent. The function’s name, docstring, and type hints are used to describe the tool to the LLM, so they should be clear and accurate.
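To illustrate what such a decorator does, here is a heavily simplified, hypothetical stand-in called simple_tool. It is not LangChain’s implementation (the real @tool builds a richer argument schema and more), but it captures the idea: record the function’s name, docstring, and parameter types, which is what the LLM sees, and expose an invoke method that takes the LLM-generated arguments as a dictionary.

```python
import inspect
from typing import Any, Callable

class SimpleTool:
    """Hypothetical, minimal stand-in for a LangChain tool object."""

    def __init__(self, func: Callable[..., Any]):
        self.func = func
        self.name = func.__name__
        # The docstring becomes the description the LLM reads when choosing a tool
        self.description = inspect.getdoc(func) or ''
        # Parameter names and annotated types tell the LLM which arguments to generate
        self.args = {
            name: getattr(param.annotation, '__name__', str(param.annotation))
            for name, param in inspect.signature(func).parameters.items()
        }

    def invoke(self, tool_input: dict[str, Any]) -> Any:
        # Unpack the LLM-generated arguments and call the wrapped function
        return self.func(**tool_input)

def simple_tool(func: Callable[..., Any]) -> SimpleTool:
    """Hypothetical decorator that turns a plain function into a SimpleTool."""
    return SimpleTool(func)

@simple_tool
def demo_power(x: float, y: float) -> float:
    """Raise 'x' to the 'y'."""
    return x ** y

print(demo_power.name, demo_power.args, demo_power.description)
print(demo_power.invoke({'x': 2, 'y': 10}))
```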

```python
from langchain_core.tools import tool

# tool that raises x to the power of y
@tool
def exponentiate(x: float, y: float) -> float:
    """Raise 'x' to the 'y'."""
    return x**y

# tool that adds two numbers
@tool
def add_func(x: float, y: float) -> float:
    """Adds 'x' to 'y'."""
    return x + y

# product recommendation tool: delegates to the SQL Agent
@tool
def recommend_product(query: str) -> str:
    """Recommends products relevant to the user's request."""
    return get_recommendation(query)

# article recommendation tool: delegates to the vector retriever Agent
@tool
def recommend_article(query: str) -> str:
    """Recommends articles relevant to the user's request."""
    return get_article(query)
```

The tool-calling Agent will decide when to use each tool based on the user’s request and the tools’ descriptions, so the decision logic is fairly simple. If we want to describe more complex logic or predefine the sequence in which the Agent has to use the tools, we have two options:

- enhance the prompt of the Agent,

- use a graph to describe the sequence of calls and relationships.

We will review graphs in the following article; for now, we will not change the prompt. If you want to see how the prompt can be enhanced, please check the previous article, Production LLM: SQL Agent.
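Conceptually, the loop behind a tool-calling Agent works like this: the LLM either answers or requests a tool by name with arguments; the executor runs that tool, records the result in a scratchpad, and asks the LLM again. Below is a framework-free sketch of that loop. fake_llm is a hypothetical, hard-coded stand-in for the model’s decisions, and toy_add and toy_power are hypothetical stand-ins for the real tools (named so they do not overwrite them in the notebook).

```python
from typing import Any, Callable

def toy_add(x: float, y: float) -> float:
    return x + y

def toy_power(x: float, y: float) -> float:
    return x ** y

# Tools are looked up by the name the LLM asks for
TOOLS: dict[str, Callable[..., Any]] = {'add_func': toy_add, 'exponentiate': toy_power}

def fake_llm(question: str, scratchpad: list[tuple[str, dict, Any]]) -> dict:
    """Hypothetical scripted 'model' for the question '3 plus 5 raised to the 2'."""
    if not scratchpad:
        return {'tool': 'exponentiate', 'args': {'x': 5, 'y': 2}}
    if len(scratchpad) == 1:
        return {'tool': 'add_func', 'args': {'x': 3, 'y': scratchpad[0][2]}}
    return {'answer': f'The result is {scratchpad[-1][2]}'}

def run_agent(question: str, max_iterations: int = 5) -> str:
    scratchpad: list[tuple[str, dict, Any]] = []
    for _ in range(max_iterations):
        decision = fake_llm(question, scratchpad)
        if 'answer' in decision:
            return decision['answer']
        # Run the requested tool and record (tool name, arguments, result)
        result = TOOLS[decision['tool']](**decision['args'])
        scratchpad.append((decision['tool'], decision['args'], result))
    return 'Stopped: too many iterations'

print(run_agent("What's 3 plus 5 raised to the 2?"))  # → The result is 28
```

In the real Agent, the scratchpad corresponds to the agent_scratchpad placeholder in the prompt, and max_iterations plays the same safety role as in AgentExecutor.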

You would be surprised by how simple it is to construct a tool-calling Agent now. Please see the code below.

from langchain_core.prompts import ChatPromptTemplate

from langchain.agents import create_tool_calling_agent, AgentExecutor

# initialize OpenAI LLM model

llm = LangChainChatOpenAI(

openai_api_key = CHAT_GPT_API_KEY,

openai_organization = CHAT_GPT_ORG,

model = model_name,

max_tokens = int(max_output_tokens),

temperature = float(temperature),

)

# here you can checnge prompt

prompt = ChatPromptTemplate.from_messages([

("system", "you're a helpful assistant"),

("human", "{input}"),

("placeholder", "{agent_scratchpad}"),

])

# simply add all tools into the list

tools = [exponentiate, add_func, recommend_product, recommend_article]

# construct agent with tools

agent = create_tool_calling_agent(llm, tools, prompt)

agent_executor = AgentExecutor(agent = agent, tools = tools, verbose = True)

# and test our tool-calling Agent on fairly complex request which will require Agent to use all the tools

result = agent_executor.stream({"input": "I need shoes for hiking with good discount. Do you have any articles about LLMs? Also, what's 3 plus 5 raised to the 2.743?", })

output = ''

for res in result:

output += res['messages'][0].content

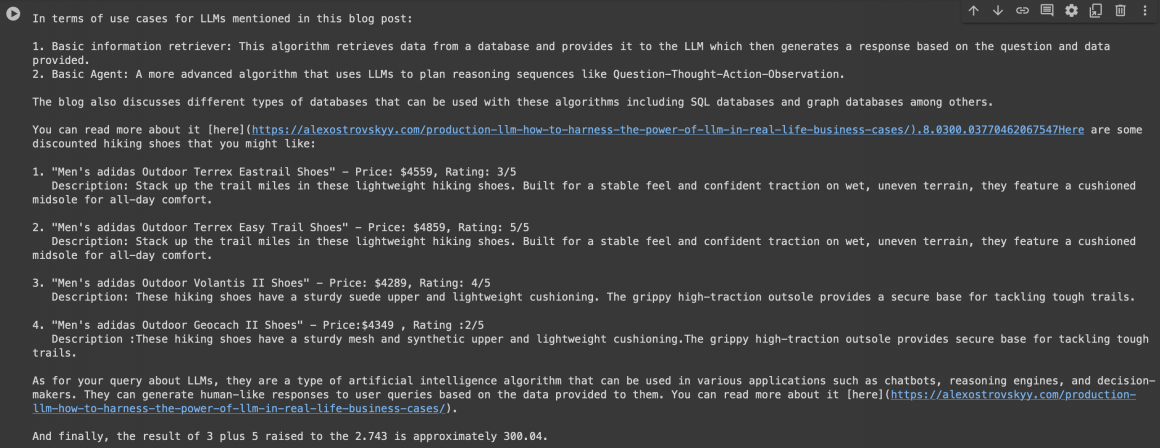

print(output)The output for me looks like the following:

As you can see, the Agent has used all the tools to solve our puzzle. Impressive, right?

Improvements

The Agent with tools is great and can cover quite complicated use cases. But there is one thing it does not give us out of the box: explicit control over feeding the output of one tool into another as its input.

Of course, we can combine multiple tools under one function and turn this function into a tool. Inside this combined tool, we can call the tools one after another, creating a fixed sequence.
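A minimal sketch of such a combined tool follows. The stub functions are hypothetical stand-ins for get_article and get_recommendation; in the notebook you would call the real ones and decorate the combined function with @tool. The point is the fixed order: the first step’s output always becomes the second step’s input.

```python
def stub_get_article(query: str) -> str:
    """Hypothetical stub standing in for get_article."""
    return f'Article recommended for: {query}'

def stub_get_recommendation(query: str) -> str:
    """Hypothetical stub standing in for get_recommendation."""
    return f'Products for: {query}'

def article_then_products(query: str) -> str:
    """Recommend an article, then products relevant to that article."""
    article = stub_get_article(query)
    # The first step's output is passed verbatim as the second step's input
    products = stub_get_recommendation(article)
    return f'{article}\n{products}'

print(article_then_products('hiking in the rain'))
```

This works well for a linear pipeline, but the order is hard-coded: the second step runs no matter what the first one returned.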

But what if the flow is not linear, and the output of one tool should not always go to another tool as input? Depending on the output, we may want to decide what the next step should be.

In such a case, we must use another solution – Multi-Agent RAG Graph.
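To make the difference concrete, here is a tiny, framework-free sketch of conditional routing: each node is a function that updates a shared state, and a router inspects a node’s output to choose the next node. The node names and the “no products found” rule are hypothetical and only illustrate the idea; they are not the graph built in the next article.

```python
from typing import Callable

def search_products(state: dict) -> dict:
    """Hypothetical node: pretend the catalog has nothing for 'ice axe'."""
    found = [] if 'ice axe' in state['query'] else ['Trail shoes']
    return {**state, 'products': found}

def suggest_article(state: dict) -> dict:
    """Hypothetical fallback node, used only when no products were found."""
    return {**state, 'answer': f"No products found; here is an article about {state['query']}"}

def present_products(state: dict) -> dict:
    return {**state, 'answer': 'Recommended: ' + ', '.join(state['products'])}

def route_after_search(state: dict) -> str:
    # The next step depends on the previous step's output
    return 'present_products' if state['products'] else 'suggest_article'

NODES: dict[str, Callable[[dict], dict]] = {
    'search_products': search_products,
    'suggest_article': suggest_article,
    'present_products': present_products,
}
ROUTERS: dict[str, Callable[[dict], str]] = {'search_products': route_after_search}

def run_graph(query: str, start: str = 'search_products') -> str:
    state, node = {'query': query}, start
    while node is not None:
        state = NODES[node](state)
        router = ROUTERS.get(node)
        node = router(state) if router else None  # a node without a router ends the run
    return state['answer']

print(run_graph('hiking shoes'))
print(run_graph('ice axe'))
```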

So, if everything about multi-tool Agents is clear to you, you can go ahead and build the Multi-Agent RAG Graph in the following article!

See you in the next article!

Disclaimer

The content provided in this blog is for informational and demonstration purposes only. The ideas, code samples, and proof of concept solutions presented are intended to illustrate potential approaches and should not be taken as complete, production-ready implementations. While efforts have been made to ensure accuracy and clarity, these examples may lack comprehensive security, reliability, or optimization measures necessary for deployment in a production environment.

Readers are encouraged to conduct their own research and testing if they intend to implement similar solutions in real-world applications. It is recommended to consult with a qualified professional or a software architect to adapt any solution to fit the specific requirements and constraints of their project.

This blog assumes no responsibility or liability for any errors or omissions and disclaims any liability for any actions taken based on the material presented here.