Key Takeaways

- From Chatbots to Agentic AI: The 2026 roadmap moves beyond simple text generation to Agentic AI—systems that can autonomously reason, plan, and execute complex tasks like claims processing and code refactoring.

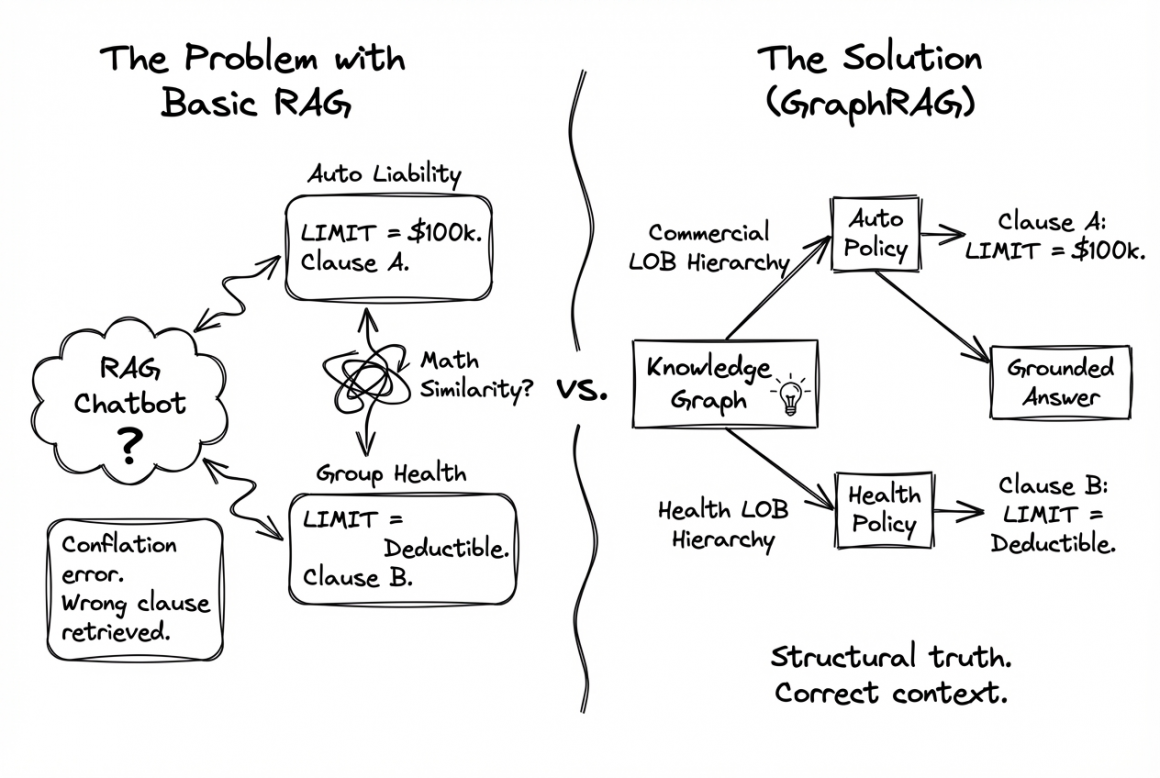

- RAG Evolved into GraphRAG: Standard RAG (Retrieval-Augmented Generation) struggles with complex reasoning across related documents. The future is GraphRAG, which uses Knowledge Graphs to capture the relationships between policies, grounding answers in how the business is actually structured.

- The “TrustOS” Firewall: Governance should no longer be manual. A centralized “TrustOS” acts as a firewall for all AI models, enforcing compliance and data safety before an agent ever takes action.

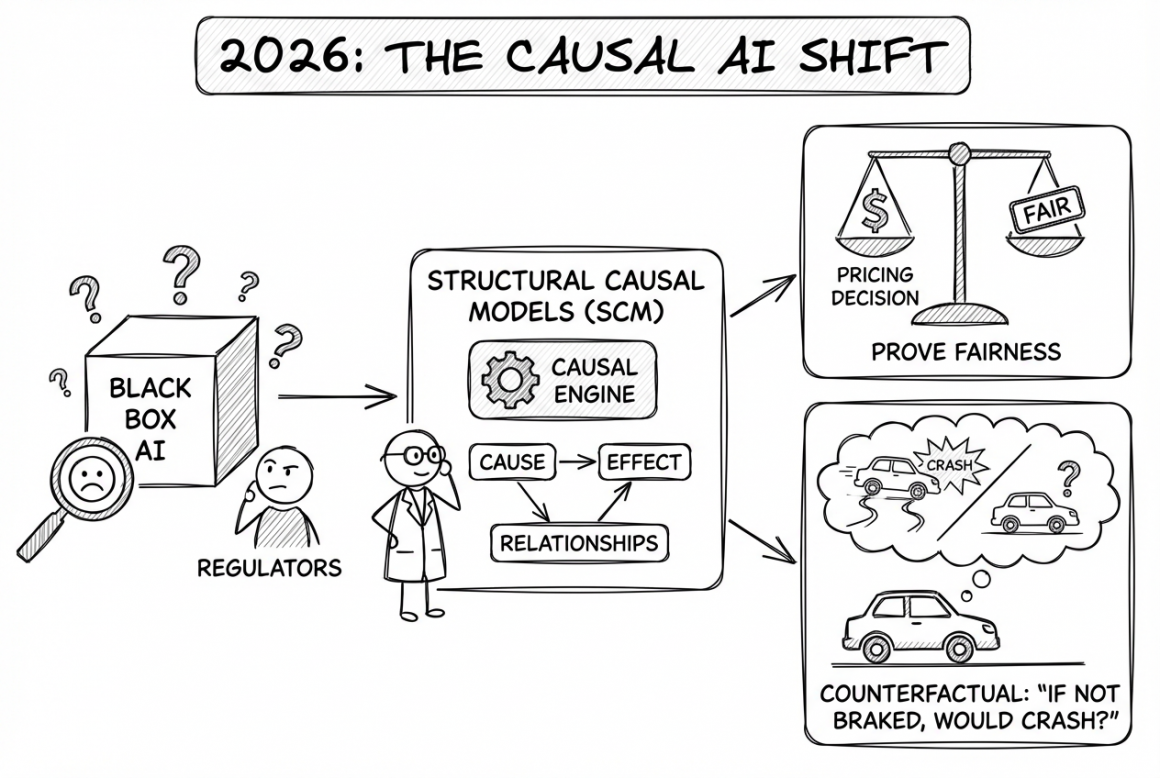

- Causal AI for Reasoning: To make Agentic AI safe, we incorporate Causal AI. This allows the system to model cause-and-effect (the “why” behind a risk), helping satisfy regulatory requirements for explainability.

- Cost-Effective Synthetic Data: Instead of buying expensive datasets, a Synthetic Data Foundry generates privacy-safe data to train models, cutting costs and greatly reducing PII exposure.

As we close out 2025, it is a good time to reflect on the state of AI in our industry. If you are a modern insurance carrier, chances are you have successfully played the first round of the GenAI game. You’ve likely built the “Corporate Chatbot.” You connected a Large Language Model (LLM) to internal documents using RAG (Retrieval-Augmented Generation), gave it a sleek interface, and enabled your employees to search through mountains of PDF policies and wikis using natural language.

It was a necessary first step. But there is so much more you can do.

As we look toward 2026, the question every leader and architect must ask is: How do we move from AI that just “talks” to Agentic AI that “does work”? How do we evolve from systems that summarize text to systems that can autonomously process a claim, price a complex risk, or ensure regulatory compliance without tedious manual work?

The 2026 roadmap isn’t just about “more models.” It is about Agentic AI, Reasoning, and Governance.

Phase 1: The Foundation – Building the “Trusted Cortex”

Before we let Agentic AI take actions, we must ensure it understands the world correctly (Truth) and plays by the rules (Governance). We cannot build faster vehicles without better brakes.

1. TrustOS: The Centralized AI Firewall

Currently, AI governance is often a manual process scattered across Legal and Tech. In 2026, we need a centralized “TrustOS.”

Think of this as an architectural firewall that sits between your users and your agents and models. On the way in, it performs code checks and real-time PII redaction before a model ever receives a prompt; on the way out, it runs bias detection and “hallucination checks” before a response reaches a user or triggers an action. It transforms “Responsible AI” from a policy document into an automated software gatekeeper.
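A minimal sketch of the inbound half of such a gatekeeper, assuming simple regex-based PII detection and a keyword deny-list for prompt injection. The patterns, `inbound_gate`, and `guarded_call` are hypothetical names for illustration; a production TrustOS would rely on dedicated PII-detection and policy services and would add the output-side checks (bias, hallucination) after the model responds.

```python
import re
from dataclasses import dataclass, field
from typing import Callable

# Hypothetical, deliberately simple PII patterns (illustration only).
PII_PATTERNS = {
    "EMAIL": re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+"),
    "SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    "PHONE": re.compile(r"\b\d{3}[-. ]\d{3}[-. ]\d{4}\b"),
}

# Hypothetical deny-list of prompt-injection phrases.
BLOCKED_PHRASES = ("ignore previous instructions",)


@dataclass
class GateResult:
    allowed: bool
    prompt: str
    findings: list = field(default_factory=list)


def redact_pii(text: str) -> tuple:
    """Replace each PII match with a [LABEL] token and report which labels fired."""
    findings = []
    for label, pattern in PII_PATTERNS.items():
        if pattern.search(text):
            findings.append(label)
            text = pattern.sub(f"[{label}]", text)
    return text, findings


def inbound_gate(prompt: str) -> GateResult:
    """Block known injection phrases; otherwise redact PII before the model sees the prompt."""
    if any(phrase in prompt.lower() for phrase in BLOCKED_PHRASES):
        return GateResult(allowed=False, prompt="", findings=["PROMPT_INJECTION"])
    clean, findings = redact_pii(prompt)
    return GateResult(allowed=True, prompt=clean, findings=findings)


def guarded_call(prompt: str, model: Callable[[str], str]) -> str:
    """Every model call goes through the gate; `model` is any prompt -> text callable."""
    gate = inbound_gate(prompt)
    if not gate.allowed:
        raise PermissionError(f"Prompt blocked by gatekeeper: {gate.findings}")
    return model(gate.prompt)
```

For example, `guarded_call("Call 555-123-4567", model)` forwards only `"Call [PHONE]"` to the model. Routing every call through one function is the point: each team's agents inherit the same controls instead of reimplementing them.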

2. GraphRAG: From Standard RAG to Relationships

Today, most chatbots use standard RAG based on “Vector Search,” which retrieves passages whose embeddings are similar to the question. However, in insurance, similar wording can carry vastly different meanings depending on the context.

- The Problem with Basic RAG: The word “Limit” means one thing in Personal Auto Liability and something quite different in Commercial General Liability (CGL). A standard RAG chatbot might conflate these, retrieving a CGL policy clause to answer an Auto question simply because the two passages have nearly identical embeddings.

- The Solution (GraphRAG): By backing our AI with a Knowledge Graph, the system understands the relationships between data points. It knows that Clause A belongs strictly to the Commercial line-of-business (LOB) hierarchy. It grounds answers in the structural truth of the business, something standard RAG alone cannot offer.
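To make the contrast concrete, here is a toy sketch of graph-constrained retrieval, assuming hand-made 3-dimensional embeddings and a tiny parent-pointer hierarchy (all node names and vectors are hypothetical). It is not a full GraphRAG pipeline, which does considerably more (such as building the graph from extracted entities); it only shows how a graph can veto a retrieval that vector similarity alone gets wrong.

```python
import math

# Toy knowledge graph: each node points to its parent in the line-of-business hierarchy.
PARENT = {
    "clause_auto_limit": "personal_auto_liability",
    "clause_cgl_limit": "cgl",
    "personal_auto_liability": "personal_lines",
    "cgl": "commercial_lines",
}

# Toy embeddings: both "limit" clauses sit very close together in vector space.
EMBEDDINGS = {
    "clause_auto_limit": [0.90, 0.10, 0.20],
    "clause_cgl_limit": [0.92, 0.12, 0.18],
}


def cosine(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


def ancestors(node):
    """Walk parent edges up the hierarchy."""
    while node in PARENT:
        node = PARENT[node]
        yield node


def vector_search(query_vec, k=1):
    """Standard RAG retrieval: rank every clause by similarity alone."""
    return sorted(EMBEDDINGS, key=lambda c: cosine(query_vec, EMBEDDINGS[c]), reverse=True)[:k]


def graph_search(query_vec, lob, k=1):
    """Graph-constrained retrieval: only clauses under the question's LOB are eligible."""
    eligible = [c for c in EMBEDDINGS if lob in ancestors(c)]
    return sorted(eligible, key=lambda c: cosine(query_vec, EMBEDDINGS[c]), reverse=True)[:k]
```

For an Auto question whose toy embedding is `[0.93, 0.12, 0.17]`, `vector_search` returns the CGL clause because it is marginally closer, while `graph_search(query, "personal_auto_liability")` returns the Auto clause.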

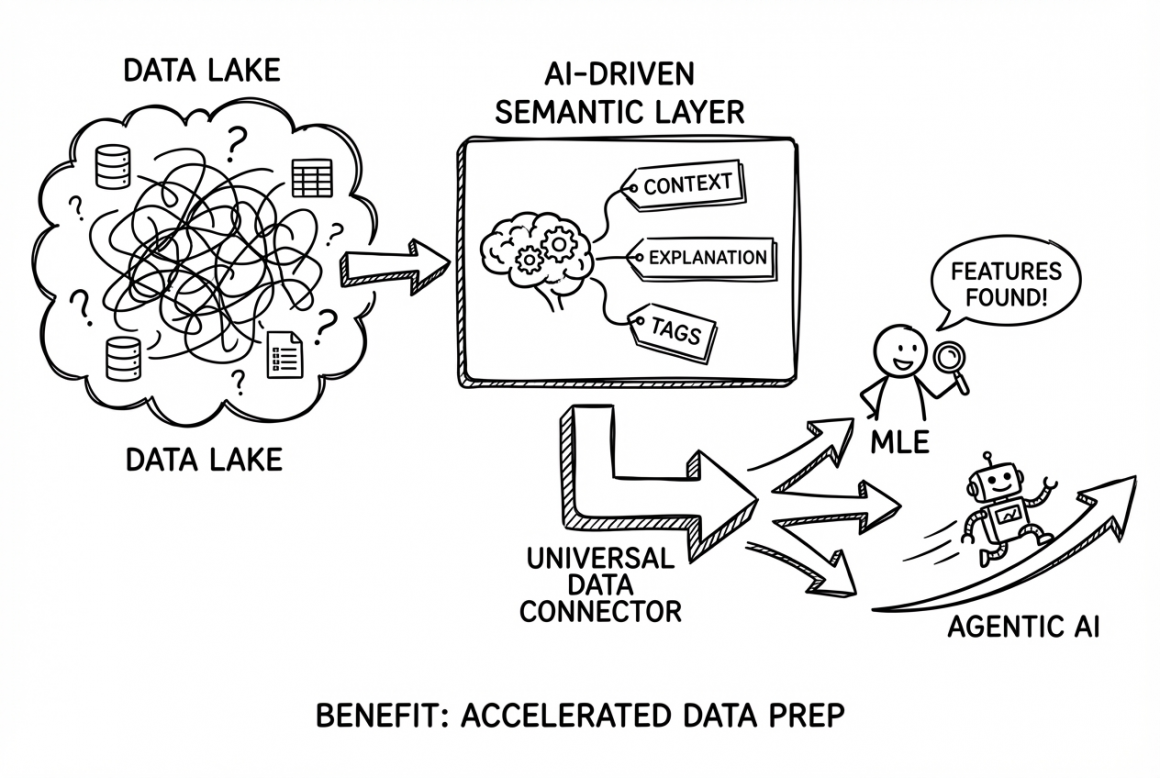

3. Active Metadata “Semantic Layer” (The Universal Connector)

As our data lakes grow, finding the right data becomes a nightmare. We need an AI-driven Semantic Layer. This system automatically tags and explains data context, acting as a Universal Data Connector.

- The Benefit: When a machine learning engineer (MLE) needs features to build a new model, they don’t have to hunt through messy SQL tables. The Semantic Layer guides them to the right data, drastically accelerating data preparation for Agentic AI workflows.
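As a rough illustration of what such a layer hands an engineer, the sketch below models a metadata catalog in which columns carry business-term tags and a PII flag. The table and column names are hypothetical, and the tags are hand-written here, whereas the layer described above would generate them automatically.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ColumnMeta:
    table: str
    column: str
    description: str
    business_terms: frozenset
    contains_pii: bool = False


# Hypothetical catalog entries; cryptic physical names get business-friendly context.
CATALOG = [
    ColumnMeta("clm_hdr_v2", "loss_amt", "Gross incurred loss in USD",
               frozenset({"incurred loss", "claim severity"})),
    ColumnMeta("clm_hdr_v2", "clmt_ssn", "Claimant social security number",
               frozenset({"claimant id"}), contains_pii=True),
    ColumnMeta("pol_master", "lob_cd", "Line of business code",
               frozenset({"line of business"})),
]


def find_features(term: str, include_pii: bool = False) -> list:
    """Look up columns by business term or description, hiding PII columns by default."""
    term = term.lower()
    return [
        f"{m.table}.{m.column}"
        for m in CATALOG
        if (term in m.business_terms or term in m.description.lower())
        and (include_pii or not m.contains_pii)
    ]
```

An engineer asking for "claim severity" gets `clm_hdr_v2.loss_amt` without ever opening the table, and PII columns stay hidden unless explicitly requested.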

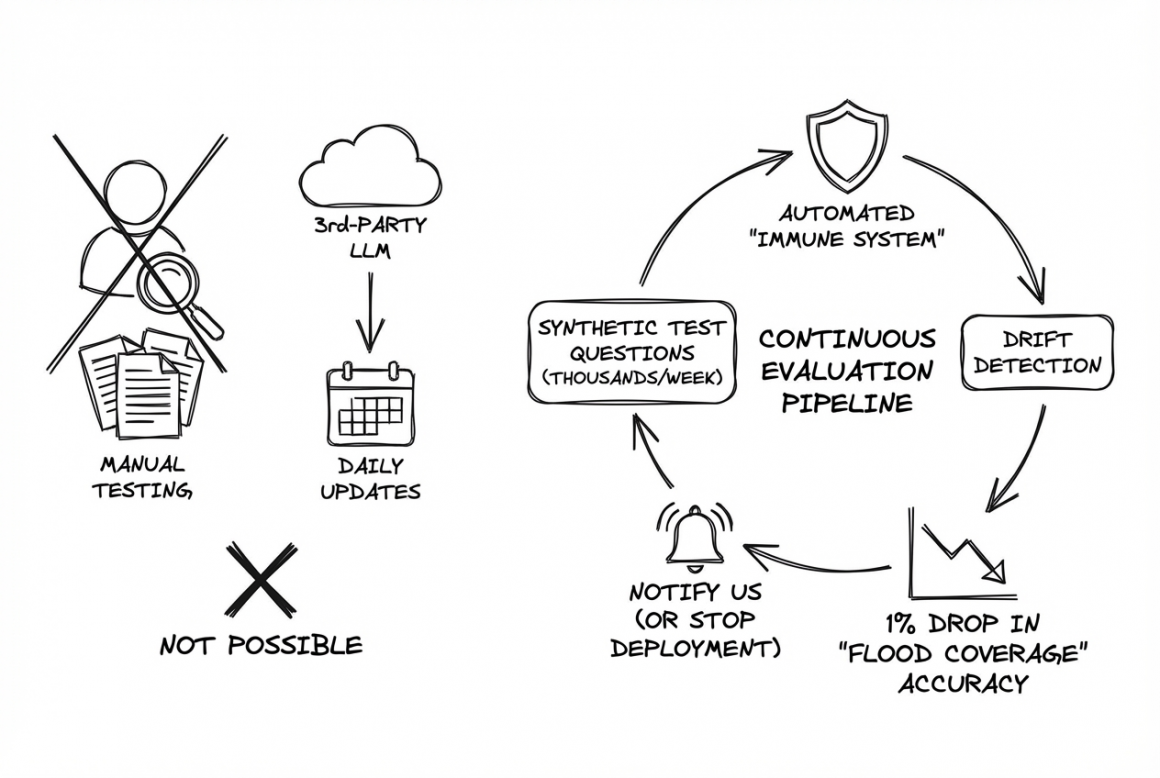

4. LLMOps 2.0: The Immune System

We cannot manually test third-party LLMs that are updated frequently. We need an automated “immune system”: a continuous evaluation pipeline. Every week, this system can run thousands of synthetic test questions. If an LLM update causes a 1% drop in accuracy for “Flood Coverage,” the system detects the regression and notifies us.
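A minimal sketch of the regression check at the heart of such a pipeline, assuming each synthetic test question has already been graded correct or incorrect and tagged with a topic. It reads the "1% drop" as one percentage point of absolute accuracy; that interpretation, the function names, and the default threshold are assumptions.

```python
from collections import defaultdict


def accuracy_by_topic(results):
    """results: iterable of (topic, is_correct) pairs from graded test questions."""
    totals = defaultdict(lambda: [0, 0])  # topic -> [correct, total]
    for topic, correct in results:
        totals[topic][0] += int(correct)
        totals[topic][1] += 1
    return {topic: c / n for topic, (c, n) in totals.items()}


def detect_regressions(baseline, candidate, threshold=0.01):
    """Return topics whose accuracy fell by at least `threshold` (absolute) after an update."""
    alerts = {}
    for topic, base_acc in baseline.items():
        new_acc = candidate.get(topic)
        if new_acc is not None and base_acc - new_acc >= threshold:
            alerts[topic] = round(base_acc - new_acc, 4)
    return alerts
```

With only a few hundred questions per topic, a one-point drop can be sampling noise, so a real pipeline would likely pair this threshold with a significance test before alerting anyone.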

Phase 2: The Reasoning Core – “The Scientist and The Judge”

This is the most critical architectural shift for 2026. Current LLMs are great at language but poor at logic. To build a “Cognitive Insurer,” we need a brain that can actually reason.

5. The “Scientist”: Causal AI Risk Engine

Most current Machine Learning models find correlations (e.g., “People who drive red cars have more accidents”). They don’t understand why.

In 2026, we can move toward Structural Causal Models (SCMs). This “Causal Engine” acts as a scientist, identifying cause-and-effect relationships.

- Why it matters: Regulators are cracking down on “Black Box” AI. Causal AI helps us demonstrate that a pricing decision was fair. It allows us to answer counterfactual questions such as “If this driver hadn’t braked hard, would they still have crashed?” and save the answers to our knowledge base. This moves us from statistical guessing to reproducible causal evidence, a requirement for trustworthy Agentic AI.
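The counterfactual question above can be sketched with a toy Structural Causal Model, following Pearl's three steps: abduction (recover the background conditions), action (intervene with the do-operator), and prediction (recompute the outcome). The variables and equations below are invented for illustration, and the exogenous values are simply assumed to be known, for instance from telematics; a real causal engine would have to learn and validate the structure and handle uncertainty over those values.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Exogenous:
    """Background conditions, assumed already recovered (the abduction step)."""
    tailgating: bool
    obstacle: bool
    wet_road: bool
    other_cause: bool


def simulate(u: Exogenous, do_hard_brake=None) -> dict:
    """Evaluate the toy structural equations in causal order.

    do_hard_brake, if given, replaces the hard_brake equation (the do-operator).
    """
    hard_brake = u.tailgating or u.obstacle
    if do_hard_brake is not None:
        hard_brake = do_hard_brake
    # Toy assumption: hard braking on a wet road causes a skid-and-crash.
    crash = (hard_brake and u.wet_road) or u.other_cause
    return {"hard_brake": hard_brake, "crash": crash}


def would_still_crash_without_hard_brake(u: Exogenous) -> bool:
    factual = simulate(u)
    if not (factual["hard_brake"] and factual["crash"]):
        raise ValueError("The question presumes the driver braked hard and crashed")
    # Action + prediction: same background conditions, but force hard_brake to False.
    return simulate(u, do_hard_brake=False)["crash"]
```

Because the answer is computed from explicit equations and recorded inputs, the same question always yields the same answer, which is what makes it storable and auditable.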

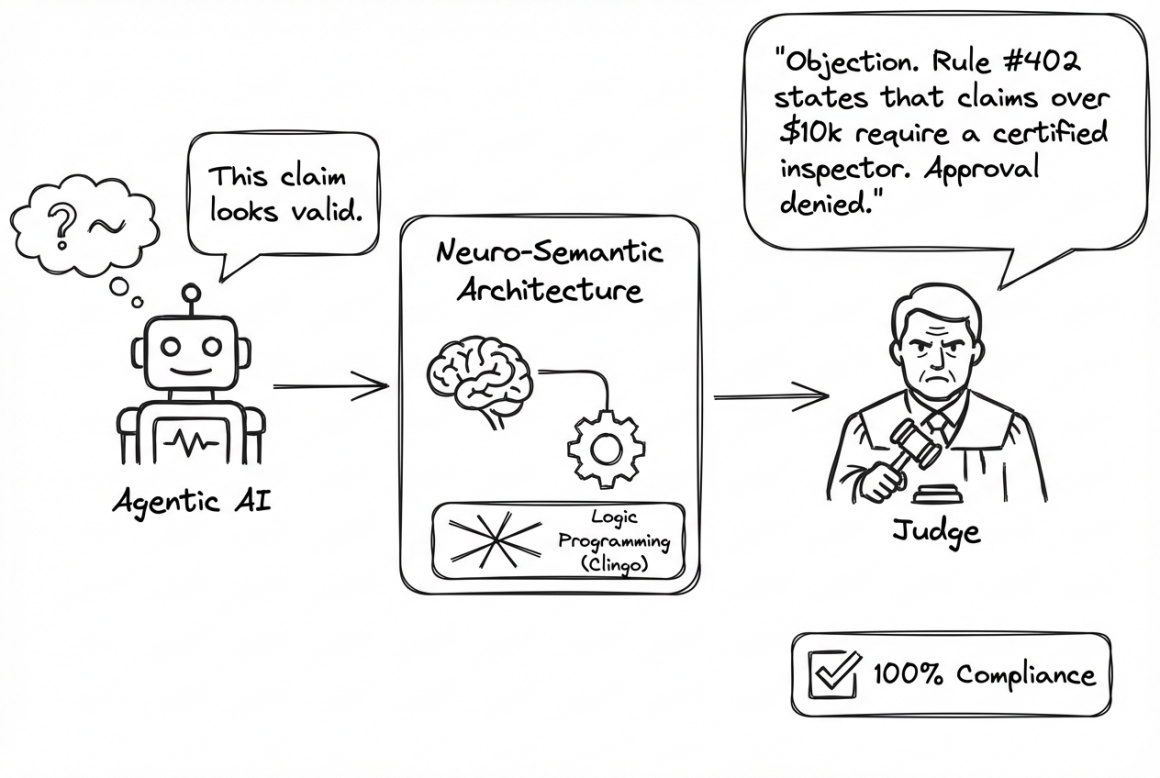

6. The “Judge”: Neuro-Semantic Knowledge Base

While the “Scientist” calculates risk, we need a “Judge” to enforce the law. We cannot allow Agentic AI to hallucinate a claim fraud referral that violates our business rules.

I propose a Neuro-Semantic Architecture. This combines the flexibility of modern AI with the strictness of logic programming (using tools like Clingo, an answer set programming solver).

- How it works: The AI might suggest, “This claim looks valid.” But the Neuro-Semantic core interjects: “Objection. Rule #402 states that claims over $10k require a certified inspector…” Because the logic engine, not the language model, has the final say, hard business rules are enforced deterministically, as long as they are encoded correctly.
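The article points to logic programming tools such as Clingo for this layer. To keep the sketch dependency-free, the version below swaps in plain Python rules instead of an answer set program; the `Claim` fields, `judge` function, and rule encoding are hypothetical. The key property is the same: the rule check is deterministic and overrides the model's suggestion.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Optional


@dataclass
class Claim:
    claim_id: str
    amount: float
    certified_inspection: bool


@dataclass
class Verdict:
    approved: bool
    violations: List[str] = field(default_factory=list)


# A rule returns a violation message, or None if the claim complies.
Rule = Callable[[Claim], Optional[str]]


def rule_402(claim: Claim) -> Optional[str]:
    # In ASP (e.g. Clingo) this might read roughly:
    #   violation(C) :- claim(C), amount(C, A), A > 10000, not certified_inspection(C).
    if claim.amount > 10_000 and not claim.certified_inspection:
        return "Rule #402: claims over $10k require a certified inspector"
    return None


RULES: List[Rule] = [rule_402]


def judge(claim: Claim, llm_suggestion: str) -> Verdict:
    """The LLM proposes; the rule layer disposes. Any violation overrides an approval."""
    violations = [msg for msg in (rule(claim) for rule in RULES) if msg is not None]
    approved = llm_suggestion == "approve" and not violations
    return Verdict(approved=approved, violations=violations)
```

A real ASP encoding adds what plain Python lacks, such as declarative rules that can be combined and checked for consistency, but the control flow (model suggests, rules veto) stays the same.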

Phase 3: The Workforce – The Rise of “Role-Based Assistants”

Once the foundation and reasoning core are set, we can deploy Agentic AI to empower our internal teams. Note that these are Assistants, not replacements—designed to handle the drudgery so humans can handle the decisions.

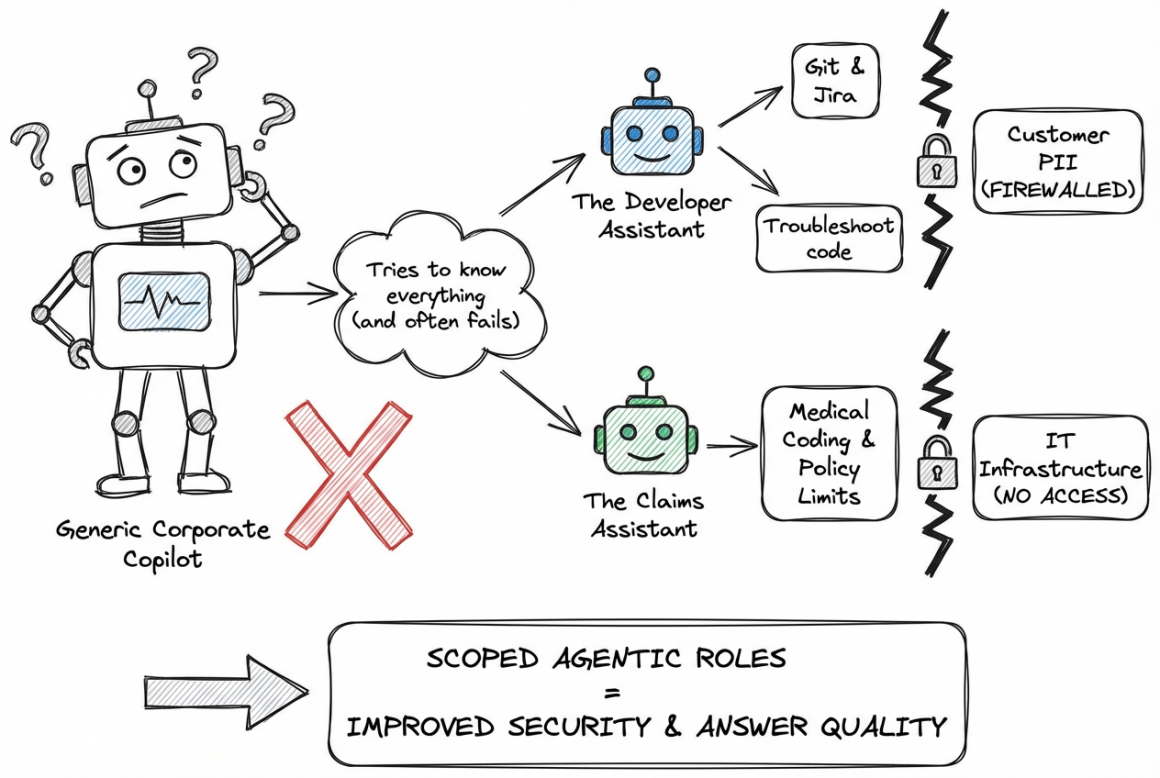

7. Role-Based Assistants

Instead of a generic “Corporate Copilot” that tries to answer everything (and often fails), we can deploy specialized Assistants.

For example:

- The Developer Assistant: Has deep access to Git and Jira. It can load the entire repository and engineering policies and troubleshoot code and infrastructure, but it is strictly firewalled from customer data, including PII.

- The Claims Assistant: Specialized in claims, with access to claims knowledge, but no access to code or IT infrastructure.

By scoping these Agentic assistants to specific roles, we improve security and answer quality simultaneously.
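One way to express this scoping is a deny-by-default permission map checked before any tool or data call. The resource names and role keys below are hypothetical, chosen to mirror the two examples above.

```python
from enum import Enum


class Resource(Enum):
    GIT = "git"
    JIRA = "jira"
    ENGINEERING_POLICIES = "engineering_policies"
    INFRASTRUCTURE = "infrastructure"
    CLAIMS_KNOWLEDGE = "claims_knowledge"
    CUSTOMER_PII = "customer_pii"


# Deny by default: a role can only touch what is explicitly listed.
ROLE_PERMISSIONS = {
    "developer_assistant": frozenset({
        Resource.GIT, Resource.JIRA, Resource.ENGINEERING_POLICIES, Resource.INFRASTRUCTURE,
    }),
    "claims_assistant": frozenset({Resource.CLAIMS_KNOWLEDGE}),
}


class AccessDenied(Exception):
    pass


def is_allowed(role: str, resource: Resource) -> bool:
    # Unknown roles get an empty permission set.
    return resource in ROLE_PERMISSIONS.get(role, frozenset())


def fetch(role: str, resource: Resource, loader):
    """Gate every data or tool access on the assistant's role before calling the loader."""
    if not is_allowed(role, resource):
        raise AccessDenied(f"{role} may not access {resource.value}")
    return loader(resource)
```

Enforcing this in the tool layer, rather than in the assistant's prompt, means a cleverly worded request cannot widen what the assistant can reach.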

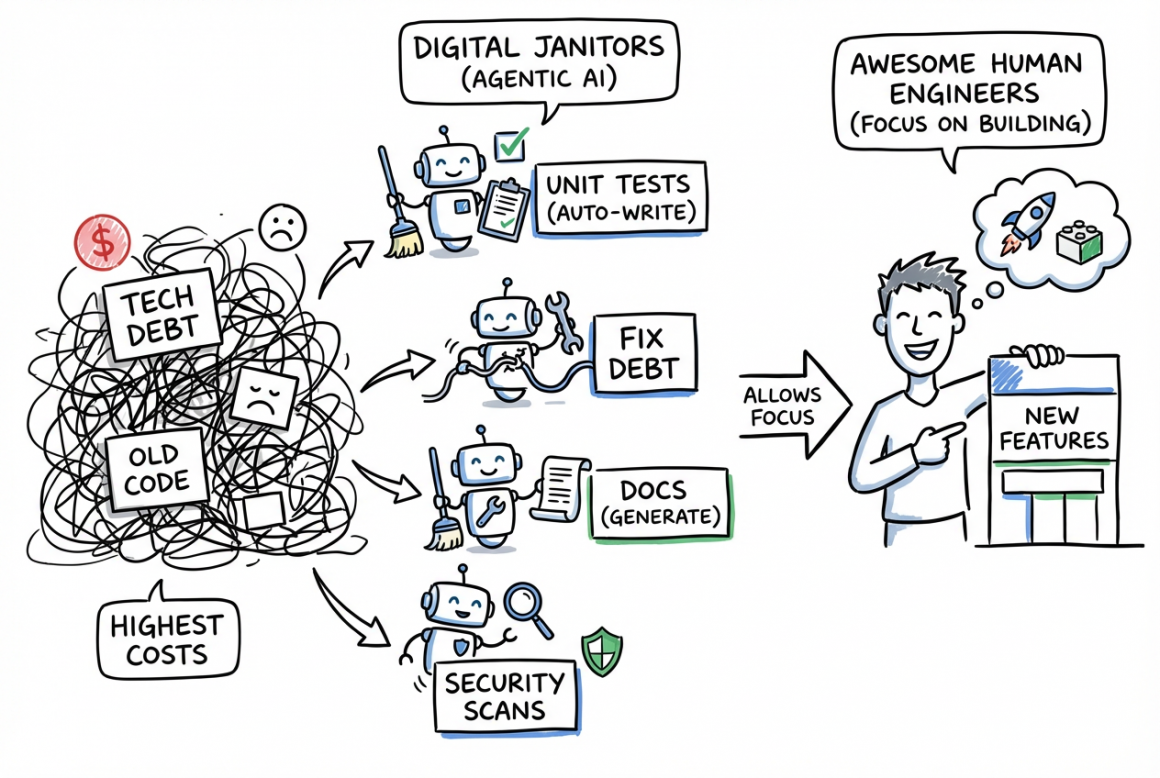

8. The Autonomous Software Factory

One of the highest costs in software delivery is usually technical debt. We can deploy Agentic AI into our software pipelines to act as “Digital Janitors.” They autonomously write unit tests, fix tech debt, generate documentation for legacy code, and perform security scans. This allows our awesome human engineers to focus on building new features rather than fixing old ones.

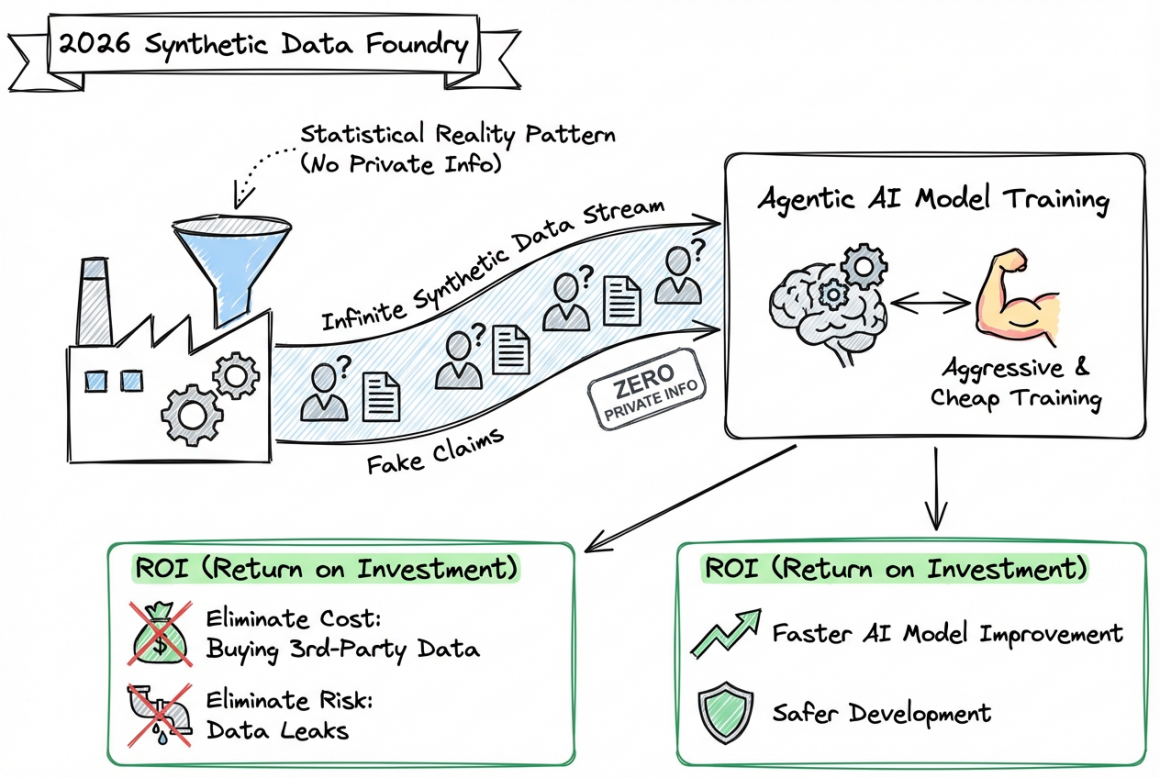

9. Synthetic Data Foundry

Data is expensive. Buying external datasets is costly, and cleaning internal data to remove PII is slow and risky.

In 2026, we can utilize a Synthetic Data Foundry. This system generates millions of synthetic records (customer profiles, claim scenarios, etc.) that preserve the key statistical properties of real data but contain no real customer information.

- The ROI: We cut the cost of buying third-party training data and reduce the risk of data leaks. We can train our models aggressively and cheaply on large volumes of synthetic data.
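A deliberately simple sketch of the idea, assuming claim amounts are roughly lognormal and cause of loss is categorical: fit per-field distributions to a real sample, then sample new records from them. All names are hypothetical. Fields are generated independently, so correlations between fields are lost, and the sketch does not by itself guarantee privacy; a production foundry would need proper fidelity and privacy evaluation.

```python
import math
import random
import statistics
from collections import Counter


def fit_profile(real_claims):
    """Fit simple per-field distributions: lognormal amounts, categorical causes."""
    log_amounts = [math.log(c["amount"]) for c in real_claims]
    causes = Counter(c["cause"] for c in real_claims)
    return {
        "mu": statistics.fmean(log_amounts),
        "sigma": statistics.stdev(log_amounts),
        "causes": list(causes),
        "weights": list(causes.values()),
    }


def generate(profile, n, seed=0):
    """Sample n synthetic claims; each field is drawn independently of the others."""
    rng = random.Random(seed)  # seeded, so a synthetic dataset is reproducible
    records = []
    for i in range(n):
        amount = rng.lognormvariate(profile["mu"], profile["sigma"])
        cause = rng.choices(profile["causes"], weights=profile["weights"])[0]
        records.append({"claim_id": f"SYN-{i:06d}", "amount": round(amount, 2), "cause": cause})
    return records
```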

Phase 4: The Experience – Invisible & Empathetic

Finally, how does this touch the customer? By this phase, AI should be “Invisible” yet “Omnipresent,” resolving issues before the customer even feels the friction.

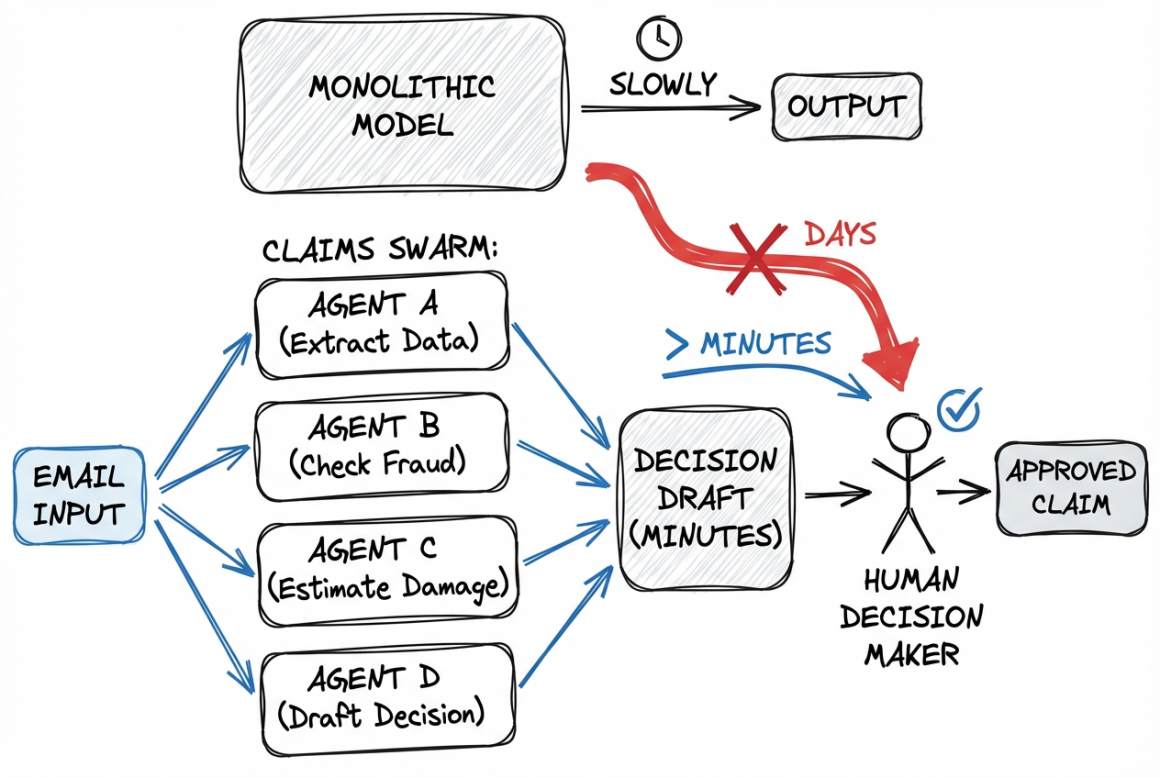

10. Multi-Agent Claims Swarm

Monolithic models that handle every step in sequence are too slow. Imagine a Claims Swarm:

- Agent A extracts data from the email.

- Agent B checks the fraud graph.

- Agent C estimates damage from photos.

- Agent D drafts the decision.

These Agentic AI units work in parallel, with Agent D drafting once the others report back, reducing claim cycle times from days to minutes. And this is just one example of the power of multi-agent applications.
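The fan-out/fan-in pattern behind such a swarm can be sketched with Python's `asyncio`: Agents A, B, and C run concurrently via `asyncio.gather`, and Agent D drafts once all three have reported. Every agent here is a hypothetical stub: sleeps stand in for model or database latency, and a set of flagged claimant IDs stands in for the fraud graph.

```python
import asyncio
from dataclasses import dataclass


@dataclass
class ClaimInput:
    claim_id: str
    email_text: str
    claimant_id: str
    photo_count: int


async def agent_a_extract(claim):
    await asyncio.sleep(0.05)  # stands in for an LLM extraction call
    lines = claim.email_text.strip().splitlines()
    return {"summary": lines[0][:60] if lines else "no summary"}


async def agent_b_fraud_check(claim, flagged_claimants):
    await asyncio.sleep(0.05)  # stands in for a fraud-graph query
    return {"fraud_flag": claim.claimant_id in flagged_claimants}


async def agent_c_damage(claim):
    await asyncio.sleep(0.05)  # stands in for a vision model
    return {"estimate": 1500.0 * claim.photo_count}  # placeholder heuristic


def agent_d_draft(claim, extracted, fraud, damage):
    """Drafts (does not finalize) a decision for a human adjuster."""
    if fraud["fraud_flag"]:
        action = "draft referral to the special investigations unit"
    else:
        action = f"draft payment of ${damage['estimate']:,.2f} pending adjuster review"
    return f"Claim {claim.claim_id} ({extracted['summary']}): {action}"


async def process_claim(claim, flagged_claimants):
    # Fan out: A, B, and C run concurrently, so latency is roughly the slowest agent, not the sum.
    extracted, fraud, damage = await asyncio.gather(
        agent_a_extract(claim),
        agent_b_fraud_check(claim, flagged_claimants),
        agent_c_damage(claim),
    )
    # Fan in: D needs all three results.
    return agent_d_draft(claim, extracted, fraud, damage)
```

Usage: `asyncio.run(process_claim(ClaimInput("CLM-1", "Rear bumper damaged", "CUST-9", 2), {"CUST-666"}))`. Agent D only drafts, keeping a human adjuster in the loop as Phase 3 intends.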

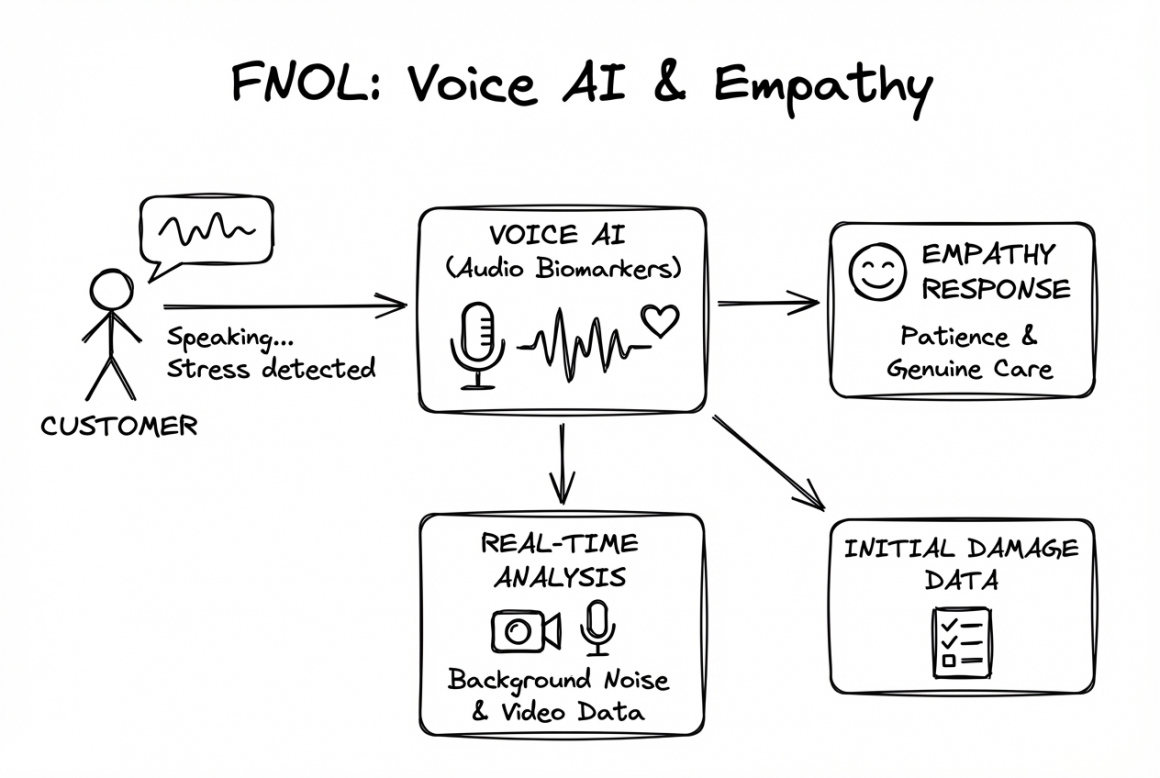

11. Multimodal & Empathetic Interfaces

For First Notice of Loss (FNOL), customers might not need to speak to a robotic menu. They could speak to a Voice AI trained on audio biomarkers. It detects signs of stress and responds with empathy and patience while gathering initial information and analyzing background noise or uploaded video footage to assess damage instantly.

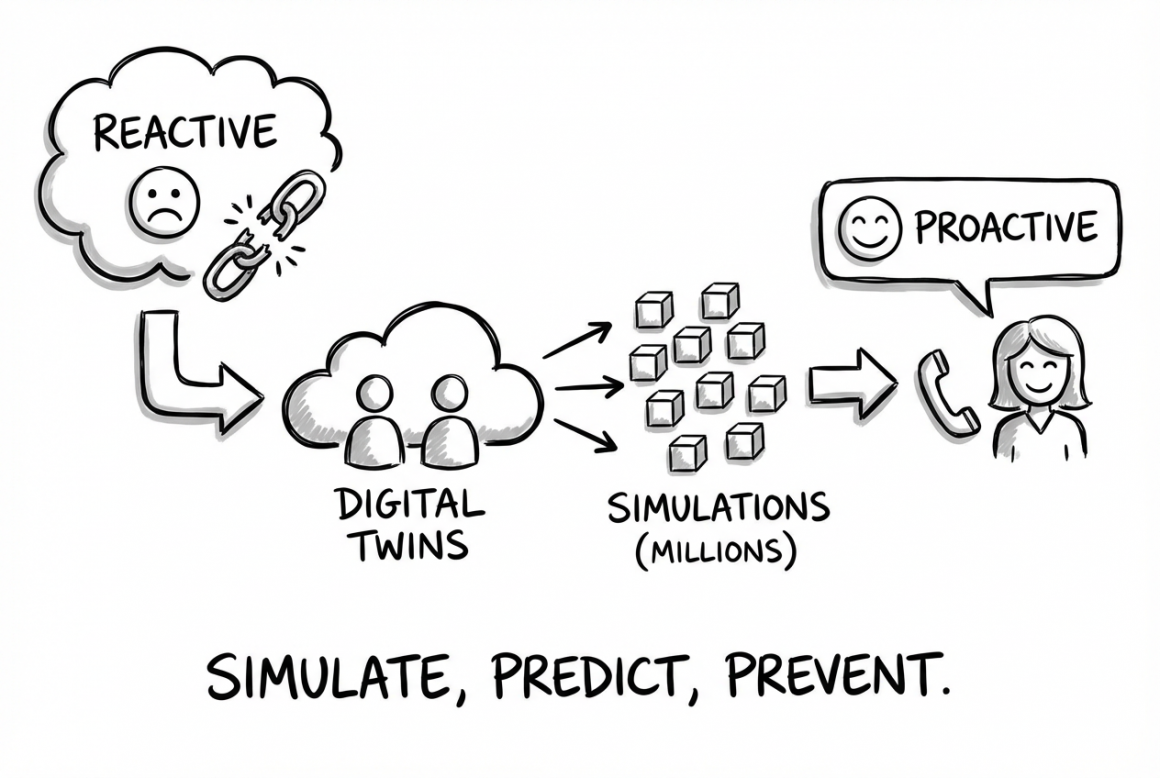

12. 360° “Digital Twin” Simulator

Instead of reacting to losses, we could simulate them. By creating “Digital Twins” of customer profiles, we can run millions of simulations to predict life events. This would allow us to contact and work with a customer before a problem occurs.
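At its simplest, running many simulations over a profile is Monte Carlo sampling of event probabilities. The sketch below assumes invented, independent, constant annual probabilities per life event (the `TwinProfile` name and event keys are hypothetical); a real digital twin would condition those probabilities on the customer's data and model how events interact.

```python
import random
from dataclasses import dataclass
from typing import Dict


@dataclass(frozen=True)
class TwinProfile:
    # Hypothetical annual probability of each life event for this customer profile.
    annual_event_prob: Dict[str, float]


def simulate_twin(profile: TwinProfile, years: int, runs: int, seed: int = 0) -> Dict[str, float]:
    """Estimate P(event occurs at least once within `years`) by Monte Carlo."""
    rng = random.Random(seed)
    hits = {event: 0 for event in profile.annual_event_prob}
    for _ in range(runs):
        for event, p in profile.annual_event_prob.items():
            # One Bernoulli draw per simulated year; stop at the first occurrence.
            if any(rng.random() < p for _ in range(years)):
                hits[event] += 1
    return {event: count / runs for event, count in hits.items()}
```

For this independent toy model the answer has a closed form, 1 - (1 - p)^years, which makes a handy sanity check; simulation earns its keep once events interact and no closed form exists.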

The Strategic Sequence

Here is how the phases of such a roadmap could look (note that the full sequence may take more than one year):

- Governance First: TrustOS and GraphRAG. If we cannot trust the AI’s data and security, we cannot scale.

- Reasoning Second: Causal & Neuro-Semantic core. We need the “Brain” to be logical before we give it hands.

- Agency Third: Only when the system is safe and logical do we unleash the Agentic AI swarms and assistants to execute tasks.

The “Chatbot Era” was about accessing information via basic RAG. The “Agentic AI Era” is about applying intelligent automation.

I hope this helps!

Frequently Asked Questions

What is the difference between GenAI and Agentic AI?

Generative AI (GenAI) focuses on creating content, such as text or images. Agentic AI takes this a step further by having the ability to “reason” and “act.” An Agentic system doesn’t just write an email; it can look up a policy, verify a claim, and send the email autonomously within a governed framework.

Why is standard RAG not enough for insurance?

Standard RAG (Retrieval-Augmented Generation) uses vector search to find semantically similar text. In insurance, context is everything: “limits” mean different things in different policies. We need GraphRAG, which understands the relationships between data points, to provide accurate answers that standard RAG often misses.

How does Causal AI improve AI governance?

Most AI models are black boxes that find correlations. Causal AI identifies cause-and-effect relationships. This is crucial for Agentic AI in insurance because it helps companies demonstrate to regulators that a pricing decision was based on fair logic, not biased correlations.

Are Role-Based Assistants secure?

Yes, when designed correctly. Unlike a generic “Copilot,” Role-Based Assistants in an Agentic AI architecture are scoped with specific permissions. A “Developer Assistant” has no access to claims data, and a “Claims Assistant” cannot access server logs, ensuring strict internal security.