Why only 13%?

Every year we hear about new exciting breakthrough innovations in Machine Learning accomplished by teams at Google or OpenAI, Amazon or Microsoft, and Academia. We realize that Machine Learning bears enormous potential. Undoubtedly, Machine Learning is a disrupting factor in the modern economy. This is why many companies are keen to use ML to enhance their products and processes. They invest billions of dollars in total, but only very few are getting the Return on Investments they envisioned initially. Various researchers defined that during the last five to seven years, the percentage of AI projects successfully implemented in production is no more than 10-15% (see, for example, VentureBeat).

This article will discuss why this could happen and how to build a successful AI strategy for your business.

This article will discuss general concepts at the business strategy level. In subsequent articles, we will discuss particular pieces of the puzzle in all their details and technicalities.

First of all, we should start with something that is (in my opinion) one of the main reasons for failure when implementing AI. Companies (led by consultants) often start from the data they have. Indeed, it is a shiny promise: “We can turn the piles of data that you have into gold.” – consultants said. “Yup… This is something I can sell my boss. Show me your deck!”. I encourage you to avoid this path. Because it (in my experience) almost always ended the same way: a bunch of PoCs, $2-3 m spent, and consultants moving to the next client with this customer added to the slide with Our Customers.

What is wrong with this approach?

Data will be essential but on its own time. Initially, we should not focus on what we have but on where we want to be in 5-10 years. This is a much more important question. Because our data is the result of our past decisions, it is not designed to fit our strategy in the AI world. It can drive value, and later, we will talk about how to make it work. But it is not something we should start with.

If not data, then what?

The main goal of many established businesses is to stay ahead of competitors. Well, that is ideal. At least remain competitive and keep their market. For startups, things are a bit more complicated – they have to get the necessary traction, win their market share from others, or create a new market/segment. The common theme in both cases is customer – we must win customers by providing the best possible product/service/experience.

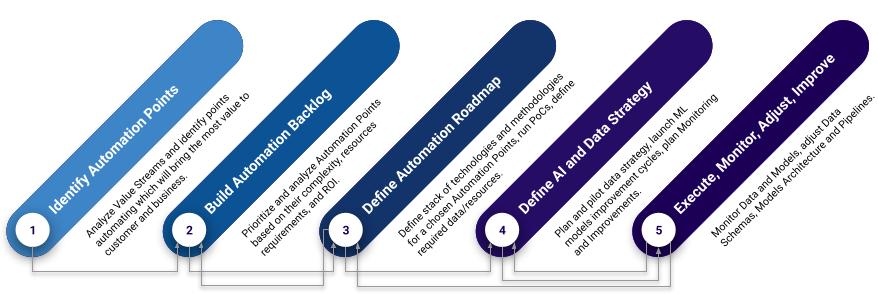

This is where we start – from analyzing Value Streams. We’ve discussed this topic in the article about Cloud Digital Strategy. And I think I will dedicate a separate article to this topic. So, for the sake of your time, I’ll not dive into details now. Here, we will focus on a helicopter view.

In general, we can group all activities into five related groups like below:

Automation Points

When we analyze Value Streams, we prioritize areas based on their contribution to our customers’ perceived value. This gives us an understanding of where we should start looking at our Automation Potential. It sounds a bit spooky, but it is a process or a group of processes we can and should automate in plain English. Here, we are talking about general automation. It’s not yet Machine Learning or anything specific.

Usually, I would recommend looking at:

- processes where we have many people making a bunch of typical decisions regularly;

- processes where people become a bottleneck due to the speed with which they perform their work;

- processes where tech can perform better (mainly because it has better sensors and/or better data processing capabilities);

- quality control processes where it is relatively easy to spot poor quality or it requires special sensors.

We could continue this list, but I think this is a good starting point.

A few important remarks:

- Although the state-of-the-art ML models successfully demonstrated abilities to perform complex tasks and even sets of tasks (generalized models), I would not recommend focusing on tasks that require above-average intelligence. In simple words, at first, it is better not to focus on automating the work of experts who are smarter than an average person. There are a few reasons for that, and all of them will make the production implementation of ML very expensive and complicated.

- Focus on customer and customer value first. I often heard, “Hey, we have a bunch of salespeople, and we want them to perform better. Because they bring customers, and customers bring money. We have tons of data in CRM and from support teams. Let’s AI there!”. It might be a good idea, but such projects could have very unpredictable outcomes. First of all, there are often far better candidates for automation. We will also be dealing with a learning curve. This means customers will see us unprepared, testing and fixing issues.

- This brings us to the third suggestion: start from support and production or, in general, from the process that is not client-facing or (sometimes even better) facing only loyal customers. Loyal customers would appreciate your effort to improve their experience and even help you test new tech.

Automation Backlog

When you’ve identified automation potential, you can prioritize all Points based on a few criteria:

- Value for customer and business,

- Implementation methodologies and tech stacks,

- Return on Investment (ideally, but not always feasible).

How you define value for customers and business depends on a particular Business Model. And you probably know it better. So, I will talk more about the second point—implementation methodologies and tech stacks.

Depending on a few factors, we can approach automation differently. We could use Machine Learning, Knowledge Representation, Reasoning, Robotic Process Automation, or other tools.

The decision is primarily driven by the number of variations of the required outcome – and if it is limited. If yes, and the space of possible variations is relatively small, we can use RPA or hardcode logic.

Another factor to consider is how much we want to control how programs think. In Machine Learning, the model defines how to update weights and focus its attention to produce the best results. We can continuously regulate the thinking process by creating a Decision Graph with models in edges and introducing feature weights and filters. Still, the model will have wider flexibility than RPA, for example. In ASP, for example, we create a knowledge base that consists of facts and their relationships, which the program will use to structure its thinking process and derive a conclusion. However, it is more limited because the program will not derive something it hasn’t seen before (in most cases).

This is a time for Proof of Concept, which will allow us to solve problems with specific approaches and stacks and see if they are possible. It will also validate the assumptions we made during the prioritization above.

But what is most essential—and what PoCs will help us define—is the data we need and what we mean by Good-Quality Data.

For most organizations, Big Data is not the way to go. They will not have petabytes of data about their users and their behavior, so good data should be their approach. I will write a separate article on the topic of Good Data.

Please read Part 2 here.