Docker

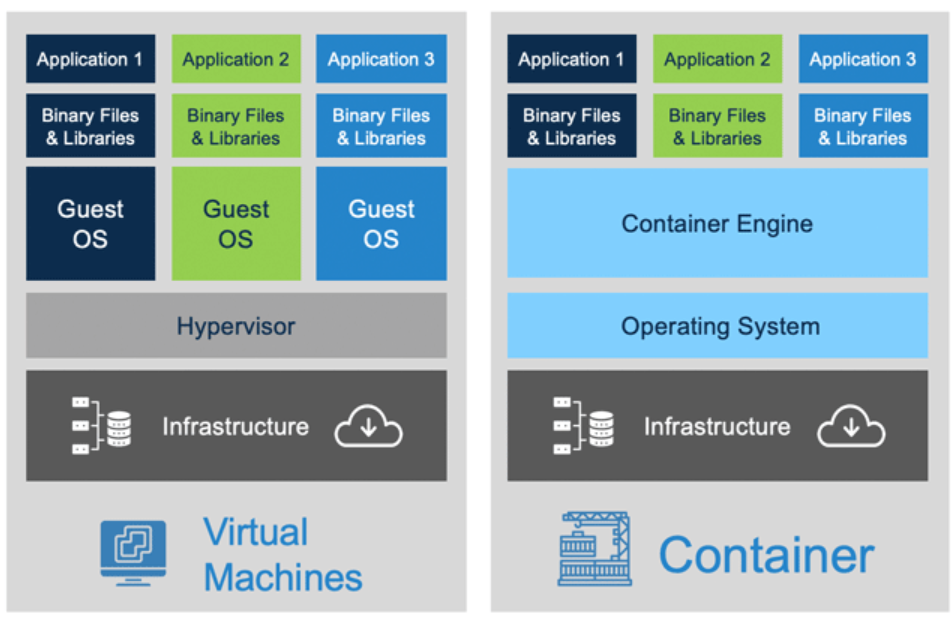

Docker is a Platform as a Service product that enables developers to package their applications into “Containers” and make them portable and deployable to almost any infrastructure. It reduces the burden of infrastructure setup and configuration and dramatically simplifies porting and deployment of the application.

The principle is relatively simple to grasp:

- Docker is installed on a virtual or bare-metal machine (the Host);

- The Docker engine creates a virtualized environment that runs your Containers;

- The Docker engine provides everything a Container needs to act as a standalone virtual environment;

- Each Container holds all the software and configuration needed to run your workflow.

Of course, the description above is a very simplified representation of how Docker works, but it should help you understand it. The schema below demonstrates how virtualization happens:

There are many advantages to such an approach. In particular:

- Cost – we can increase the density of our compute environment and use it more efficiently;

- Simplicity – our applications become easily portable and deployable;

- Reliability – consistency of environment increases stability of our application;

- Security – Docker isolates each Container’s files and processes from other Containers and from the rest of the Host, which limits what a compromised application can reach.

Let’s now talk about Docker objects and how we can use them.

Docker objects

The architecture of Docker consists of three pieces:

- Docker Client,

- Docker Host,

- Docker Registry.

In simple words, the Client is what you use to interact with Docker. Most often it is the Command Line Interface (CLI), whose commands we will be talking about in this article. You can also use a Graphical User Interface (GUI), for example Docker Desktop.

The Docker Registry is where we store our Container Images; we will talk about them in a minute. The Docker Host contains our Containers, Images, and the so-called Docker Daemon, which handles routing, communication, and organizational tasks. At this point, you don’t have to dive too deep into how the Docker Daemon works.

What is essential is that you understand Docker Objects and how to use and configure them:

Images

Images are blueprints that Docker uses to build your Containers. If you are familiar with the Object-Oriented Programming paradigm, Images are similar to classes. Docker reads a special file (a Dockerfile) with instructions on how the Container should be built and configured, and creates a Docker Image from it.

You can store Images either on your machine or in a Registry, from which you can pull them and build Containers.
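As a minimal sketch of working with a Registry, the commands below pull an Image to the local machine, list local Images, and remove the local copy again. The Image name and tag (node:20-alpine) are only an assumption for illustration; any Image from Docker Hub would work the same way.

```shell
# Image name and tag used in this sketch (assumption: an official node Image)
IMAGE="node:20-alpine"

# Download the Image from the default Registry (Docker Hub) to this machine
docker pull "$IMAGE"

# List the Images stored locally; the pulled Image should appear in the list
docker image ls

# Remove the local copy when it is no longer needed
docker image rm "$IMAGE"
```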

Containers

You can instruct Docker to instantiate Containers based on a specific Image. You provide the necessary configuration in the CLI command, and Docker does everything else for you.
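A short sketch of the Container life cycle could look like the following. The Image (nginx:alpine) and the Container name (demo-web) are placeholders chosen for this example, not something the article prescribes.

```shell
# Placeholders for this sketch
IMAGE="nginx:alpine"
CONTAINER_NAME="demo-web"

# Create and start a Container from the Image, in the background (-d)
docker run -d --name "$CONTAINER_NAME" "$IMAGE"

# List running Containers; add --all to include stopped ones
docker container ls

# Stop the Container and then delete it
docker container stop "$CONTAINER_NAME"
docker container rm "$CONTAINER_NAME"
```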

Volumes

The Container creates an isolated environment for your application, which is good but has a drawback. When a Container is destroyed, all files inside it are destroyed too. Often we want to keep some files so another Container can use them. Or we want a few Containers to use the same files and save them in one place, for example, when we parallelize some computations or image processing.

In such a case, we can instruct Docker to mount Volumes to the Container. Volumes can be Unnamed, Named, or Bind Mounts. Unnamed (anonymous) Volumes are primarily used to protect a certain folder inside the Container, so it is not overwritten when we mount a Bind Mount over its parent folder. I’ll show you the CLI commands, but we will not focus on these too much. Named Volumes are separate objects that are created during Container launch but survive Container shutdown and deletion. So we can use them to save some files and transfer them from one Container to another.

Bind Mounts are even more interesting because they allow us to synchronize a certain folder on our computer (or on a Host in the cloud) with a certain folder in the Container. If we update files in the folder on the Host, they are updated in the folder inside the Container. You can also give the Container read-only access to that folder.
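The three kinds of Volumes map onto the -v flag of docker run roughly as sketched below. The Image name (my-node-app), the Container names, and the paths /app, /app/feedback and /app/node_modules are assumptions made for this example.

```shell
# Hypothetical Image built from your own Dockerfile
IMAGE="my-node-app"

# Named Volume: "feedback" outlives the Container and can be reused by others
docker run -d --name app-named -v feedback:/app/feedback "$IMAGE"

# Bind Mount of the current folder, plus an anonymous Volume that keeps
# /app/node_modules from being hidden by the Bind Mount
docker run -d --name app-dev -v "$(pwd)":/app -v /app/node_modules "$IMAGE"

# Read-only Bind Mount: the Container can read the folder but not change it
docker run -d --name app-ro -v "$(pwd)":/app:ro "$IMAGE"

# List all Volumes Docker currently manages
docker volume ls
```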

Network

When you launch a Container, you specify a port mapping, so the port your Container is listening on is mapped to a specific port on the Host. This way, we enable the Container to receive requests from the outside world. An application running inside the Container can send requests to the outside world just as it would if it were deployed to a regular VM.
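Port mapping is set with the -p flag in the form host-port:container-port. In the sketch below, the port numbers, the Container name, and the Image name (my-node-app) are assumptions for illustration.

```shell
# Port on the Host and the port the application listens on inside the Container
HOST_PORT=3000
CONTAINER_PORT=80

# Requests to localhost:3000 on the Host are forwarded to port 80 in the Container
docker run -d --name app-web -p "$HOST_PORT:$CONTAINER_PORT" my-node-app

# Check from the Host that the application answers
curl "http://localhost:$HOST_PORT"
```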

When you want multiple Containers to be able to reach each other and communicate, there are various ways to achieve this. As a quick and dirty solution, you could look up the internal IP of the required Container and send requests to it. But this, in general, is not a recommended way of connecting Containers. Instead, you can create a Network and attach Containers to it. When Containers share the same Network, it becomes easy for them to talk to each other. We will take a closer look at it below.
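A minimal sketch of the Network approach is shown here. The Network name (app-net), the Container names, and the Image my-node-app are placeholders; on a user-defined Network, Containers can reach each other by Container name.

```shell
# Name of the Network used in this sketch
NETWORK="app-net"

# Create the Network
docker network create "$NETWORK"

# Attach a database Container and an application Container to it
docker run -d --name mongodb --network "$NETWORK" mongo
docker run -d --name backend --network "$NETWORK" -p 3000:80 my-node-app

# Inside "backend" the database is now reachable by its Container name,
# for example with a connection string like mongodb://mongodb:27017

# Show the Network and the Containers attached to it
docker network inspect "$NETWORK"
```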

Secrets

Sometimes certain pieces of information are not hardcoded inside your application but supplied as variables when your app is launched. For example, this could be credentials for a database or the port your application will listen on. For obvious reasons, you might not be willing to hardcode them, and you might not even know them in advance because there may be a few possible options.

In such cases, you will use Arguments and Environment Variables. Arguments are values that you pass to the Docker engine when you build a Docker Image. Environment Variables are given when a Container is launched, and your application can read them as regular environment variables.
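On the command line, Arguments are passed with --build-arg and Environment Variables with -e or --env-file. The sketch below assumes a Dockerfile that declares ARG DEFAULT_PORT and an application that reads a PORT variable; both names, the Image name, and the .env file are hypothetical.

```shell
# Values used in this sketch
BUILD_PORT=80
RUNTIME_PORT=8000

# Build-time Argument (assumes the Dockerfile contains: ARG DEFAULT_PORT)
docker build --build-arg DEFAULT_PORT="$BUILD_PORT" -t my-node-app .

# Environment Variable set when the Container is launched
docker run -d --name app-env -e PORT="$RUNTIME_PORT" -p 8000:"$RUNTIME_PORT" my-node-app

# Alternatively, read several variables from a file with KEY=value lines
docker run -d --name app-envfile --env-file ./.env -p 8001:"$RUNTIME_PORT" my-node-app
```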

Dockerfile

A Dockerfile is a document containing instructions for the Docker Engine on how to create a Docker Image and, in effect, what a future Container will be.

It has a layered structure: each instruction in the file produces a separate layer of the Image. When a layer changes, Docker re-runs the instructions for all subsequent layers (even if those instructions themselves are unchanged). But it reuses the cached results for the layers above the change, as long as they stay the same.
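One way to observe the layers and the cache is sketched below. It assumes the current folder contains a Dockerfile and uses a hypothetical Image name (my-node-app).

```shell
# Hypothetical Image name for this sketch
IMAGE="my-node-app"

# First build: every instruction is executed
docker build -t "$IMAGE" .

# Second build with no file changes: the build output marks the steps as cached
docker build -t "$IMAGE" .

# Show the layers of the Image and the instruction that created each one
docker image history "$IMAGE"

# Ignore the cache and rebuild every layer from scratch
docker build --no-cache -t "$IMAGE" .
```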

It starts from the base declaration, which tells Docker what would be the base Image for this Container.

#could be node

FROM <base-image-name>

It could be “node” or “mongo” or some other Image from the hundreds of Images in Docker Hub or another registry.

Then we tell Docker the name of the working directory in our Container. All other instructions will apply to this folder primarily.

#could be /app

WORKDIR <folder-name>

The next helpful instruction is COPY. It copies files from the Host to the Container’s working directory.

#for example . .

COPY <from> <to>

There are a few other handy instructions.

VOLUME ["<unnamed-volume-folder-inside-container>"]

#runs while the Image is being built, like "npm install" to install node dependencies

RUN <command-during-build-process>

#runs when the Container starts, for example ["node", "server.js"] to launch a node server

CMD <command-after-launch-process-is-finished>

Let’s review an example of a relatively simple Dockerfile below. My comments are on the lines starting with #.

#use node as a base image

#this will include a Linux base system and everything necessary to launch a node server

FROM node

#/app is going to be our working directory

WORKDIR /app

#First of all, we copy only package.json (and package-lock.json) to our working directory,

#because it lists all the packages that we have to install to run our application,

#and then we install the dependencies.

#If we copied it together with the rest of the files, a change to any file

#would make Docker re-install all dependencies on the next build (which takes time and memory).

#Docker caches every layer, so by copying package.json first

#the dependencies are re-installed only when package.json changes,

#not when the other files change.

COPY package*.json ./

#install dependencies

RUN npm install

#copy the rest of the files to working directory

COPY . .

#this does not publish the port; it is information for the reader and a best practice

#which port to listen on mostly depends on the application

EXPOSE 80

#we can create unnamed volume

#VOLUME [ "/app/feedback" ]

#and we launch our node server

CMD ["node", "server.js"]

When you use a version control system, or have files containing secrets (more on this later), you might not want Docker to copy all the files to the working directory. In such a case, you can create a .dockerignore file and list all the files and directories that you want Docker to skip.

Below is an example of how .dockerignore could look in our basic node js app.

node_modules

Dockerfile

.git

Docker Image

To use Docker, you need to install Docker on your desktop. Please follow the instructions from the official Docker installation page. When you finish the installation process, please make sure you launch Docker on your computer (you should see its icon in your system tray).

When you’ve installed Docker, you can open your terminal and start playing with Docker CLI. We will not cover Docker desktop GUI in this guide, but you definitely should check it out.

Most Docker CLI commands start with “docker”, which tells your computer which program should handle the task. Then you type the name of the object (“image”, “container”, “network”, etc.) and what you want to do with it.
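The "docker, object, action" pattern can be seen by running the same action (ls) against several objects. The loop below is only a compact way to show this; typing each command by hand works just as well.

```shell
# Object types that all support the "ls" action
OBJECTS="image container volume network"

# Runs: docker image ls, docker container ls, docker volume ls, docker network ls
for object in $OBJECTS; do
  echo "== docker $object ls =="
  docker "$object" ls
done
```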

Docker has excellent documentation on its website, but you can also get info about a specific command or object right in your terminal: add --help to your command. It is often helpful to look up the commands available for a specific object, for example:

docker image --help

#or

docker container --help

Try it yourself for volumes and networks.

Ok, good! Now we can start packaging and deploying our simple node js app. Please download the file below.

Unzip this file to the folder you will be working with. I assume you already have your favorite IDE. If yes, open your IDE and the folder with the application. If not, I would recommend Visual Studio Code. You can download and install it from the official VS Code download page. It is free, has great UX, and offers many valuable tools. I have used it for a long time.

When you open your app folder in IDE, it is time to launch the terminal. VS Code and other IDEs have their own terminals. Alternatively, you can open your system terminal. There are official guidelines on opening a terminal on Mac and Windows.

So now, as we are all set, we can look at our Dockerfile.

#pulling node js base image

FROM node:12-alpine

# Adding build tools to make yarn install work on Apple silicon / arm64 machines

RUN apk add --no-cache python2 g++ make

#/app is going to be our working directory

WORKDIR /app

#copy all files from the folder where our Dockerfile is located to the working dir

COPY . .

#install dependencies

RUN yarn install --production

#launch our server

CMD ["node", "src/index.js"]

As you can see, it is pretty simple. Yet it has a basic set of instructions to package and deploy our app. Now you can build your Docker Image using a command like the one below:

#usually the Dockerfile is placed in the same folder as the app, so the path is just '.'

docker build -t <name-for-your-image:label-for-this-version> <path-to-folder-with-Dockerfile>

You specify the name you want to give to your Image and, optionally, a tag. If you do not specify a tag, Docker adds “latest” and saves the Image as <image-name>:latest.
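With concrete values filled in, the build command might look like this. The Image name (my-node-app) and tag (1.0) are made up for the example, and the commands assume they are run from the folder that contains the Dockerfile.

```shell
# Hypothetical name and tag for the Image
IMAGE_NAME="my-node-app"
IMAGE_TAG="1.0"

# Build with an explicit tag; '.' is the folder that contains the Dockerfile
docker build -t "$IMAGE_NAME:$IMAGE_TAG" .

# Build without a tag; Docker saves the Image as my-node-app:latest
docker build -t "$IMAGE_NAME" .
```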

After Docker has finished building the Image, you can check your Image now exists in the Image repository on your machine:

docker image ls

This command lists all the Docker Images you currently have on your system. You should see your Image in the list.
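When the list gets long, it can help to narrow it down. The sketch below assumes the Image was built under the hypothetical name my-node-app.

```shell
# Hypothetical Image name used when building
IMAGE_NAME="my-node-app"

# Show only the Images with this name
docker image ls "$IMAGE_NAME"

# Print a compact list of all Images: name, tag, and size
docker image ls --format '{{.Repository}}:{{.Tag}}  {{.Size}}'

# Show detailed metadata of one Image as JSON
docker image inspect "$IMAGE_NAME:latest"
```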

Read the following article of the series here – Docker Cheatsheet: Guideline That Will Make You Comfortable With Docker. Part 2.