Key Takeaways

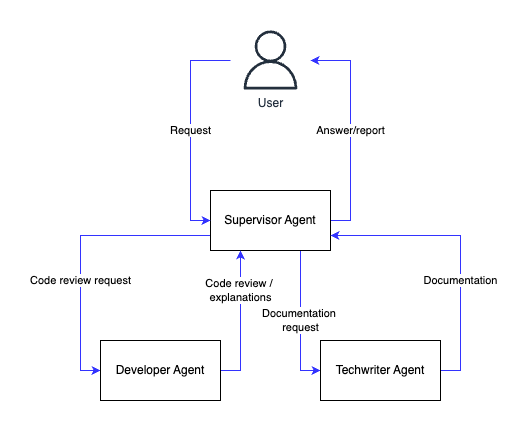

- Supervisor-Led Automation: A central “Supervisor Agent” receives user requests and delegates tasks to a team of specialized AI agents, streamlining the automated code review workflow.

- Specialized Agent Roles: The architecture features a “Developer Agent” for code analysis and a “Technical Writer Agent” for creating documentation, ensuring each task is handled by an expert with the right tools.

- Tool-Based Resource Access: Agents are given specific tools (e.g., `read_python_files`, `read_policy_files`) to access necessary resources, following a principle of least privilege where agents only see the data they need.

- LangGraph for Orchestration: LangGraph is used to define and manage the entire workflow, including directing tasks from the supervisor to the agents and handing control back upon completion.

- Model Choice is Crucial: The article demonstrates that the choice of LLM and the quality of the prompt significantly impact agent performance, with a direct comparison showing different outcomes between Claude 3.5 Sonnet and GPT-4o for the supervisor role.

This article provides a practical guide to AI code review with Agents. We will build a supervisor-led system using LangGraph that automates routine developer tasks like code reviews and documentation, freeing up valuable time for innovation.

Automated AI Code Review with Agents

As someone who has done many code reviews, I know they start off as interesting and educational, but can quickly become repetitive.

The same goes for keeping documentation up to date when we add or change features and models. It’s necessary work, but it can get a bit tedious.

Today, we’re changing that by building an Agent to automate these tasks, making the whole process easier and leaving more room for interesting work. Let’s see how we can improve our workflow with this automation.

Approach to AI Code Review

In this and the following articles, we’ll explore a few different ways to use AI Agents:

- Using an Agent with tools,

- Orchestrating specialized Agents with a supervisor Agent,

- Interviewing a team of expert Agents.

In this article, we’ll focus on the second method, where our Agents are coordinated by an Agent Manager.

Here’s how it works: A user sends a request to the Supervisor Agent, who then determines which specialized Agents are best suited to handle the task and how they should approach it.

We will have two Agents in our team:

- Developer Agent: performs code reviews and explains the code,

- Technical writer Agent: writes documentation based on the information provided by the Developer Agent.
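To make the control flow concrete before any LLM is involved, here is a toy, dependency-free sketch of the supervisor pattern. The agent functions, the `TEAM` mapping, and the scripted `plan` are hypothetical stand-ins: in the real system, the supervisor LLM chooses each next agent and the agents call tools.

```python
from typing import Callable


# Hypothetical stand-ins for the two specialized Agents. Each receives the
# shared history (ignored here) and returns the message it contributes.
def toy_developer(history: list[str]) -> str:
    return "developer_expert: code review and explanation"


def toy_tech_writer(history: list[str]) -> str:
    return "tech_writer_expert: documentation based on the explanation"


TEAM: dict[str, Callable[[list[str]], str]] = {
    "developer_expert": toy_developer,
    "tech_writer_expert": toy_tech_writer,
}


def run_supervisor(request: str, plan: list[str]) -> list[str]:
    """Route a request through the team, returning control to the supervisor
    after every agent. `plan` replaces the routing decisions an LLM would make."""
    history = [f"user: {request}"]
    for agent_name in plan:
        # The supervisor hands off to the chosen agent...
        history.append(f"supervisor: transfer_to_{agent_name}")
        history.append(TEAM[agent_name](history))
        # ...and control always comes back to the supervisor afterwards.
        history.append(f"{agent_name}: transfer_back_to_supervisor")
    history.append("supervisor: final answer for the user")
    return history


trace = run_supervisor("Review and document the code", ["developer_expert", "tech_writer_expert"])
```

The key design property is the star topology: agents never talk to each other directly, so the supervisor always sees every intermediate result before deciding what happens next.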

In our next article, we’ll delve into a more collaborative setup involving Agents, Analysts, and Experts working together on tasks. The team of Agents can change each time, adapting specializations to fit the task, which can be even more engaging!

While I usually rely on ChatGPT for my articles, this time I’m using Anthropic’s Claude 3.5 Sonnet to add a fresh twist to our exploration.

Required Prerequisites

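As I mentioned before, I want to use Claude 3.5 Sonnet from Amazon Bedrock. The easiest way to start is to run a SageMaker notebook instance.

To use Amazon Bedrock’s LLMs from a SageMaker notebook, you need the following in place:

- AWS Account: an account in a region where Bedrock and the model are available (this article uses us-east-1).

- IAM Role: an execution role for the notebook with permission to invoke Bedrock models (for example, `bedrock:InvokeModel`).

- SageMaker Notebook: a notebook instance that uses this role.

- Network Setup: network access from the notebook to the Bedrock endpoint (internet access or a VPC endpoint).

- Install AWS SDK: `boto3`, plus the LangChain packages installed below.

- Configure Environment: the AWS region configured for the SDK.

- Bedrock Access: model access to Anthropic Claude 3.5 Sonnet enabled in the Bedrock console.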

For detailed guidance, refer to the corresponding Bedrock documentation section.
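Once these pieces are in place, a quick smoke test can confirm that the notebook is actually allowed to call the model. The helper below is not part of the article's code; it is a sketch that assumes the Bedrock Runtime Converse API and takes the boto3 client as an argument, and the default inference profile ID is an example you may need to change for your account and region.

```python
from typing import Any


def bedrock_smoke_test(
    runtime_client: Any,
    model_id: str = "us.anthropic.claude-3-5-sonnet-20241022-v2:0",
) -> str:
    """Send a tiny prompt through the Converse API and return the reply text.

    `runtime_client` is expected to be a boto3 "bedrock-runtime" client. It is
    passed in (instead of created here) so the helper can be exercised
    without AWS credentials.
    """
    response = runtime_client.converse(
        modelId=model_id,
        messages=[{"role": "user", "content": [{"text": "Reply with the single word OK."}]}],
        inferenceConfig={"maxTokens": 10, "temperature": 0.0},
    )
    # The reply text sits in the first content block of the output message.
    return response["output"]["message"]["content"][0]["text"]
```

In the notebook you would call it as `bedrock_smoke_test(boto3.client("bedrock-runtime", region_name="us-east-1"))`. An `AccessDeniedException` at this point typically means that model access or the IAM permissions are still missing.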

AI Code Review Implementation

Now, we are all set to start the development.

Installing dependencies:

```
!pip install -qU langchain_aws langchain langchain-community langgraph langsmith
```

The following code implements the handoff mechanism that lets agent nodes pass control to each other in a multi-agent graph.

```python
import re
import uuid

from typing_extensions import Annotated

from langchain_core.messages import AIMessage, ToolMessage, ToolCall
from langchain_core.tools import tool, BaseTool, InjectedToolCallId
from langgraph.types import Command

# Matches any run of whitespace characters
WHITESPACE_RE = re.compile(r"\s+")


def _normalize_agent_name(agent_name: str) -> str:
    """Normalize an agent name to be used inside the tool name."""
    return WHITESPACE_RE.sub("_", agent_name.strip()).lower()


def create_handoff_tool(*, agent_name: str) -> BaseTool:
    """Create a tool that can handoff control to the requested agent.

    Args:
        agent_name: The name of the agent to handoff control to, i.e.
            the name of the agent node in the multi-agent graph.
            Agent names should be simple, clear and unique, preferably in snake_case,
            although you are only limited to the names accepted by LangGraph
            nodes as well as the tool names accepted by LLM providers
            (the tool name will look like this: `transfer_to_<agent_name>`).
    """
    tool_name = f"transfer_to_{_normalize_agent_name(agent_name)}"

    @tool(tool_name)
    def handoff_to_agent(
        # Injected at runtime by LangGraph; not part of the schema the LLM sees
        tool_call_id: Annotated[str, InjectedToolCallId],
    ):
        """Ask another agent for help."""
        tool_message = ToolMessage(
            content=f"Successfully transferred to {agent_name}",
            name=tool_name,
            tool_call_id=tool_call_id,
        )
        # Navigate to the target agent node in the parent (multi-agent) graph
        return Command(
            goto=agent_name,
            graph=Command.PARENT,
            update={"messages": [tool_message]},
        )

    return handoff_to_agent


def create_handoff_back_messages(
    agent_name: str, supervisor_name: str
) -> tuple[AIMessage, ToolMessage]:
    """Create a pair of (AIMessage, ToolMessage) to add to the message history when returning control to the supervisor."""
    # One shared ID links the tool call to its result
    tool_call_id = str(uuid.uuid4())
    tool_name = f"transfer_back_to_{_normalize_agent_name(supervisor_name)}"
    tool_calls = [ToolCall(name=tool_name, args={}, id=tool_call_id)]
    return (
        AIMessage(
            content=f"Transferring back to {supervisor_name}",
            tool_calls=tool_calls,
            name=agent_name,
        ),
        ToolMessage(
            content=f"Successfully transferred back to {supervisor_name}",
            name=tool_name,
            tool_call_id=tool_call_id,
        ),
    )
```

- Function `create_handoff_tool`:
  - Generates a tool that transfers control to a specified agent. The tool's name is formed by prefixing `transfer_to_` to the normalized agent name, and the `@tool(tool_name)` decorator registers the inner function as a tool.
  - The inner function `handoff_to_agent` performs the transfer: it creates a `ToolMessage` confirming the handoff and returns a `Command` with navigation directives. `goto=agent_name` selects the target agent node, and `graph=Command.PARENT` applies that navigation in the parent (multi-agent) graph, because the tool itself runs inside the supervisor's own agent graph. The `tool_call_id` argument is annotated with `InjectedToolCallId`, so it is filled in at runtime rather than by the LLM.

- Function `create_handoff_back_messages`:
  - Creates a pair of messages (`AIMessage` and `ToolMessage`) that record the return of control to the supervisor once an agent has finished its task.
  - Generates a unique `tool_call_id` with `uuid` and uses it in both messages, so the tool call and its result are linked and every handoff can be traced in the message history.
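The pairing in `create_handoff_back_messages` matters because chat model APIs generally expect every tool call in an AI message to be followed by a tool message carrying the same ID. The dependency-free sketch below mirrors the naming and ID logic with plain dictionaries instead of LangChain message classes; the helper names and the dict layout are illustrative assumptions, not LangChain's internal format.

```python
import re
import uuid

WHITESPACE_RE = re.compile(r"\s+")


def normalize_agent_name(agent_name: str) -> str:
    # Same rule as _normalize_agent_name: trim, collapse whitespace runs to "_", lowercase.
    return WHITESPACE_RE.sub("_", agent_name.strip()).lower()


def handoff_tool_name(agent_name: str) -> str:
    # Name the supervisor's LLM sees for the handoff tool.
    return f"transfer_to_{normalize_agent_name(agent_name)}"


def handoff_back_pair(agent_name: str, supervisor_name: str) -> tuple[dict, dict]:
    """Plain-dict stand-in for create_handoff_back_messages (illustration only)."""
    tool_call_id = str(uuid.uuid4())
    tool_name = f"transfer_back_to_{normalize_agent_name(supervisor_name)}"
    ai_message = {
        "type": "ai",
        "name": agent_name,
        "content": f"Transferring back to {supervisor_name}",
        "tool_calls": [{"name": tool_name, "args": {}, "id": tool_call_id}],
    }
    tool_message = {
        "type": "tool",
        "name": tool_name,
        "content": f"Successfully transferred back to {supervisor_name}",
        # Same ID as the tool call above, which links the two messages.
        "tool_call_id": tool_call_id,
    }
    return ai_message, tool_message


ai_msg, tool_msg = handoff_back_pair("developer_expert", "supervisor")
```

Because the name is normalized, an agent called `"Tech Writer Expert"` would get the tool `transfer_to_tech_writer_expert`; the `goto` target, however, is still the original agent name, which is why agent names are best kept in snake_case from the start.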

In the code below, we introduce the supervisor. It acts as the orchestrator, managing the flow of tasks and information between Agents and recording their interactions in the message history according to `output_mode`.

```python
import inspect
from typing import Callable, Literal

from langchain_core.tools import BaseTool
from langchain_core.language_models import LanguageModelLike
from langgraph.graph import StateGraph, START
from langgraph.graph.state import CompiledStateGraph
from langgraph.prebuilt.chat_agent_executor import (
    AgentState,
    StateSchemaType,
    Prompt,
    create_react_agent,
)

OutputMode = Literal["full_history", "last_message"]
"""Mode for adding agent outputs to the message history in the multi-agent workflow

- `full_history`: add the entire agent message history
- `last_message`: add only the last message
"""


def _make_call_agent(
    agent: CompiledStateGraph,
    output_mode: OutputMode,
    add_handoff_back_messages: bool,
    supervisor_name: str,
) -> Callable[[dict], dict]:
    if output_mode not in OutputMode.__args__:
        raise ValueError(
            f"Invalid agent output mode: {output_mode}. "
            f"Needs to be one of {OutputMode.__args__}"
        )

    def call_agent(state: dict) -> dict:
        # Run the agent on the shared state and collect the messages it returns
        output = agent.invoke(state)
        messages = output["messages"]
        if output_mode == "full_history":
            pass  # keep the agent's entire message history
        elif output_mode == "last_message":
            messages = messages[-1:]  # keep only the agent's final message
        else:
            raise ValueError(
                f"Invalid agent output mode: {output_mode}. "
                f"Needs to be one of {OutputMode.__args__}"
            )

        if add_handoff_back_messages:
            # create_handoff_back_messages is defined in the previous snippet
            messages.extend(create_handoff_back_messages(agent.name, supervisor_name))

        return {"messages": messages}

    return call_agent


def create_supervisor(
    agents: list[CompiledStateGraph],
    *,
    model: LanguageModelLike,
    tools: list[Callable | BaseTool] | None = None,
    prompt: Prompt | None = None,
    state_schema: StateSchemaType = AgentState,
    output_mode: OutputMode = "last_message",
    add_handoff_back_messages: bool = True,
    supervisor_name: str = "supervisor",
) -> StateGraph:
    """Create a multi-agent supervisor.

    Args:
        agents: List of agents to manage
        model: Language model to use for the supervisor
        tools: Tools to use for the supervisor
        prompt: Optional prompt to use for the supervisor. Can be one of:
            - str: This is converted to a SystemMessage and added to the beginning of the list of messages in state["messages"].
            - SystemMessage: this is added to the beginning of the list of messages in state["messages"].
            - Callable: This function should take in full graph state and the output is then passed to the language model.
            - Runnable: This runnable should take in full graph state and the output is then passed to the language model.
        state_schema: State schema to use for the supervisor graph.
        output_mode: Mode for adding managed agents' outputs to the message history in the multi-agent workflow.
            Can be one of:
            - `full_history`: add the entire agent message history
            - `last_message`: add only the last message (default)
        add_handoff_back_messages: Whether to add a pair of (AIMessage, ToolMessage) to the message history
            when returning control to the supervisor to indicate that a handoff has occurred.
        supervisor_name: Name of the supervisor node.
    """
    # Every agent needs an explicit, unique name: it becomes the graph node
    # name and part of its handoff tool name.
    agent_names = set()
    for agent in agents:
        if agent.name is None or agent.name == "LangGraph":
            raise ValueError(
                "Please specify a name when you create your agent, either via `create_react_agent(..., name=agent_name)` "
                "or via `graph.compile(name=name)`."
            )

        if agent.name in agent_names:
            raise ValueError(
                f"Agent with name '{agent.name}' already exists. Agent names must be unique."
            )

        agent_names.add(agent.name)

    # One transfer_to_<agent_name> tool per managed agent
    handoff_tools = [create_handoff_tool(agent_name=agent.name) for agent in agents]
    all_tools = (tools or []) + handoff_tools

    # Where the model supports it, disable parallel tool calls so the
    # supervisor hands off to one agent at a time
    if (
        hasattr(model, "bind_tools")
        and "parallel_tool_calls" in inspect.signature(model.bind_tools).parameters
    ):
        model = model.bind_tools(all_tools, parallel_tool_calls=False)

    supervisor_agent = create_react_agent(
        name=supervisor_name,
        model=model,
        tools=all_tools,
        prompt=prompt,
        state_schema=state_schema,
    )

    builder = StateGraph(state_schema)
    # The supervisor may route to any of the managed agents
    builder.add_node(supervisor_agent, destinations=tuple(agent_names))
    builder.add_edge(START, supervisor_agent.name)
    for agent in agents:
        builder.add_node(
            agent.name,
            _make_call_agent(
                agent,
                output_mode,
                add_handoff_back_messages,
                supervisor_name,
            ),
        )
        # After an agent finishes, control always returns to the supervisor
        builder.add_edge(agent.name, supervisor_agent.name)

    return builder
```

- Output modes: `OutputMode = Literal["full_history", "last_message"]` defines how agent outputs are added to the shared message history:
  - `full_history`: records the agent's entire message exchange.
  - `last_message`: records only the agent's final message.

- Agent function creator: `_make_call_agent(...)` returns a `call_agent` function for each agent. It invokes the agent, filters the returned messages according to `output_mode`, and, if requested, appends the handoff-back messages that mark the return of control to the supervisor.

- Supervisor creation: `create_supervisor(...)` sets up the supervisory layer of the multi-agent system.
  - Arguments:
    - `agents`: list of compiled agent graphs to manage.
    - `model`: language model used by the supervisor.
    - `tools`: optional extra tools for the supervisor.
    - `prompt`: optional supervisor prompt (a string, `SystemMessage`, callable, or runnable).
    - `state_schema`: schema of the graph state.
    - `output_mode`: how the managed agents' outputs are recorded.
    - `add_handoff_back_messages`: whether to insert handoff messages when agents return control.
    - `supervisor_name`: name of the supervisor node in the graph.
  - Execution:
    - Validates that every agent has an explicit, unique name.
    - Creates a `transfer_to_<agent_name>` handoff tool for each agent and adds them to the supervisor's tools.
    - If the model's `bind_tools` accepts `parallel_tool_calls`, disables parallel tool calls so that only one handoff happens at a time.
    - Builds the `supervisor_agent` with `create_react_agent`.
    - Constructs a `StateGraph` with one node for the supervisor and one node per agent, and returns it uncompiled, so it must be compiled with `.compile()` before use.

- Graph construction: the graph is wired so that:
  - Execution starts at the supervisor agent (`START` to supervisor), which handles the initial routing.
  - Each agent node processes its task and then hands control back to the supervisor through a fixed edge.
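The effect of `output_mode` is easiest to see on a concrete message list. The sketch below is a dependency-free imitation of what `call_agent` does after an agent finishes, using plain strings instead of LangChain messages; `select_agent_output` and the sample `developer_run` are hypothetical names for illustration.

```python
from typing import Literal, get_args

OutputMode = Literal["full_history", "last_message"]


def select_agent_output(
    agent_messages: list[str],
    output_mode: OutputMode,
    agent_name: str,
    supervisor_name: str = "supervisor",
    add_handoff_back_messages: bool = True,
) -> list[str]:
    """Imitate how call_agent trims an agent's messages before they reach the supervisor."""
    if output_mode == "full_history":
        selected = list(agent_messages)  # keep everything the agent produced
    elif output_mode == "last_message":
        selected = agent_messages[-1:]  # keep only the agent's final answer
    else:
        raise ValueError(
            f"Invalid agent output mode: {output_mode}. Needs to be one of {get_args(OutputMode)}"
        )
    if add_handoff_back_messages:
        # String stand-ins for the (AIMessage, ToolMessage) handoff-back pair.
        selected += [
            f"{agent_name}: Transferring back to {supervisor_name}",
            f"tool: Successfully transferred back to {supervisor_name}",
        ]
    return selected


developer_run = [
    "developer_expert: calls read_python_files",
    "tool: <contents of the code file>",
    "developer_expert: calls read_policy_files",
    "tool: <contents of the policy file>",
    "developer_expert: final code review",
]
```

With the default `last_message`, the developer's intermediate tool calls never reach the shared history, which is why the printed result later in this article contains only each expert's final answer followed by the handoff-back pair. `full_history` is useful for debugging, at the cost of a longer supervisor context.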

Next, we initialize the LLM:

```python
from langchain_aws import ChatBedrock

max_output_tokens = 4096
temperature = 0.2
top_p = 0.95

model = ChatBedrock(
    # The provider must be given explicitly when model_id is an ARN
    provider="anthropic",
    # Account-specific inference profile ARN: replace it with your own ARN,
    # or with a system-defined inference profile ID such as
    # "us.anthropic.claude-3-5-sonnet-20241022-v2:0"
    model_id="arn:aws:bedrock:us-east-1:006774083229:inference-profile/us.anthropic.claude-3-5-sonnet-20241022-v2:0",
    model_kwargs={
        "max_tokens": int(max_output_tokens),
        "temperature": float(temperature),
        "top_p": float(top_p),
    },
)
```

The developer Agent must be able to read the code files, so we will create a tool function that lets it read code from a given folder.

For the review, I will use the first code snippet from this article (the one containing the `create_handoff_tool` function) as the code file.

A second tool allows the developer Agent to read the company policies.

We don’t embed the policies in the developer Agent’s prompt because they may change frequently: in a production deployment, we would want to add, remove, and edit policies without touching the prompt.

Here are the developer Agent tools:

```python
import os

from langgraph.prebuilt import create_react_agent


# The docstrings below become the tool descriptions the LLM sees.
def read_python_files(directory: str = "code_files") -> str:
    """
    Reads all Python (.py) files from the given directory and concatenates them
    into a single string, wrapping each file in a header that states its name.
    """
    content = ""
    # sorted() keeps the file order stable between runs
    for filename in sorted(os.listdir(directory)):
        if filename.endswith(".py"):
            path = os.path.join(directory, filename)
            with open(path, "r", encoding="utf-8") as file:
                file_content = file.read()
            content += f"########\n Name of the file: {filename}\n Content of the file: \n{file_content}\n########\n"
    return content


def read_policy_files(directory: str = "policies") -> str:
    """
    Reads all company policy text (.txt) files from the given directory and
    concatenates them into a single string, wrapping each file in a header
    that states its name.
    """
    content = ""
    for filename in sorted(os.listdir(directory)):
        if filename.endswith(".txt"):
            path = os.path.join(directory, filename)
            with open(path, "r", encoding="utf-8") as file:
                file_content = file.read()
            content += f"########\n Name of the file: {filename}\n Content of the file: \n{file_content}\n########\n"
    return content
```
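Both tools read from folders relative to the notebook's working directory, so those folders have to exist before the agents run. The sketch below is a hypothetical setup helper, not part of the original code: it creates `code_files` and `policies`, writes the code you want reviewed, and stores this article's single policy in a file whose name (`documentation_policy.txt`) is an assumption, since any `.txt` file in `policies` would be picked up.

```python
from pathlib import Path

# The single policy used in this article.
POLICY_TEXT = "1. Every line of code has to be documented with explanations of what it does.\n"


def prepare_review_workspace(base_dir: str, code_files: dict[str, str]) -> tuple[Path, Path]:
    """Create the `code_files` and `policies` folders that the two tools read from.

    `code_files` maps file names (for example "handoff.py") to their source code.
    """
    base = Path(base_dir)
    code_dir = base / "code_files"
    policy_dir = base / "policies"
    code_dir.mkdir(parents=True, exist_ok=True)
    policy_dir.mkdir(parents=True, exist_ok=True)
    for filename, source in code_files.items():
        (code_dir / filename).write_text(source, encoding="utf-8")
    # Hypothetical file name: any *.txt file in policies/ would be read.
    (policy_dir / "documentation_policy.txt").write_text(POLICY_TEXT, encoding="utf-8")
    return code_dir, policy_dir
```

In the notebook you would call it with `base_dir="."` so the folders match the tools' default arguments. The run shown later refers to the reviewed file as `handoff.py`.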

You can put any Python code in the `code_files` directory, and our team of Agents will review it. Policy files should be saved as `.txt` files in the `policies` directory. For now, I will add only one policy:

1. Every line of code has to be documented with explanations of what it does.

Now, we will initialize the specialized Agents, each focused on a narrow set of tasks:

```python
# Create specialized agents
developer_agent_prompt = '''
You are an MLOps expert.
You are provided with code, which you can read using the read_python_files tool.
You are provided with company coding guidelines, which you can read using the read_policy_files tool.
Both tools return the content you need to perform your tasks.
You have two main tasks:
1. You can be asked to perform a code review of the Python files. Use company policies, your knowledge and judgement to perform the code review.
Provide detailed feedback and improved code as an answer.
2. You can be asked to explain the code. Use read_python_files to load the Python code. Provide an explanation of the code based on the request.
Before producing the explanation, try to understand the level of granularity required and the user persona for whom the explanations are created.
'''

developer_agent = create_react_agent(
    model=model,
    tools=[read_python_files, read_policy_files],
    name="developer_expert",
    prompt=developer_agent_prompt,
)


def get_code_explanations(query: str) -> str:
    """Ask the developer expert to explain the code. The query should state the
    required level of granularity, the technical details and the reader persona."""
    result = developer_agent.invoke({
        "messages": [
            {
                "role": "user",
                "content": query,
            }
        ]
    })
    # Return only the developer's final answer instead of the whole agent
    # state, so the tool output is the plain string the type hint promises
    return result["messages"][-1].content


tech_writer_agent_prompt = '''
You are a best-in-class technical writer.
Your job is to create documentation of the code.
You can use the get_code_explanations tool to receive explanations of the code. The tool knows where to find the code.
When using this tool, you must always provide the required level of granularity, the technical details and the user persona who will read the documentation.
'''

tech_writer_agent = create_react_agent(
    model=model,
    tools=[get_code_explanations],
    name="tech_writer_expert",
    prompt=tech_writer_agent_prompt,
)
```

The tech writer Agent cannot access the code or the corporate policies directly (least privilege); it can only ask the developer Agent for explanations through `get_code_explanations`.

The last element of the system is the supervisor Agent:

```python
# Create supervisor workflow
supervisor_prompt = '''
You are a supervisor managing a technical writer and an MLOps expert.
Your task is to communicate with the user and provide all the information the user is asking for.
You can ask the MLOps expert to perform a code review and explain the code.
You can ask the technical writer to provide documentation of the code.
Both the MLOps expert and the technical writer know where the code is located. You just have to give them the task.
When requesting documentation from the technical writer, you must explain who it is for and what its purpose is.
Using the information provided by the MLOps expert and the technical writer, reply to the user with the requested information.
'''

workflow = create_supervisor(
    [tech_writer_agent, developer_agent],
    model=model,
    prompt=supervisor_prompt,
)
```

It looks like everything is ready for testing now.

Let’s compile and run the LangGraph workflow:

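```python
# Compile and run
app = workflow.compile()

result = app.invoke({
    "messages": [
        {
            "role": "user",
            # The trailing space keeps the two sentences from running together
            # (the printed run below was produced without it)
            "content": (
                "Please provide code review and documentation of the code. "
                "The documentation is needed for the developers to understand what is going on in the code."
            ),
        }
    ]
})

# For a more readable view: for m in result["messages"]: m.pretty_print()
print(result)
```

For me, the result looks like this: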

{'messages': [HumanMessage(content='Please provide code review and documentation of the code.The documentation is needed for the developers to understand what is going on in the code.', additional_kwargs={}, response_metadata={}, id='0f47be5e-16f1-4e4a-b799-7d9723cce305'), AIMessage(content='', additional_kwargs={'usage': {'prompt_tokens': 578, 'completion_tokens': 87, 'total_tokens': 665}, 'stop_reason': 'tool_use', 'model_id': 'arn:aws:bedrock:us-east-1:006774083229:inference-profile/us.anthropic.claude-3-5-sonnet-20241022-v2:0'}, response_metadata={'usage': {'prompt_tokens': 578, 'completion_tokens': 87, 'total_tokens': 665}, 'stop_reason': 'tool_use', 'model_id': 'arn:aws:bedrock:us-east-1:006774083229:inference-profile/us.anthropic.claude-3-5-sonnet-20241022-v2:0'}, name='supervisor', id='run-fdc0ea32-2d74-47ac-8e5d-4d773d4b4adc-0', tool_calls=[{'name': 'transfer_to_developer_expert', 'args': {}, 'id': 'toolu_bdrk_017PAdxBBnyZGs1kD1759j2q', 'type': 'tool_call'}], usage_metadata={'input_tokens': 578, 'output_tokens': 87, 'total_tokens': 665}), ToolMessage(content='Successfully transferred to developer_expert', name='transfer_to_developer_expert', id='15d0b989-d83b-4371-9fa0-248c0e9204e9', tool_call_id='toolu_bdrk_017PAdxBBnyZGs1kD1759j2q'), AIMessage(content='Let me provide a comprehensive code review and documentation for the handoff.py file.\n\nCode Review:\n\n1. Code Structure and Organization:\n- The code is well-organized and follows a modular approach\n- Clear separation of concerns with distinct functions for different responsibilities\n- Good use of type hints and annotations\n- Proper imports are organized at the top\n\n2. Type Safety:\n- Appropriate use of type hints throughout the code\n- Good use of Annotated for tool_call_id parameter\n- Clear return type definitions\n\n3. 
Documentation:\n- Basic docstrings are present but could be more detailed\n- Some functions lack parameter descriptions in docstrings\n- Return value documentation is missing in some functions\n\n4. Best Practices:\n- Good use of constants (WHITESPACE_RE)\n- Proper error handling through type system\n- Clean code formatting\n- Good use of meaningful variable names\n\n5. Areas for Improvement:\n- Add more comprehensive docstrings\n- Add input validation for agent names\n- Consider adding error handling for edge cases\n- Add return type hints for all functions\n\nHere\'s the improved and fully documented version of the code:\n\n```python\nimport re\nimport uuid\nfrom typing_extensions import Annotated\nfrom langchain_core.messages import AIMessage, ToolMessage, ToolCall\nfrom langchain_core.tools import tool, BaseTool, InjectedToolCallId\nfrom langgraph.types import Command\n\n# Regular expression to match any whitespace characters\nWHITESPACE_RE = re.compile(r"\\s+")\n\ndef _normalize_agent_name(agent_name: str) -> str:\n """Normalize an agent name by converting it to a format suitable for tool names.\n \n Args:\n agent_name (str): The original agent name to be normalized.\n \n Returns:\n str: Normalized agent name with whitespace replaced by underscores and converted to lowercase.\n \n Example:\n >>> _normalize_agent_name("My Agent")\n "my_agent"\n """\n return WHITESPACE_RE.sub("_", agent_name.strip()).lower()\n\ndef create_handoff_tool(*, agent_name: str) -> BaseTool:\n """Create a tool that enables control transfer to another agent in the system.\n \n This function creates a tool that can be used to transfer control from one agent\n to another within a multi-agent graph system.\n \n Args:\n agent_name (str): The name of the target agent to transfer control to.\n Should be a unique identifier in snake_case format.\n \n Returns:\n BaseTool: A tool instance that can be used to perform the handoff operation.\n \n Note:\n The created tool will have a name in the 
format: `transfer_to_<agent_name>`.\n The agent name should be compatible with both LangGraph nodes and LLM provider\n tool name requirements.\n """\n tool_name = f"transfer_to_{_normalize_agent_name(agent_name)}"\n \n @tool(tool_name)\n def handoff_to_agent(\n tool_call_id: Annotated[str, InjectedToolCallId],\n ) -> Command:\n """Execute the handoff operation to transfer control to another agent.\n \n Args:\n tool_call_id (str): Unique identifier for the tool call, automatically injected.\n \n Returns:\n Command: A LangGraph command object containing the handoff instructions.\n """\n tool_message = ToolMessage(\n content=f"Successfully transferred to {agent_name}",\n name=tool_name,\n tool_call_id=tool_call_id,\n )\n return Command(\n goto=agent_name,\n graph=Command.PARENT,\n update={"messages": [tool_message]},\n )\n \n return handoff_to_agent\n\ndef create_handoff_back_messages(\n agent_name: str, \n supervisor_name: str\n) -> tuple[AIMessage, ToolMessage]:\n """Create messages required for transferring control back to the supervisor.\n \n This function generates the necessary message pair to document and execute\n a control transfer back to the supervising agent.\n \n Args:\n agent_name (str): The name of the current agent transferring control back.\n supervisor_name (str): The name of the supervisor agent receiving control.\n \n Returns:\n tuple[AIMessage, ToolMessage]: A pair of messages:\n - AIMessage: The message indicating the transfer initiation\n - ToolMessage: The message confirming successful transfer\n \n Example:\n >>> messages = create_handoff_back_messages("agent_1", "supervisor")\n >>> ai_message, tool_message = messages\n """\n tool_call_id = str(uuid.uuid4())\n tool_name = f"transfer_back_to_{_normalize_agent_name(supervisor_name)}"\n tool_calls = [ToolCall(name=tool_name, args={}, id=tool_call_id)]\n \n return (\n AIMessage(\n content=f"Transferring back to {supervisor_name}",\n tool_calls=tool_calls,\n name=agent_name,\n ),\n ToolMessage(\n 
content=f"Successfully transferred back to {supervisor_name}",\n name=tool_name,\n tool_call_id=tool_call_id,\n ),\n )\n```\n\nDocumentation for Developers:\n\n1. Purpose:\nThis module implements a control transfer mechanism between agents in a multi-agent system. It provides tools and utilities to handle agent handoffs and returns in a structured way.\n\n2. Key Components:\n\na) Agent Name Normalization (_normalize_agent_name):\n- Utility function to standardize agent names\n- Converts spaces to underscores and ensures lowercase\n- Used internally by other functions\n\nb) Handoff Tool Creation (create_handoff_tool):\n- Creates a specialized tool for transferring control to another agent\n- Uses the LangChain tool decorator\n- Generates appropriate tool names and messages\n- Returns a BaseTool instance that can be used in the agent system\n\nc) Return Messages Creation (create_handoff_back_messages):\n- Handles the return path from an agent back to its supervisor\n- Creates both the initiating message and confirmation message\n- Uses UUID for unique tool call identification\n- Returns a tuple of AIMessage and ToolMessage\n\n3. Usage Example:\n```python\n# Create a handoff tool for transferring to a specific agent\ntool = create_handoff_tool(agent_name="data_processor")\n\n# Create messages for returning to supervisor\nai_msg, tool_msg = create_handoff_back_messages(\n agent_name="data_processor",\n supervisor_name="main_supervisor"\n)\n```\n\n4. Dependencies:\n- langchain_core.messages\n- langchain_core.tools\n- langgraph.types\n- typing_extensions\n- uuid\n- re\n\n5. Best Practices:\n- Always use snake_case for agent names\n- Ensure agent names are unique within your system\n- Handle the returned messages appropriately in your agent logic\n- Store tool_call_ids if you need to track handoffs\n\n6. 
Error Handling:\n- The code relies on type checking for basic error prevention\n- Invalid agent names will be normalized but not validated\n- UUID generation ensures unique tool call IDs\n\nThis documentation should provide developers with a clear understanding of the code\'s purpose, functionality, and usage patterns.', additional_kwargs={'usage': {'prompt_tokens': 1611, 'completion_tokens': 1762, 'total_tokens': 3373}, 'stop_reason': 'end_turn', 'model_id': 'arn:aws:bedrock:us-east-1:006774083229:inference-profile/us.anthropic.claude-3-5-sonnet-20241022-v2:0'}, response_metadata={'usage': {'prompt_tokens': 1611, 'completion_tokens': 1762, 'total_tokens': 3373}, 'stop_reason': 'end_turn', 'model_id': 'arn:aws:bedrock:us-east-1:006774083229:inference-profile/us.anthropic.claude-3-5-sonnet-20241022-v2:0'}, name='developer_expert', id='run-44e84e3d-7180-4c35-9216-742306fddef6-0', usage_metadata={'input_tokens': 1611, 'output_tokens': 1762, 'total_tokens': 3373}), AIMessage(content='Transferring back to supervisor', additional_kwargs={}, response_metadata={}, name='developer_expert', id='39057768-b5d1-4069-848b-939679a8d222', tool_calls=[{'name': 'transfer_back_to_supervisor', 'args': {}, 'id': 'f571798e-3409-484d-9584-196d1572ab4f', 'type': 'tool_call'}]), ToolMessage(content='Successfully transferred back to supervisor', name='transfer_back_to_supervisor', id='481b6b6c-5896-4c5d-a550-564de86384e8', tool_call_id='f571798e-3409-484d-9584-196d1572ab4f'), AIMessage(content='', additional_kwargs={'usage': {'prompt_tokens': 2459, 'completion_tokens': 63, 'total_tokens': 2522}, 'stop_reason': 'tool_use', 'model_id': 'arn:aws:bedrock:us-east-1:006774083229:inference-profile/us.anthropic.claude-3-5-sonnet-20241022-v2:0'}, response_metadata={'usage': {'prompt_tokens': 2459, 'completion_tokens': 63, 'total_tokens': 2522}, 'stop_reason': 'tool_use', 'model_id': 'arn:aws:bedrock:us-east-1:006774083229:inference-profile/us.anthropic.claude-3-5-sonnet-20241022-v2:0'}, 
name='supervisor', id='run-c2b03c9c-4529-4f09-80a6-79f9ad17a900-0', tool_calls=[{'name': 'transfer_to_tech_writer_expert', 'args': {}, 'id': 'toolu_bdrk_01VzWKrKcDrbhj8XUVcghEcT', 'type': 'tool_call'}], usage_metadata={'input_tokens': 2459, 'output_tokens': 63, 'total_tokens': 2522}), ToolMessage(content='Successfully transferred to tech_writer_expert', name='transfer_to_tech_writer_expert', id='5fbf11d6-cc05-4d79-99e8-0551404c21a2', tool_call_id='toolu_bdrk_01VzWKrKcDrbhj8XUVcghEcT'), AIMessage(content='Based on the code explanations received, I\'ll create a comprehensive technical documentation for developers:\n\n# Agent Handoff System Documentation\n\n## Overview\nThe Agent Handoff System provides a robust mechanism for managing control flow between agents in a multi-agent system. It enables seamless transitions between different agents while maintaining state and ensuring proper communication protocols.\n\n## Technical Architecture\n\n### Core Components\n\n1. **Name Normalization System**\n```python\nWHITESPACE_RE = re.compile(r"\\s+")\n```\n- Handles standardization of agent names\n- Converts arbitrary strings to snake_case format\n- Ensures consistency in tool naming conventions\n\n2. **Handoff Tool Factory**\n```python\ndef create_handoff_tool(*, agent_name: str) -> BaseTool\n```\n- Creates specialized tools for agent transitions\n- Implements factory pattern for tool generation\n- Returns LangChain-compatible BaseTool instances\n\n3. 
**Message Generation System**\n```python\ndef create_handoff_back_messages(\n agent_name: str, \n supervisor_name: str\n) -> tuple[AIMessage, ToolMessage]\n```\n- Generates standardized message pairs for agent communication\n- Implements UUID-based tool call tracking\n- Maintains message history consistency\n\n## Implementation Details\n\n### Dependencies\n```python\nfrom langchain_core.messages import AIMessage, ToolMessage, ToolCall\nfrom langchain_core.tools import tool, BaseTool, InjectedToolCallId\nfrom langgraph.types import Command\n```\n\n### Type Safety\nThe system implements comprehensive type safety through:\n- Type hints for all function parameters\n- Return type annotations\n- Annotated types for injected dependencies\n\n### Control Flow Mechanisms\n\n1. **Agent Handoff**\n```python\nCommand(\n goto=agent_name,\n graph=Command.PARENT,\n update={"messages": [tool_message]}\n)\n```\n- Directs control flow to target agent\n- Updates message history\n- Maintains parent graph reference\n\n2. **Message Structure**\n```python\nAIMessage(\n content=f"Transferring back to {supervisor_name}",\n tool_calls=tool_calls,\n name=agent_name\n)\n```\n- Structured message format\n- Tool call tracking\n- Agent identity preservation\n\n## Usage Examples\n\n### Creating a Handoff Tool\n```python\n# Create a tool for transferring to a data processing agent\ndata_processor_tool = create_handoff_tool(agent_name="data_processor")\n```\n\n### Generating Return Messages\n```python\n# Create messages for returning to main supervisor\nai_message, tool_message = create_handoff_back_messages(\n agent_name="data_processor",\n supervisor_name="main_supervisor"\n)\n```\n\n## Best Practices\n\n1. **Agent Naming**\n - Use descriptive, unique names\n - Follow snake_case convention\n - Avoid special characters\n\n2. **Tool Management**\n - Create tools at initialization\n - Store tool references for reuse\n - Validate agent names before creation\n\n3. 
**Message Handling**\n - Process message pairs together\n - Maintain message order\n - Track tool call IDs\n\n## Error Handling and Edge Cases\n\n1. **Name Normalization**\n - Handles multiple whitespace characters\n - Converts to lowercase\n - Strips leading/trailing spaces\n\n2. **Tool Creation**\n - Validates input parameters\n - Ensures unique tool names\n - Maintains type safety\n\n## Integration Points\n\n1. **LangChain Integration**\n - BaseTool implementation\n - Message system compatibility\n - Tool decoration\n\n2. **LangGraph Integration**\n - Command system usage\n - Graph control flow\n - Parent graph references\n\n## Performance Considerations\n\n1. **Memory Usage**\n - Message creation is immutable\n - UUID generation for each tool call\n - Regex compilation at module level\n\n2. **Scalability**\n - Supports multiple concurrent agents\n - Lightweight message passing\n - Efficient control flow\n\n## Security Considerations\n\n1. **Input Validation**\n - Agent name sanitization\n - Tool call ID verification\n - Message content validation\n\n2. **Access Control**\n - Parent graph reference protection\n - Tool name uniqueness\n - Message integrity\n\n## Troubleshooting\n\nCommon issues and solutions:\n\n1. **Invalid Agent Names**\n - Ensure names follow snake_case\n - Check for special characters\n - Verify uniqueness\n\n2. **Message Flow Issues**\n - Verify message pair creation\n - Check tool call IDs\n - Confirm agent existence\n\n3. **Tool Creation Failures**\n - Validate input parameters\n - Check naming conflicts\n - Verify LangChain compatibility\n\n## Future Considerations\n\n1. **Extensibility**\n - Support for additional message types\n - Custom tool behaviors\n - Enhanced flow control\n\n2. **Monitoring**\n - Tool usage tracking\n - Performance metrics\n - Error logging\n\nThis documentation provides developers with a comprehensive understanding of the Agent Handoff System\'s implementation, usage, and best practices. 
For specific implementation details or advanced usage scenarios, refer to the inline code comments and type hints.', additional_kwargs={'usage': {'prompt_tokens': 5214, 'completion_tokens': 1204, 'total_tokens': 6418}, 'stop_reason': 'end_turn', 'model_id': 'arn:aws:bedrock:us-east-1:006774083229:inference-profile/us.anthropic.claude-3-5-sonnet-20241022-v2:0'}, response_metadata={'usage': {'prompt_tokens': 5214, 'completion_tokens': 1204, 'total_tokens': 6418}, 'stop_reason': 'end_turn', 'model_id': 'arn:aws:bedrock:us-east-1:006774083229:inference-profile/us.anthropic.claude-3-5-sonnet-20241022-v2:0'}, name='tech_writer_expert', id='run-b0fa51e8-9dd1-4785-8670-14342c6735d4-0', usage_metadata={'input_tokens': 5214, 'output_tokens': 1204, 'total_tokens': 6418}), AIMessage(content='Transferring back to supervisor', additional_kwargs={}, response_metadata={}, name='tech_writer_expert', id='ebdc3c11-d62e-4e10-b27f-359ac0a5c05f', tool_calls=[{'name': 'transfer_back_to_supervisor', 'args': {}, 'id': '89d4cde8-db4a-4c77-b84f-5f0b6b7cbb97', 'type': 'tool_call'}]), ToolMessage(content='Successfully transferred back to supervisor', name='transfer_back_to_supervisor', id='e5ddfdab-91ee-4153-aab2-f4ac897eb761', tool_call_id='89d4cde8-db4a-4c77-b84f-5f0b6b7cbb97'), AIMessage(content="I have gathered both the code review and documentation from our experts. Let me summarize the key points for you:\n\nThe code review revealed that the code is:\n1. Well-structured and modular\n2. Implements proper type safety\n3. Follows good coding practices\n4. Has areas for improvement in documentation and error handling\n\nThe technical documentation provides:\n1. Comprehensive overview of the Agent Handoff System\n2. Detailed technical architecture\n3. Implementation details with examples\n4. Best practices and integration guidelines\n5. 
Troubleshooting and security considerations\n\nThe documentation has been specifically tailored for developers to understand:\n- How the system works\n- How to implement and use the handoff mechanisms\n- Best practices for implementation\n- How to handle errors and edge cases\n- Integration points with LangChain and LangGraph\n- Security and performance considerations\n\nIs there any specific aspect of the code review or documentation you'd like me to elaborate on?", additional_kwargs={'usage': {'prompt_tokens': 3786, 'completion_tokens': 210, 'total_tokens': 3996}, 'stop_reason': 'end_turn', 'model_id': 'arn:aws:bedrock:us-east-1:006774083229:inference-profile/us.anthropic.claude-3-5-sonnet-20241022-v2:0'}, response_metadata={'usage': {'prompt_tokens': 3786, 'completion_tokens': 210, 'total_tokens': 3996}, 'stop_reason': 'end_turn', 'model_id': 'arn:aws:bedrock:us-east-1:006774083229:inference-profile/us.anthropic.claude-3-5-sonnet-20241022-v2:0'}, name='supervisor', id='run-4d9ee4c4-80a8-48d4-8424-9c30cfd56a73-0', usage_metadata={'input_tokens': 3786, 'output_tokens': 210, 'total_tokens': 3996})]}

Let’s take a closer look at the communication that happened within our team in response to the user’s request.
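Each agent’s turn in the output above ends with the same two messages: an `AIMessage` carrying a `transfer_back_to_supervisor` tool call, followed by a `ToolMessage` whose `tool_call_id` matches that call. The sketch below reproduces this handoff-back pair with plain dictionaries so it runs without LangChain installed. The dictionary keys mirror the fields visible in the log; the helper names `normalize_agent_name` and `make_handoff_back_messages` are hypothetical and are not the `langgraph_supervisor` API.

```python
import re
import uuid

# Collapse any run of whitespace into one underscore (assumed normalization rule,
# matching the behavior the documentation describes for WHITESPACE_RE).
WHITESPACE_RE = re.compile(r"\s+")


def normalize_agent_name(agent_name: str) -> str:
    """Strip, replace whitespace runs with '_', and lowercase the name."""
    return WHITESPACE_RE.sub("_", agent_name.strip()).lower()


def make_handoff_back_messages(agent_name: str, supervisor_name: str) -> tuple[dict, dict]:
    """Build the AI/tool message pair an agent emits when returning control.

    Hypothetical plain-dict stand-ins for AIMessage and ToolMessage.
    """
    tool_name = f"transfer_back_to_{normalize_agent_name(supervisor_name)}"
    tool_call_id = str(uuid.uuid4())  # one fresh ID links the two messages
    ai_message = {
        "type": "ai",
        "name": agent_name,
        "content": f"Transferring back to {supervisor_name}",
        "tool_calls": [
            {"name": tool_name, "args": {}, "id": tool_call_id, "type": "tool_call"}
        ],
    }
    tool_message = {
        "type": "tool",
        "name": tool_name,
        "content": f"Successfully transferred back to {supervisor_name}",
        "tool_call_id": tool_call_id,
    }
    return ai_message, tool_message


ai_msg, tool_msg = make_handoff_back_messages("tech_writer_expert", "supervisor")
print(ai_msg["tool_calls"][0]["name"])
print(tool_msg["tool_call_id"] == ai_msg["tool_calls"][0]["id"])
```

The shared UUID is what lets the graph pair each tool result with the call that produced it, which is why the log shows the same ID on both messages.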

The Developer Agent created the code review report and documentation:

### DEVELOPER ###

Let me provide a comprehensive code review and documentation for the handoff.py file.

Code Review:

1. Code Structure and Organization:

- The code is well-organized and follows a modular approach

- Clear separation of concerns with distinct functions for different responsibilities

- Good use of type hints and annotations

- Proper imports are organized at the top

2. Type Safety:

- Appropriate use of type hints throughout the code

- Good use of Annotated for tool_call_id parameter

- Clear return type definitions

3. Documentation:

- Basic docstrings are present but could be more detailed

- Some functions lack parameter descriptions in docstrings

- Return value documentation is missing in some functions

4. Best Practices:

- Good use of constants (WHITESPACE_RE)

- Proper error handling through type system

- Clean code formatting

...

- UUID generation ensures unique tool call IDs

This documentation should provide developers with a clear understanding of the code's purpose, functionality, and usage patterns.

The Technical Writer Agent requested the details it needed from the Developer Agent and produced comprehensive documentation describing the code:

### TECHNICAL WRITER ###

Based on the code explanations received, I'll create a comprehensive technical documentation for developers:

# Agent Handoff System Documentation

## Overview

The Agent Handoff System provides a robust mechanism for managing control flow between agents in a multi-agent system. It enables seamless transitions between different agents while maintaining state and ensuring proper communication protocols.

## Technical Architecture

### Core Components

1. **Name Normalization System**

```python
WHITESPACE_RE = re.compile(r"\s+")
```

- Handles standardization of agent names

- Converts arbitrary strings to snake_case format

- Ensures consistency in tool naming conventions

2. **Handoff Tool Factory**

```python
def create_handoff_tool(*, agent_name: str) -> BaseTool
```

- Creates specialized tools for agent transitions

- Implements factory pattern for tool generation

- Returns LangChain-compatible BaseTool instances

3. **Message Generation System**

```python
def create_handoff_back_messages(
    agent_name: str,
    supervisor_name: str
) -> tuple[AIMessage, ToolMessage]
```

- Generates standardized message pairs for agent communication

- Implements UUID-based tool call tracking

- Maintains message history consistency

## Implementation Details

### Dependencies

```python
from langchain_core.messages import AIMessage, ToolMessage, ToolCall
from langchain_core.tools import tool, BaseTool, InjectedToolCallId
from langgraph.types import Command
```

### Type Safety

The system implements comprehensive type safety through:

- Type hints for all function parameters

- Return type annotations

- Annotated types for injected dependencies

### Control Flow Mechanisms

1. **Agent Handoff**

```python
Command(
    goto=agent_name,
    graph=Command.PARENT,
    update={"messages": [tool_message]}
)
```

- Directs control flow to target agent

- Updates message history

- Maintains parent graph reference

2. **Message Structure**

```python
AIMessage(
    content=f"Transferring back to {supervisor_name}",
    tool_calls=tool_calls,
    name=agent_name
)
```

- Structured message format

- Tool call tracking

- Agent identity preservation

## Usage Examples

### Creating a Handoff Tool

```python
# Create a tool for transferring to a data processing agent
data_processor_tool = create_handoff_tool(agent_name="data_processor")
```

### Generating Return Messages

```python
# Create messages for returning to main supervisor
ai_message, tool_message = create_handoff_back_messages(
    agent_name="data_processor",
    supervisor_name="main_supervisor"
)
```

## Best Practices

1. **Agent Naming**

- Use descriptive, unique names

- Follow snake_case convention

- Avoid special characters

2. **Tool Management**

- Create tools at initialization

- Store tool references for reuse

- Validate agent names before creation

3. **Message Handling**

- Process message pairs together

- Maintain message order

- Track tool call IDs

## Error Handling and Edge Cases

1. **Name Normalization**

- Handles multiple whitespace characters

- Converts to lowercase

- Strips leading/trailing spaces

2. **Tool Creation**

- Validates input parameters

- Ensures unique tool names

- Maintains type safety

## Integration Points

1. **LangChain Integration**

- BaseTool implementation

- Message system compatibility

- Tool decoration

2. **LangGraph Integration**

- Command system usage

- Graph control flow

- Parent graph references

## Performance Considerations

1. **Memory Usage**

- Message creation is immutable

- UUID generation for each tool call

- Regex compilation at module level

2. **Scalability**

- Supports multiple concurrent agents

- Lightweight message passing

- Efficient control flow

## Security Considerations

1. **Input Validation**

- Agent name sanitization

- Tool call ID verification

- Message content validation

2. **Access Control**

- Parent graph reference protection

- Tool name uniqueness

- Message integrity

## Troubleshooting

Common issues and solutions:

1. **Invalid Agent Names**

- Ensure names follow snake_case

- Check for special characters

- Verify uniqueness

2. **Message Flow Issues**

- Verify message pair creation

- Check tool call IDs

- Confirm agent existence

3. **Tool Creation Failures**

- Validate input parameters

- Check naming conflicts

- Verify LangChain compatibility

## Future Considerations

1. **Extensibility**

- Support for additional message types

- Custom tool behaviors

- Enhanced flow control

2. **Monitoring**

- Tool usage tracking

- Performance metrics

- Error logging

This documentation provides developers with a comprehensive understanding of the Agent Handoff System's implementation, usage, and best practices. For specific implementation details or advanced usage scenarios, refer to the inline code comments and type hints.

AI Code Review LLM Comparison

The supervisor wrote the following summary based on the information from both of the Agents:

### SUPERVISOR ###

I have gathered both the code review and documentation from our experts. Let me summarize the key points for you:

The code review revealed that the code is:

1. Well-structured and modular

2. Implements proper type safety

3. Follows good coding practices

4. Has areas for improvement in documentation and error handling

The technical documentation provides:

1. Comprehensive overview of the Agent Handoff System

2. Detailed technical architecture

3. Implementation details with examples

4. Best practices and integration guidelines

5. Troubleshooting and security considerations

The documentation has been specifically tailored for developers to understand:

- How the system works

- How to implement and use the handoff mechanisms

- Best practices for implementation

- How to handle errors and edge cases

- Integration points with LangChain and LangGraph

- Security and performance considerations

Is there any specific aspect of the code review or documentation you'd like me to elaborate on?

In the case above, the supervisor produced a review of its subordinates’ work instead of focusing on the user’s request, which may not be what the user actually wanted as an answer.

This might have happened because I deliberately placed the most important part of the supervisor instructions at the end of the prompt:

Using the information provided to you by MLOps expert and technical writer, reply user and return the requested information to the user.

I wanted to see how well the Claude 3.5 Sonnet model would understand and prioritize different parts of the prompt.

The results were not good. I liked how the Developer and Technical Writer Agents did their jobs, but not how the supervisor did its job.
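One way to test the hypothesis that instruction order matters is to assemble the prompt so the deciding instruction comes first and the supporting context follows. The helper below, `build_supervisor_prompt`, is hypothetical (it is not part of LangGraph), the lines it uses are a lightly grammar-corrected version of the supervisor instructions, and whether this ordering actually changes Claude 3.5 Sonnet’s behavior was not tested in this article.

```python
def build_supervisor_prompt(primary_instruction: str, context_lines: list[str]) -> str:
    """Put the primary instruction first, then the supporting context.

    Hypothetical helper: the ordering is the experiment, not a proven fix.
    """
    lines = [primary_instruction.strip()]
    # Drop empty lines so the prompt stays compact.
    lines += [line.strip() for line in context_lines if line.strip()]
    return "\n".join(lines)


prompt = build_supervisor_prompt(
    "Using the information provided to you by the MLOps expert and the technical writer, "
    "reply to the user and return the requested information.",
    [
        "You are a supervisor managing a technical writer and an MLOps expert.",
        "You can ask the MLOps expert to perform a code review and explain the code.",
        "You can ask the technical writer to provide documentation of the code.",
    ],
)
print(prompt)
```

The resulting string could be passed as the `prompt` argument to `create_supervisor`, exactly as the original prompt is.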

Let’s look at the reply from a supervisor based on OpenAI’s GPT-4o.

Initialize the supervisor:

```python
# Create supervisor workflow
from langchain_openai import ChatOpenAI
from langgraph_supervisor import create_supervisor

# OPENAI_API_KEY, tech_writer_agent and developer_agent are assumed to be defined earlier.
model_name = 'gpt-4o'
max_output_tokens = 4096
temperature = 0
top_p = 0.95
frequency_penalty = 1
presence_penalty = 1

supervisor_model = ChatOpenAI(
    openai_api_key=OPENAI_API_KEY,
    model=model_name,
    max_tokens=int(max_output_tokens),
    temperature=float(temperature),
    top_p=float(top_p),
    frequency_penalty=float(frequency_penalty),
    presence_penalty=float(presence_penalty),
)

supervisor_prompt = '''
You are a supervisor managing technical writer and MLOps expert.
Your task is to communicate with user and provide all necessary information user is asking for.
You can ask MLOps expert to perform a code review and explain the code.
You can ask technical writer to provide documentation of the code.
Both MLOps expert and technical writer know where the coe is located. You just have to give them the task.
When requesting the documentation from the technical writer, you must explain for whom this is needed and what is the purpose of the documentation.
Using the information provided to you by MLOps expert and technical writer, reply user and return the requested information to the user.
'''

workflow = create_supervisor(
    [tech_writer_agent, developer_agent],
    model=supervisor_model,
    prompt=supervisor_prompt,
)
```

Compile and run the LangGraph workflow:

```python
# Compile and run
app = workflow.compile()

result = app.invoke({
    "messages": [
        {
            "role": "user",
            "content": "Please explain me the concept of the tool that can handoff control to the requested agent"
        }
    ]
})

print(result)
```

Let’s look at the results:

### SUPERVISOR ###

The tool you're referring to is a system that allows for the delegation of tasks or requests to specialized agents, such as technical writers or MLOps experts. This system is designed to streamline workflows by ensuring that specific queries or tasks are handled by individuals with the appropriate expertise.

Here's how it works:

1. **Task Identification**: When a request comes in, it's identified whether it requires specialized knowledge.

2. **Agent Assignment**: Based on the nature of the task, it's assigned to an agent who has expertise in that area. For example:

- A technical writer would handle documentation-related requests.

- An MLOps expert would address code reviews and explanations related to machine learning operations.

3. **Execution**: The assigned agent performs their task using their domain-specific skills and knowledge.

4. **Response Compilation**: Once the task is completed by the expert, their findings or outputs are compiled into a response for you.

5. **Delivery**: The final information is communicated back to you, providing detailed insights based on your initial request.

This approach ensures efficiency and accuracy in handling complex queries by leveraging specialized skills within an organization or team structure.

In my opinion, the GPT-4o supervisor produced much better results in this run.
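To compare the two supervisors’ answers side by side, it helps to pull only the supervisor’s final reply out of `result["messages"]` instead of printing the whole state with `print(result)`. The sketch below is a hypothetical helper, `final_reply`; it relies only on the `name`, `content` and `tool_calls` attributes that appear on the messages in the log, and the `Msg` dataclass is a stand-in used so the example runs without LangChain.

```python
from dataclasses import dataclass, field
from typing import Optional


def final_reply(messages, agent_name: str = "supervisor") -> Optional[str]:
    """Return the last plain-text reply written by `agent_name`.

    Works on any objects exposing `name` and `content` (and optionally
    `tool_calls`) attributes; returns None if no such reply exists.
    """
    for message in reversed(messages):
        if getattr(message, "name", None) != agent_name:
            continue
        if getattr(message, "tool_calls", None):
            continue  # skip handoff turns such as transfer_to_* tool calls
        content = getattr(message, "content", "")
        if isinstance(content, str) and content.strip():
            return content
    return None


@dataclass
class Msg:
    """Minimal stand-in for a LangChain message, for illustration only."""
    name: str
    content: str
    tool_calls: list = field(default_factory=list)


history = [
    Msg("supervisor", "", [{"name": "transfer_to_developer"}]),
    Msg("developer", "Code review ..."),
    Msg("supervisor", "Here is the explanation you asked for."),
]
print(final_reply(history))
```

With the real workflow, `final_reply(result["messages"])` should return the supervisor’s answer, assuming its messages carry `name='supervisor'` as they do in the Claude run’s log.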

AI Code Review Results

In Part 1 of our journey into automating code review and documentation using Large Language Models (LLMs), we’ve looked at how specialized agents can help make developers’ lives easier. By introducing a Developer Agent to handle code reviews and a Technical Writer Agent for documentation, we’ve laid the groundwork for automating tasks that are often seen as routine and tedious. These agents are overseen by a Supervisor Agent, which ensures the right tasks are given to the right experts, making the process smoother and more efficient.

This approach reduces the repetitive nature of these tasks, freeing developers to focus on more important and creative parts of their work. By using a team of agents coordinated by a Supervisor Agent, we’ve shown that it’s possible to automate not just writing code, but also the work around it, such as reviewing and documenting it.

We’ve also compared the results of two models in the supervisor role, Anthropic Claude 3.5 Sonnet and OpenAI GPT-4o, highlighting that both the choice of model and the way instructions are given to the agents are key to getting the right output. Getting these right helps ensure that tasks are completed correctly and that the results are relevant and clear to the user.

This lays the foundation for the next part of our exploration, where we will dive deeper into more complex agent collaborations and show how automation can further cut down manual work and improve productivity. We will also see how the system can easily be adjusted as code and policies change, so that it stays relevant and useful.

As we move forward, we’re excited to see how automating these tasks can give developers more time for innovation and problem-solving. Look out for Part 2, where we’ll explore how these agents, together with analysts and experts, can boost team performance and creativity even further.

Frequently Asked Questions

What is a supervisor-led AI agent system?

A supervisor-led AI agent system is a multi-agent architecture where one central agent (the “Supervisor”) manages a team of specialized agents. The Supervisor receives a high-level task, breaks it down, and delegates the sub-tasks to the appropriate specialist agent, such as a developer agent for code analysis or a writer agent for documentation.

How do the AI agents in this system work together?

The Supervisor Agent initiates the process by assigning a task to a specialist. In this article’s example, it first asks the Developer Agent to perform a code review. The Developer Agent completes its analysis and returns the results. The Supervisor then tasks the Technical Writer Agent with creating documentation based on those results. Finally, the Supervisor compiles all the information into a final answer for the user.
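The sequence described above can be sketched as plain function calls. This is a simplification under stated assumptions: the agents here are hypothetical stand-in functions rather than LLM-backed LangGraph agents, and the order is hard-coded, whereas in the article the supervisor model decides whom to call through handoff tools.

```python
from typing import Callable

# Hypothetical stand-ins for the LLM-backed agents: each takes a task
# string and returns text.
Agent = Callable[[str], str]


def developer_agent(task: str) -> str:
    return f"[code review] {task}"


def tech_writer_agent(task: str) -> str:
    return f"[documentation] {task}"


def supervisor(user_request: str, developer: Agent, writer: Agent) -> str:
    """Delegate in a fixed order and compile one answer for the user."""
    # 1. Ask the developer for a code review.
    review = developer(f"Review the code for: {user_request}")
    # 2. Give the writer the review so it can document the code.
    docs = writer("Write developer documentation based on this review:\n" + review)
    # 3. Combine both results into the final answer.
    return "\n\n".join([review, docs])


answer = supervisor("handoff.py", developer_agent, tech_writer_agent)
print(answer)
```

Note that the writer never sees the source file itself, only the developer’s review, which mirrors the division of responsibilities described in the next answer.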

Why use separate agents for AI code review and documentation?

Separating roles allows for greater specialization and security. The Developer Agent can be given tools to access sensitive code and company policies, while the Technical Writer Agent only receives the necessary explanations without direct access to the source files. This mimics a real-world workflow and follows the principle of least privilege.
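A minimal sketch of the least-privilege idea follows, assuming a simple per-agent allowlist. The tool names come from the article, but their bodies are placeholders, and the explicit check is illustrative: in LangGraph the same effect usually comes from giving each agent only its own list of tools when it is created. In this sketch the technical writer gets no file tools at all.

```python
from typing import Callable, Dict, Set

# Hypothetical tool bodies: the names come from the article, the
# implementations are placeholders that return fixed strings.
TOOLS: Dict[str, Callable[[], str]] = {
    "read_python_files": lambda: "<python source>",
    "read_policy_files": lambda: "<policy text>",
}

# Per-agent allowlists (principle of least privilege).
AGENT_TOOLS: Dict[str, Set[str]] = {
    "developer": {"read_python_files", "read_policy_files"},
    "tech_writer": set(),  # works only from the developer's explanations
}


def call_tool(agent: str, tool_name: str) -> str:
    """Run a tool only if it is on the calling agent's allowlist."""
    if tool_name not in AGENT_TOOLS.get(agent, set()):
        raise PermissionError(f"{agent} is not allowed to use {tool_name}")
    return TOOLS[tool_name]()


print(call_tool("developer", "read_python_files"))
try:
    call_tool("tech_writer", "read_python_files")
except PermissionError as err:
    denied_message = str(err)
print(denied_message)
```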

What is the purpose of giving “tools” to the AI agents?

Tools are functions that AI agents can call to interact with external resources. In this article, the Developer Agent uses a `read_python_files` tool to access the codebase and a `read_policy_files` tool to check for compliance. This makes the system modular and allows agents to perform actions beyond simple text generation.
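As an illustration, a tool like `read_python_files` could be as simple as a function that concatenates every Python file under a directory, labeling each with its path. This is a hypothetical implementation; the article’s own tool may use a different signature and output format.

```python
import tempfile
from pathlib import Path


def read_python_files(root: str) -> str:
    """Concatenate every .py file under `root`, each preceded by its path.

    Hypothetical implementation for illustration only.
    """
    parts = []
    for path in sorted(Path(root).rglob("*.py")):
        header = f"# File: {path.relative_to(root)}"
        parts.append(f"{header}\n{path.read_text(encoding='utf-8')}")
    return "\n\n".join(parts)


# Demo on a throwaway directory: only the .py file is returned.
with tempfile.TemporaryDirectory() as tmp:
    Path(tmp, "handoff.py").write_text("WHITESPACE_RE = None\n", encoding="utf-8")
    Path(tmp, "notes.txt").write_text("not python", encoding="utf-8")
    combined = read_python_files(tmp)

print(combined)
```

Wrapping such a function with the `@tool` decorator from `langchain_core.tools`, which uses the docstring as the tool description, is one way to make it callable by an agent.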