Key Takeaways

- Go Beyond Monolithic Agents: A single agent can be a generalist, but a team of specialized AI agents in a multi-agent AI system provides deeper, more comprehensive insights, similar to a real-world panel of experts.

- Interview-Based Architecture: This system uses a dynamic “interview” workflow where specialist AI personas (e.g., security, performance, compliance) are generated and then queried individually to provide focused feedback.

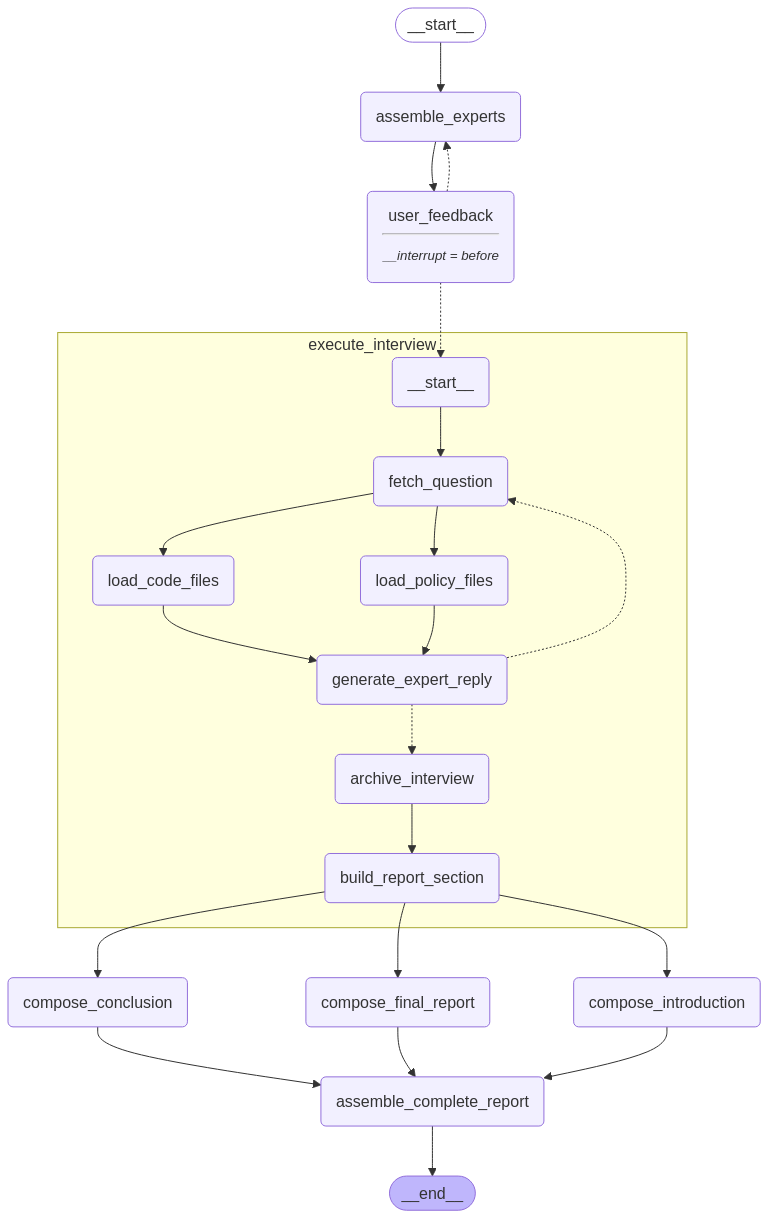

- LangGraph for Complex Collaboration: LangGraph is used to orchestrate the parallel interviews with each expert agent and then manage the final assembly of their individual reports into one cohesive document.

- Human-in-the-Loop for Quality Control: The system includes an interrupt step that allows a human to review and approve the generated expert personas before the main review process begins, ensuring the analysis is correctly focused.

In our previous article, we looked at a supervisor-based architecture where a manager agent coordinated specialized agents for code review and documentation. Today’s solution takes this concept further by implementing an interview-based multi-agent AI system where multiple expert personas collaborate to provide comprehensive insights.

This approach mimics real-world code reviews where different specialists (security experts, performance engineers, compliance officers) each bring unique perspectives to the table.

Multi-Agent AI System Approach

The multi-agent AI system uses LangGraph to orchestrate a workflow that:

- Dynamically creates expert personas based on the code review topic

- Conducts structured interviews with each expert

- Compiles their insights into a cohesive final report

Let’s break down how this works:

Experts Generation Phase

Rather than using fixed agent roles, the system creates tailored expert personas based on the specific code review topic/request.

Because the analyst personas shape most of what happens next, we add a human feedback step in this phase. This helps ensure the right mix of experts and comprehensive coverage of the review topic.

This dynamism lets the system adapt to different review needs without reconfiguration.
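The approve-or-regenerate cycle described above can be sketched in plain Python. This is a minimal illustration under stated assumptions, not the article's implementation: generate_experts and review_loop are hypothetical names, the stub replaces the LLM call, and a scripted iterator plays the human reviewer. In the real system, LangGraph's interrupt and checkpointer provide the pause between rounds.

```python
from typing import Callable, List


def generate_experts(topic: str, max_analysts: int, feedback: str) -> List[str]:
    """Hypothetical stand-in for the LLM call that creates analyst personas."""
    experts = [f"Analyst {i + 1} for '{topic}'" for i in range(max_analysts)]
    if feedback and experts:
        # Pretend the model honours the feedback by reshaping one persona.
        experts[-1] = f"Analyst shaped by feedback: {feedback}"
    return experts


def review_loop(topic: str, max_analysts: int,
                ask_human: Callable[[List[str]], str]) -> List[str]:
    """Regenerate personas until the human reviewer answers 'approve'."""
    feedback = ""
    while True:
        experts = generate_experts(topic, max_analysts, feedback)
        answer = ask_human(experts)  # In the article, this is the interrupt point.
        if answer.strip().lower() == "approve":
            return experts
        feedback = answer  # Anything else becomes guidance for the next round.


# Scripted reviewer: first asks for a change, then approves.
replies = iter(["Add in the CEO of gen ai native startup", "approve"])
approved = review_loop("frameworks used in the code", 3, lambda experts: next(replies))
for persona in approved:
    print(persona)
```

Keeping the human decision behind a plain callable makes the loop easy to test; the graph version replaces that callable with an interrupt and a state update.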

The Interview Workflow

Once the experts are generated, the system conducts interviews with each one:

- An analyst asks questions focused on their specific area;

- The system retrieves relevant code and policy files as context;

- The expert provides insights based strictly on the retrieved files;

- The conversation continues until the analyst ends the interview or the turn limit is reached;

- The system composes a report section based on the insights.

Experts must cite specific file references, ensuring their insights are grounded in the actual code and policies.
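Nothing in the pipeline enforces the citation rule; the prompt only asks for it. A possible post-check, sketched below with the hypothetical helper check_citations, flags body citations that have no source entry and source entries that name no retrieved file. It assumes the expert lists sources one per line in the form "[n] path", as the answer prompt requests.

```python
import re
from typing import Dict, List

# A body citation such as "[2]".
CITATION = re.compile(r"\[(\d+)\]")
# A source-list line such as "[2] policies/logging.txt" (assumed format).
SOURCE_LINE = re.compile(r"^\[(\d+)\][ \t]+(\S.*)$", re.MULTILINE)


def check_citations(answer: str, known_paths: List[str]) -> Dict[str, List[str]]:
    """Flag citations without a source entry and source entries naming unknown files."""
    sources = dict(SOURCE_LINE.findall(answer))  # number -> text of the source line
    body = SOURCE_LINE.sub("", answer)  # drop the source list before counting citations
    cited = sorted(set(CITATION.findall(body)), key=int)
    missing_sources = [n for n in cited if n not in sources]
    unknown_files = [n for n in sorted(sources, key=int)
                     if not any(path in sources[n] for path in known_paths)]
    return {"missing_sources": missing_sources, "unknown_files": unknown_files}


answer = """The handler logs request bodies [1], which the logging policy forbids [2].
Retries are unbounded [3].

[1] code_files/app.py
[2] policies/logging.txt"""

print(check_citations(answer, ["code_files/app.py", "policies/logging.txt"]))
# → {'missing_sources': ['3'], 'unknown_files': []}
```

A non-empty result could be fed back to the expert node as a follow-up question rather than silently accepted.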

Report Assembly

The system then combines individual expert reports into a comprehensive report with a proper introduction, body content, and conclusion.

Each section of the report (introduction, main body, conclusion) is prepared in parallel and then assembled into the final report.
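A minimal sketch of this assembly step, assuming three hypothetical writer functions in place of the LLM calls. ThreadPoolExecutor stands in for LangGraph's parallel branches, and the post-processing drops a duplicated report title and moves the sources list to the end, mirroring what the article's final node does. str.removeprefix requires Python 3.9 or later.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import List

TITLE = "## Code Review Insights"
SOURCES_HEADER = "\n## Sources\n"


# Hypothetical stand-ins for the three LLM-backed writers.
def write_introduction(memos: List[str]) -> str:
    return f"# Framework Review\n\n## Introduction\nThis report previews {len(memos)} findings."


def write_body(memos: List[str]) -> str:
    return TITLE + "\n" + "\n\n".join(memos) + SOURCES_HEADER + "[1] code_files/app.py"


def write_conclusion(memos: List[str]) -> str:
    return "## Conclusion\nThe findings above summarize the review."


def assemble_report(memos: List[str]) -> str:
    # Run the three writers concurrently; each reads the same memos.
    with ThreadPoolExecutor(max_workers=3) as pool:
        intro_f = pool.submit(write_introduction, memos)
        body_f = pool.submit(write_body, memos)
        conclusion_f = pool.submit(write_conclusion, memos)
        intro, body, conclusion = intro_f.result(), body_f.result(), conclusion_f.result()

    # Drop a duplicated title and move the sources list to the very end.
    body = body.removeprefix(TITLE).lstrip()
    sources = None
    if SOURCES_HEADER in body:
        body, sources = body.split(SOURCES_HEADER, 1)

    report = intro + "\n\n---\n\n" + body + "\n\n---\n\n" + conclusion
    if sources is not None:
        report += "\n\n## Sources\n" + sources
    return report


report = assemble_report(["Flask is used for routing [1].", "SQLAlchemy handles persistence [1]."])
print(report)
```

Moving the sources after the conclusion keeps a single references list at the bottom, even though the body writer produces it mid-document.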

Multi-Agent AI System Solution

Let’s now dive into the implementation of the solution.

First of all, we install the necessary dependencies:

```shell
pip install -q langchain_openai langgraph langchain-core langchain-community
```

Import the libraries and initialize the LLM (we use OpenAI's GPT-4o in this article):

```python
import os
import operator
import glob

from pydantic import BaseModel, Field
from typing import Annotated, List
from typing_extensions import TypedDict
from IPython.display import Image, display

from langchain_core.messages import AIMessage, HumanMessage, SystemMessage, get_buffer_string
from langchain_openai import ChatOpenAI
from langgraph.types import Send
from langgraph.graph import END, MessagesState, START, StateGraph
from langgraph.checkpoint.memory import MemorySaver

os.environ['OPENAI_API_KEY'] = '<YOUR_API_KEY>'

# Initialize our LLM (Language Model) with specific settings.
chatLLM = ChatOpenAI(model="gpt-4o", temperature=0)
```

Because the nodes of the LangGraph graph depend on each other's outputs, we define structured output models for the data they exchange. I prefer the Pydantic approach because it is clean and clear.

```python
# Data Models & Type Definitions

# Define a model for representing an expert with specific attributes.
class ReviewExpert(BaseModel):
    affiliation: str = Field(description="Primary affiliation of the expert.")
    name: str = Field(description="Name of the expert.")
    role: str = Field(description="Role of the expert in the context of the topic.")
    description: str = Field(description="Description of the expert focus including concerns such as code quality, security, compliance with company guidelines, etc.")

    @property
    def profile(self) -> str:
        """Create a string representation of the expert's profile."""
        return f"Name: {self.name}\nRole: {self.role}\nAffiliation: {self.affiliation}\nFocus: {self.description}\n"

# Define a model for handling multiple experts' perspectives.
class ExpertPerspectives(BaseModel):
    analysts: List[ReviewExpert] = Field(description="Comprehensive list of experts with their roles and affiliations.")

# Define a type for capturing the state required to generate review experts.
class GenerateReviewState(TypedDict):
    topic: str  # The code review / documentation topic provided by the user.
    max_analysts: int  # Number of experts to be generated.
    human_analyst_feedback: str  # Optional feedback from a human to guide expert creation.
    analysts: List[ReviewExpert]  # List of generated experts.

# Define a type for managing the interview dialog state.
class InterviewDialogState(MessagesState):
    max_num_turns: int  # Number of turns in the conversation.
    context: Annotated[list, operator.add]  # Source documents such as code files and policies.
    analyst: ReviewExpert  # The expert conducting the interview.
    interview: str  # The transcript of the interview.
    sections: list  # Final sections for the report.

# Define a type for the overall review graph state.
class ReviewGraphState(TypedDict):
    topic: str  # Overall topic for code review/documentation.
    max_analysts: int  # Number of experts.
    human_analyst_feedback: str  # Feedback for refining sub-topics.
    analysts: List[ReviewExpert]  # List of generated experts.
    sections: Annotated[list, operator.add]  # Interview sections for the final report.
    introduction: str  # Introduction of the report.
    content: str  # Main content of the report.
    conclusion: str  # Conclusion of the report.
    final_report: str  # Consolidated final report.
```

Now we can carry on implementing the main logic.

Our multi-agent AI system consists of two parts:

- the interview subgraph, where an analyst interviews an expert who knows the code;

- the report-assembly part of the main graph, where the technical writer combines all interviews into the final report.

The interview flow is simple: the first virtual persona (the analyst) asks the second virtual persona (the expert) questions about the code that match the analyst’s areas of interest.

We cap the number of expert replies per interview at 2 for now. You can change this default in the determine_next_action function by updating the following line:

```python
max_turns = state.get('max_num_turns', 2)
```

The interview ends when the analyst is satisfied with the replies (and closes with "Thank you so much for your help!") or when the turn limit has been reached.
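The stopping rule can be exercised without an LLM. In this sketch, Msg is a hypothetical stand-in for LangChain message objects (the article's code checks for AIMessage instances named "expert"), and next_step mirrors the logic of determine_next_action: archive once the expert has replied max_turns times, or as soon as the analyst's last question contains the closing phrase.

```python
from dataclasses import dataclass
from typing import List

CLOSING_PHRASE = "Thank you so much for your help"


@dataclass
class Msg:
    """Hypothetical stand-in for a LangChain message: who spoke and what they said."""
    speaker: str  # "human", "analyst" or "expert"
    content: str


def next_step(messages: List[Msg], max_turns: int = 2) -> str:
    """Stop at the turn limit or when the analyst says goodbye; otherwise ask again."""
    expert_replies = sum(1 for m in messages if m.speaker == "expert")
    if expert_replies >= max_turns:
        return "archive_interview"
    # messages[-1] is the latest expert reply; messages[-2] is the question it answered.
    if len(messages) >= 2 and CLOSING_PHRASE in messages[-2].content:
        return "archive_interview"
    return "fetch_question"


history = [
    Msg("human", "You are conducting a code review for the topic 'frameworks'."),
    Msg("analyst", "Which web framework does the service use?"),
    Msg("expert", "It uses Flask [1]."),
]
print(next_step(history))  # → fetch_question

history += [Msg("analyst", "How are requests authenticated?"), Msg("expert", "Via API tokens [2].")]
print(next_step(history))  # → archive_interview
```

Checking the turn count first means a chatty analyst can never keep the loop running past the limit, even if it forgets the closing phrase.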

The interview transcript will be saved to the graph state so the technical writer can use this context to compose the final report.

At the end of the interview, the conversation is summarized in a report section.

For each run, we decide how many experts to generate and what the analysis should focus on. The interview subgraph is launched once for each expert, and the interviews run in parallel.

When all the interviews are finished, we launch the assembly part of the graph, where the technical writer persona analyses the interviews and creates the final report.

The different sections of the report are created in parallel to save time.
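Running the interviews in parallel is why ReviewGraphState declares sections as Annotated[list, operator.add]: each branch returns its own one-element sections list, and the reducer concatenates the lists instead of keeping only one of them. The sketch below imitates that fan-out/fan-in in plain Python; run_interview is a hypothetical stand-in for a compiled interview subgraph, and ThreadPoolExecutor replaces LangGraph's Send-based scheduling.

```python
import operator
from concurrent.futures import ThreadPoolExecutor
from functools import reduce
from typing import Dict, List


def run_interview(analyst: str, topic: str) -> Dict[str, List[str]]:
    """Hypothetical stand-in for one interview subgraph run; returns a partial state update."""
    return {"sections": [f"## {analyst} findings\nNotes on {topic}."]}


def fan_out_fan_in(analysts: List[str], topic: str) -> List[str]:
    # Fan out: one interview per analyst, run concurrently.
    with ThreadPoolExecutor() as pool:
        updates = list(pool.map(lambda analyst: run_interview(analyst, topic), analysts))
    # Fan in: fold the partial "sections" lists with operator.add, the same reducer
    # that ReviewGraphState attaches via Annotated[list, operator.add].
    return reduce(operator.add, (u["sections"] for u in updates), [])


sections = fan_out_fan_in(["Security", "Performance", "Licensing"], "frameworks")
print(len(sections))  # → 3
```

Here Executor.map keeps the input order; the point of the sketch is the merge step, not any ordering guarantee of the real graph.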

If you are new to LangGraph, please read this part in the official documentation. It will help you understand the concepts we are discussing below.

Let’s create a few functions that we will use as nodes in the graphs:

```python
# Defining Node Functions

# Template for creating expert personas with specific code review instructions.
reviewer_instructions_template = """
You are tasked with creating a set of AI analyst personas for a code review and documentation of the code.

First, review the provided code review and documentation topics to cover: {topic}

Examine any human feedback that has been optionally provided to guide creation of the analysts: {human_analyst_feedback}

Determine the most interesting sub-topics based upon the code review topic.

Pick the top {max_analysts} sub-topics.

Assign one analyst to each sub-topic, where each analyst’s persona reflects a focus on one of these areas."""


def assemble_experts(state: GenerateReviewState):
    """Create expert personas based on code review topics and optional feedback."""
    subject = state['topic']  # The topic to focus on.
    expert_count = state['max_analysts']  # Number of experts to generate.
    feedback = state.get('human_analyst_feedback', '')  # Optional feedback for guidance.

    # Use LLM to generate structured expert personas.
    structured_model = chatLLM.with_structured_output(ExpertPerspectives)
    sys_msg = reviewer_instructions_template.format(topic=subject,
                                                    human_analyst_feedback=feedback,
                                                    max_analysts=expert_count)
    experts = structured_model.invoke([SystemMessage(content=sys_msg)] +
                                      [HumanMessage(content="Generate the set of analysts.")])
    return {"analysts": experts.analysts}


def user_feedback(state: GenerateReviewState):
    """Placeholder node for human feedback intervention."""
    pass


# Template for generating questions during the interview.
question_instructions = """
You are an analyst tasked with interviewing an expert on code quality and documentation.

Your goal is to extract interesting and specific insights on your assigned focus.

Here is your area of focus and the goals associated with it: {goals}

Begin by introducing yourself appropriately (using a fitting name for your persona), then ask your question related to the assigned focus.

Continue with the interview to drill down on critical aspects. When you are satisfied with your understanding, end the interview with: "Thank you so much for your help!"

Remain in character and ensure that your questions are clear and detailed."""


def create_interview_query(state: InterviewDialogState):
    """
    Generate a question for a code review interview.

    Args:
        state (InterviewDialogState): The current interview state containing message history and context.

    Returns:
        dict: Dictionary containing newly generated interview question messages.
    """
    reviewer = state["analyst"]  # Analyst persona conducting the interview.
    msgs = state["messages"]  # Message history for the interview dialog.

    # Format system message with reviewer information and generate a question.
    sys_msg = question_instructions.format(goals=reviewer.profile)
    interview_query = chatLLM.invoke([SystemMessage(content=sys_msg)] + msgs)
    return {"messages": [interview_query]}


def retrieve_code_files(state: InterviewDialogState):
    """
    Retrieve Python code files from the 'code_files' directory.

    Args:
        state (InterviewDialogState): The current interview state, used to update context.

    Returns:
        dict: Dictionary containing code content in a formatted context string.
    """
    python_paths = glob.glob("code_files/*.py")  # List of file paths for Python code files.
    file_snippets = []  # Collection for storing formatted file content.
    for path in python_paths:  # Iterate over each Python file path.
        try:
            with open(path, 'r', encoding='utf-8') as f:  # Open each file for reading.
                content = f.read()  # Read the file content.
            file_snippets.append(f'<CodeFile path="{path}">\n{content}\n</CodeFile>')  # Format and store file content.
        except Exception as err:
            file_snippets.append(f'<CodeFile path="{path}">Error reading file: {err}</CodeFile>')  # Store error message if file fails.

    # Combine all formatted file snippets with separators.
    joined_codes = "\n\n---\n\n".join(file_snippets)
    return {"context": [joined_codes]}


def retrieve_policy_files(state: InterviewDialogState):
    """
    Retrieve company policy files from the 'policies' directory.

    Args:
        state (InterviewDialogState): The current interview state, used to update context.

    Returns:
        dict: Dictionary containing policy content in a formatted context string.
    """
    policy_paths = glob.glob("policies/*.txt")  # List of file paths for policy text files.
    policy_snippets = []  # Collection for storing formatted policy content.
    for path in policy_paths:  # Iterate over each policy file path.
        try:
            with open(path, 'r', encoding='utf-8') as f:  # Open each file for reading.
                content = f.read()  # Read the file content.
            policy_snippets.append(f'<PolicyFile path="{path}">\n{content}\n</PolicyFile>')  # Format and store file content.
        except Exception as err:
            policy_snippets.append(f'<PolicyFile path="{path}">Error reading file: {err}</PolicyFile>')  # Store error message if file fails.

    # Combine all formatted policy snippets with separators.
    joined_policies = "\n\n---\n\n".join(policy_snippets)
    return {"context": [joined_policies]}


# Template for generating expert responses during the interview.
# The {context} placeholder is required: without it, str.format() silently
# drops the retrieved files and the expert never sees them.
answer_instructions = """You are an expert in code quality and corporate compliance being interviewed by an analyst.

Here is the analyst’s focus area: {goals}

Your task is to answer the question posed by the interviewer using the context provided below. The context includes code file excerpts and company policies.

Guidelines:

Use only the information provided in the context. Do not add external assumptions beyond what is stated.

Reference specific excerpts by citing the <CodeFile> or <PolicyFile> tags (e.g., [1], [2]).

List your sources at the end of your answer in order (e.g., [1] CodeFile path, [2] PolicyFile path).

Answer the question clearly and concisely.

Context:

{context}"""


def create_expert_response(state: InterviewDialogState):
    """
    Generate an expert response based on the question and context.

    Args:
        state (InterviewDialogState): The current interview state containing context and message history.

    Returns:
        dict: Dictionary containing newly generated expert response messages.
    """
    reviewer = state["analyst"]  # Analyst whose focus area frames the answer.
    msgs = state["messages"]  # Message history including the latest question.
    # Overall context for the interview (code files, policy texts), joined into one string.
    context_block = "\n\n".join(state["context"])

    # Compose a system message with the analyst's focus and the retrieved context.
    sys_msg = answer_instructions.format(goals=reviewer.profile, context=context_block)

    # Invoke the LLM to generate an expert response based on the input question and context.
    expert_reply = chatLLM.invoke([SystemMessage(content=sys_msg)] + msgs)

    # Set the name of the reply message to "expert" for tracking.
    expert_reply.name = "expert"
    return {"messages": [expert_reply]}


def preserve_interview_transcript(state: InterviewDialogState):
    """
    Save the complete interview transcript.

    Args:
        state (InterviewDialogState): Current state containing message history and interview details.

    Returns:
        dict: Dictionary containing the saved interview transcript.
    """
    msgs = state["messages"]  # Messages from the interview session.

    # Build the complete transcript from the message buffer.
    transcript = get_buffer_string(msgs)
    return {"interview": transcript}


def determine_next_action(state: InterviewDialogState, name: str = "expert"):
    """
    Decide whether to ask another question or archive the interview transcript.

    Args:
        state (InterviewDialogState): Current interview state including message history and max number of turns.
        name (str, optional): Specifies the name to identify response messages. Defaults to 'expert'.

    Returns:
        str: Indicates the next action (either 'fetch_question' or 'archive_interview').
    """
    msgs = state["messages"]  # Messages from the interview session.
    max_turns = state.get('max_num_turns', 2)  # Maximum number of expert replies allowed.

    # Count the number of messages from the AI labeled with the name 'expert'.
    response_count = len([m for m in msgs if isinstance(m, AIMessage) and m.name == name])

    # Determine if the interview should be archived or more questions asked.
    if response_count >= max_turns:
        return "archive_interview"

    last_query = msgs[-2]  # The most recent question (msgs[-1] is the expert's reply).
    if "Thank you so much for your help" in last_query.content:
        return "archive_interview"
    return "fetch_question"


# Template for writing report sections based on provided sources.
section_writer_instructions = """You are an expert technical writer specialized in corporate documentation and code review reports.

Your task is to create a clear and well-structured section of a report based on the provided source documents from the interview (including code excerpts and policy documents).

Follow these guidelines:

Analyze the documents. Each document is introduced with a tag (e.g., <CodeFile> or <PolicyFile>).

Create a report section using Markdown formatting:

Use '##' for the section title.

Use '###' for sub-section headers.

The report section should include:

a. A compelling title related to the analyst’s focus: {focus}

b. A summary discussing key observations.

c. A numbered list referencing the source documents (e.g., [1], [2]), ensuring no redundant citations.

d. A “Sources” subsection listing full file paths for each source.

Aim for clarity and conciseness (approximately 400 words maximum).

Make sure the final output is in proper Markdown format with no preamble."""


def compose_report_section(state: InterviewDialogState):
    """
    Write a documentation section based on a completed interview.

    Args:
        state (InterviewDialogState): Current interview state containing the transcript and context.

    Returns:
        dict: Dictionary containing the newly composed report section.
    """
    interview_text = state["interview"]  # Full transcript of the interview.
    context_block = "\n\n".join(state["context"])  # Retrieved code and policy files.
    reviewer = state["analyst"]  # Analyst whose focus shapes the section.

    # Compose a message with specific instructions to compose the report section.
    sys_msg = section_writer_instructions.format(focus=reviewer.description)

    # Generate the document section from the sources and the interview transcript.
    section = chatLLM.invoke([SystemMessage(content=sys_msg)] +
                             [HumanMessage(content=f"Use these sources to write your section:\n{context_block}\n\nInterview transcript:\n{interview_text}")])
    return {"sections": [section.content]}
```

And create the interview subgraph:

```python
# Building the Interview Flow Graph

# Create our interview flow graph using the InterviewDialogState.
interview_flow_graph = StateGraph(InterviewDialogState)

# Add individual processing nodes to the graph.
interview_flow_graph.add_node("fetch_question", create_interview_query)
interview_flow_graph.add_node("load_code_files", retrieve_code_files)
interview_flow_graph.add_node("load_policy_files", retrieve_policy_files)
interview_flow_graph.add_node("generate_expert_reply", create_expert_response)
interview_flow_graph.add_node("archive_interview", preserve_interview_transcript)
interview_flow_graph.add_node("build_report_section", compose_report_section)

# Define the flow edges indicating possible execution paths.
interview_flow_graph.add_edge(START, "fetch_question")  # Start from fetching a question.
interview_flow_graph.add_edge("fetch_question", "load_code_files")  # After fetching a question, load code files.
interview_flow_graph.add_edge("fetch_question", "load_policy_files")  # Load policy files in parallel.
interview_flow_graph.add_edge("load_code_files", "generate_expert_reply")  # Generate expert reply using loaded files.
interview_flow_graph.add_edge("load_policy_files", "generate_expert_reply")  # Generate reply after loading policies.

# Conditional edge based on the dynamic decision from determine_next_action() function.
interview_flow_graph.add_conditional_edges("generate_expert_reply", determine_next_action, ['fetch_question', 'archive_interview'])
interview_flow_graph.add_edge("archive_interview", "build_report_section")  # Write the report section from the archived transcript.
interview_flow_graph.add_edge("build_report_section", END)  # End of the flow.
```

We need a few functions that will become nodes in the final report preparation part of the main graph.

```python
# Final Report Composition Functions

# Template for writing the main body of the final report.
report_writer_instructions = """You are a technical writer preparing a final report on the code review and documentation topic:

{topic}

You have a collection of memos written by a team of analysts, each summarizing their interview with an expert on a specific sub-topic of the code review.

Your task:

Consolidate these memos into a cohesive narrative detailing the overall findings. The report must adhere to corporate documentation standards and use Markdown formatting.

The report should start with a single title header: "## Code Review Insights" and include a "## Sources" section at the end listing all the unique files referenced.

Do not mention the analyst names. Preserve all citations coming from memo text in the format [1], [2], etc.

Here are the memos provided: {context}"""


def compose_final_report(state: ReviewGraphState):
    """
    Compose the main body of the final code review report.

    Args:
        state (ReviewGraphState): State containing report sections and meta-information.

    Returns:
        dict: Dictionary containing the composed report content.
    """
    sections_list = state["sections"]  # List of generated report sections for each interview.
    subject = state["topic"]  # Topic of the code review overall.
    concatenated_sections = "\n\n".join(sections_list)  # Join all sections with line breaks.

    # Compose the final report body including concatenated sections.
    sys_msg = report_writer_instructions.format(topic=subject, context=concatenated_sections)

    # Use the LLM to generate consolidated final report content.
    report = chatLLM.invoke([SystemMessage(content=sys_msg)] +
                            [HumanMessage(content="Write a report based upon these memos.")])
    return {"content": report.content}


# Instructions for creating introduction and conclusion sections.
intro_conclusion_instructions = """You are a technical writer finalizing a code review report on the topic: {topic}

You will be given all the sections of the report. Your job is to write an introduction or conclusion section as instructed below.

Guidelines:

For the introduction, create a compelling title with the '#' header and use '## Introduction' as the section header.

For the conclusion, use '## Conclusion' as the section header.

Aim for around 100 words and be crisp in previewing (for introduction) or summarizing (for conclusion) the contents below.

Use Markdown formatting and include no extra preamble. Here are the consolidated sections to reflect on: {formatted_str_sections}"""


def compose_introduction(state: ReviewGraphState):
    """
    Write the introduction section for the report.

    Args:
        state (ReviewGraphState): State containing sections to contextualize the introduction.

    Returns:
        dict: Dictionary containing the composed introduction section content.
    """
    sections_list = state["sections"]  # All sections previously created.
    subject = state["topic"]  # The main topic related to the code review.
    all_sections = "\n\n".join(sections_list)  # Concatenate all sections for reference.

    # Configure specific instructions for writing the introduction section.
    instructions = intro_conclusion_instructions.format(topic=subject, formatted_str_sections=all_sections)

    # Use the LLM to generate the introduction section content.
    intro = chatLLM.invoke([SystemMessage(content=instructions)] + [HumanMessage(content="Write the report introduction")])
    return {"introduction": intro.content}


def compose_conclusion(state: ReviewGraphState):
    """
    Write the conclusion section for the report.

    Args:
        state (ReviewGraphState): State containing sections to contextualize the conclusion.

    Returns:
        dict: Dictionary containing the composed conclusion section content.
    """
    sections_list = state["sections"]  # All sections previously created.
    subject = state["topic"]  # The main topic related to the code review.
    all_sections = "\n\n".join(sections_list)  # Concatenate all sections for reference.

    # Configure specific instructions for writing the conclusion section.
    instructions = intro_conclusion_instructions.format(topic=subject, formatted_str_sections=all_sections)

    # Use the LLM to generate the conclusion section content.
    conclusion = chatLLM.invoke([SystemMessage(content=instructions)] + [HumanMessage(content="Write the report conclusion")])
    return {"conclusion": conclusion.content}


def assemble_complete_report(state: ReviewGraphState):
    """
    Combine introduction, content, and conclusion into the final report.

    Args:
        state (ReviewGraphState): State containing introduction, content, and conclusion details.

    Returns:
        dict: Dictionary containing the full finalized report.
    """
    body_content = state["content"]  # The main content of the report.

    # Remove the header if it erroneously exists at the start of the content.
    # removeprefix (Python 3.9+) drops the exact prefix; str.strip() would instead
    # remove any of the header's characters from both ends of the text.
    body_content = body_content.removeprefix("## Code Review Insights").lstrip()

    # Extract the list of sources if it exists within the content.
    srcs = None
    if "\n## Sources\n" in body_content:
        body_content, srcs = body_content.split("\n## Sources\n", 1)

    # Assemble the complete report with introduction, body content, and conclusion.
    comp_report = state["introduction"] + "\n\n---\n\n" + body_content + "\n\n---\n\n" + state["conclusion"]

    # Append sources section if it was extracted earlier.
    if srcs is not None:
        comp_report += "\n\n## Sources\n" + srcs
    return {"final_report": comp_report}
```

And we have everything ready to create the main graph. In the code below, you can see all the functions we created above.

Note that we launch one interview subgraph for each of the analysts we have created (the 'execute_interview' node).

Another critical aspect is how the 'user_feedback' node connects to the others. This node receives and stores the human review results in the graph. After the graph starts, the system assembles the initial set of experts. When the list is ready, we interrupt graph execution before the 'user_feedback' node so a human can look at the personas and provide feedback.

If the human provides anything other than ‘approve’, the expert personas are regenerated according to the feedback.

Once the experts have been regenerated and the human reviewer approves them, the interviews are performed.

The technical writer persona takes care of the final report at the end of the graph.

Let’s look at the main graph:

```python
# Building the Main Review Graph

# Route after the human review: go back to expert creation unless the feedback is "approve";
# otherwise launch one interview subgraph per analyst in parallel via Send.
def initiate_all_interviews(state: ReviewGraphState):
    if state.get('human_analyst_feedback', 'approve').lower() != 'approve':
        return "assemble_experts"
    return [
        Send("execute_interview", {
            "analyst": analyst,
            "messages": [HumanMessage(content=f"You are conducting a code review, explanation and documentation for the topic '{state['topic']}'.")],
        })
        for analyst in state["analysts"]
    ]

# Create and setup the main review graph using ReviewGraphState.
review_main_graph = StateGraph(ReviewGraphState)

# Add nodes to the main graph.
review_main_graph.add_node("assemble_experts", assemble_experts)
review_main_graph.add_node("user_feedback", user_feedback)
review_main_graph.add_node("execute_interview", interview_flow_graph.compile())
review_main_graph.add_node("compose_final_report", compose_final_report)
review_main_graph.add_node("compose_introduction", compose_introduction)
review_main_graph.add_node("compose_conclusion", compose_conclusion)
review_main_graph.add_node("assemble_complete_report", assemble_complete_report)

# Define the sequence of execution by specifying edges within the main graph.
review_main_graph.add_edge(START, "assemble_experts")
review_main_graph.add_edge("assemble_experts", "user_feedback")

# Initiate all interviews. If human feedback is not "approve", go back to re-create experts.
review_main_graph.add_conditional_edges("user_feedback", initiate_all_interviews, ["assemble_experts", "execute_interview"])

review_main_graph.add_edge("execute_interview", "compose_final_report")
review_main_graph.add_edge("execute_interview", "compose_introduction")
review_main_graph.add_edge("execute_interview", "compose_conclusion")
review_main_graph.add_edge(["compose_conclusion", "compose_final_report", "compose_introduction"], "assemble_complete_report")
review_main_graph.add_edge("assemble_complete_report", END)

# Compile the main graph.
memory = MemorySaver()  # Initialize memory saver for checkpoints.
state_process_graph = review_main_graph.compile(interrupt_before=['user_feedback'], checkpointer=memory)

# Render and display the compiled state process graph as an image.
display(Image(state_process_graph.get_graph(xray=1).draw_mermaid_png()))
```

The main graph image:

In the image above, dotted arrows represent conditional connections.

Multi-Agent AI System Testing

Finally, we get to test our application:

```python
# Test inputs
max_experts = 5
topic = "The analysis of frameworks used in the code and comparison to their main alternatives."
session_thread = {"configurable": {"thread_id": "2"}}

# Run the graph until the first interruption (the human feedback node)
for event in state_process_graph.stream({"topic": topic, "max_analysts": max_experts}, session_thread, stream_mode="values"):
    experts_list = event.get('analysts', '')
    if experts_list:
        for expert in experts_list:
            print(f"Name: {expert.name}")
            print(f"Affiliation: {expert.affiliation}")
            print(f"Role: {expert.role}")
            print(f"Description: {expert.description}")
            print("-" * 50)
```

The output:

Name: Dr. Emily Carter

Affiliation: Open Source Software Foundation

Role: Framework Compatibility Specialist

Description: Dr. Carter focuses on ensuring that the frameworks used in the code are compatible with the latest industry standards and practices. She evaluates the frameworks' ability to integrate with other technologies and their adaptability to future updates.

--------------------------------------------------

Name: Mr. Raj Patel

Affiliation: Tech Security Alliance

Role: Security Framework Analyst

Description: Mr. Patel specializes in analyzing the security features of the frameworks used in the code. He assesses the frameworks for potential vulnerabilities and their compliance with security protocols to protect against cyber threats.

--------------------------------------------------

Name: Ms. Linda Zhang

Affiliation: Enterprise Software Solutions

Role: Performance Optimization Expert

Description: Ms. Zhang is dedicated to evaluating the performance efficiency of the frameworks. She examines how the frameworks impact the overall speed and resource consumption of the application, ensuring optimal performance under various conditions.

--------------------------------------------------

Name: Dr. Michael Green

Affiliation: Green Code Initiative

Role: Sustainability and Resource Efficiency Analyst

Description: Dr. Green focuses on the environmental impact of the frameworks used in the code. He assesses the frameworks for their energy consumption and resource efficiency, promoting sustainable coding practices.

--------------------------------------------------

Name: Ms. Sofia Hernandez

Affiliation: Global Tech Compliance Group

Role: Compliance and Licensing Specialist

Description: Ms. Hernandez ensures that the frameworks comply with legal and licensing requirements. She reviews the frameworks for adherence to open-source licenses and corporate compliance standards, mitigating legal risks.

--------------------------------------------------We can provide the human feedback:

```python
# Simulate human feedback at the user_feedback node.
state_process_graph.update_state(
    session_thread,
    {"human_analyst_feedback": "Add in the CEO of gen ai native startup"},
    as_node="user_feedback",
)

# Resume the graph (passing None continues from the saved checkpoint) and check the experts.
for event in state_process_graph.stream(None, session_thread, stream_mode="values"):
    experts_list = event.get("analysts")
    if experts_list:
        for expert in experts_list:
            print(f"Name: {expert.name}")
            print(f"Affiliation: {expert.affiliation}")
            print(f"Role: {expert.role}")
            print(f"Description: {expert.description}")
            print("-" * 50)
```

The output:

Name: Dr. Emily Carter

Affiliation: Open Source Software Foundation

Role: Framework Compatibility Specialist

Description: Dr. Carter focuses on ensuring that the frameworks used in the code are compatible with the latest industry standards and practices. She evaluates the frameworks' ability to integrate with other technologies and their adaptability to future updates.

--------------------------------------------------

Name: Mr. Raj Patel

Affiliation: Tech Security Alliance

Role: Security Framework Analyst

Description: Mr. Patel specializes in analyzing the security features of the frameworks used in the code. He assesses the frameworks for potential vulnerabilities and their compliance with security protocols to protect against cyber threats.

--------------------------------------------------

Name: Ms. Linda Zhang

Affiliation: Enterprise Software Solutions

Role: Performance Optimization Expert

Description: Ms. Zhang is dedicated to evaluating the performance efficiency of the frameworks. She examines how the frameworks impact the overall speed and resource consumption of the application, ensuring optimal performance under various conditions.

--------------------------------------------------

Name: Dr. Michael Green

Affiliation: Green Code Initiative

Role: Sustainability and Resource Efficiency Analyst

Description: Dr. Green focuses on the environmental impact of the frameworks used in the code. He assesses the frameworks for their energy consumption and resource efficiency, promoting sustainable coding practices.

--------------------------------------------------

Name: Ms. Sofia Hernandez

Affiliation: Global Tech Compliance Group

Role: Compliance and Licensing Specialist

Description: Ms. Hernandez ensures that the frameworks comply with legal and licensing requirements. She reviews the frameworks for adherence to open-source licenses and corporate compliance standards, mitigating legal risks.

--------------------------------------------------

Name: Dr. Emily Carter

Affiliation: Open Source Software Foundation

Role: Framework Specialist

Description: Dr. Carter focuses on the analysis of frameworks used in the code, evaluating their suitability, performance, and integration capabilities. She is particularly interested in how these frameworks compare to their main alternatives in terms of community support and long-term viability.

--------------------------------------------------

Name: Raj Patel

Affiliation: Tech Security Solutions Inc.

Role: Security Analyst

Description: Raj Patel specializes in assessing the security implications of the frameworks used in the code. His analysis includes identifying potential vulnerabilities and comparing the security features of the chosen frameworks against their alternatives.

--------------------------------------------------

Name: Sophia Martinez

Affiliation: GreenTech Innovations

Role: Sustainability Advocate

Description: Sophia Martinez evaluates the environmental impact of the frameworks used, focusing on their energy efficiency and resource consumption. She compares these aspects with alternative frameworks to recommend the most sustainable options.

--------------------------------------------------

Name: Alex Kim

Affiliation: Gen AI Native Startup

Role: CEO

Description: Alex Kim provides insights from a business perspective, focusing on the strategic implications of framework choices. He evaluates how these frameworks align with the company's goals, scalability needs, and innovation potential compared to their alternatives.

--------------------------------------------------

Name: Linda Zhao

Affiliation: Code Compliance Group

Role: Compliance Officer

Description: Linda Zhao ensures that the frameworks used comply with industry standards and regulations. She compares the compliance features of the chosen frameworks with their alternatives to ensure adherence to legal and ethical guidelines.

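--------------------------------------------------

The first five entries are the original personas: with `stream_mode="values"`, the resumed stream first emits the current state before the graph regenerates the panel. The last five are the regenerated panel, which now includes the CEO of a GenAI-native startup (Alex Kim). We are satisfied with that, so we approve it: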

```python
# Approve the current panel of experts.
state_process_graph.update_state(session_thread, {"human_analyst_feedback": "approve"}, as_node="user_feedback")

# Continue processing the rest of the workflow.
for upd in state_process_graph.stream(None, session_thread, stream_mode="updates"):
    print("--Node Executed--")
    # In "updates" mode, each chunk is a dict keyed by the node that just ran.
    node_executed = next(iter(upd.keys()))
    print(node_executed)
```

The output:

--Node Executed--

execute_interview

--Node Executed--

execute_interview

--Node Executed--

execute_interview

--Node Executed--

execute_interview

--Node Executed--

execute_interview

--Node Executed--

compose_introduction

--Node Executed--

compose_conclusion

--Node Executed--

compose_final_report

--Node Executed--

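assemble_complete_report

The five `execute_interview` runs are the interviews, one per approved expert; the remaining nodes compose the report's sections and assemble them. The final node of the main graph has run, and the report is ready. Let's look at it: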

```python
from IPython.display import Markdown

final_state = state_process_graph.get_state(session_thread)
final_report = final_state.values.get("final_report")

# Render the final report as Markdown (as the last expression of a notebook cell).
Markdown(final_report)
```

The output:

Looks good!
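The node sequence printed earlier ends with `assemble_complete_report`, which stitches the separately composed sections into the single `final_report` string that the display code reads. The article does not show that node's body, so the function below is a hypothetical stand-in: it assumes the state is a plain dict holding Markdown strings under the illustrative keys `introduction`, `content`, and `conclusion`, plus an optional `sources` list. It is a sketch of the idea, not the author's implementation.

```python
def assemble_complete_report(state: dict) -> dict:
    """Hypothetical stand-in for the assemble_complete_report node.

    Assumes the state holds Markdown strings under the illustrative keys
    'introduction', 'content', and 'conclusion', plus an optional list of
    source strings under 'sources'.
    """
    parts = [
        state["introduction"].strip(),
        state["content"].strip(),
        state["conclusion"].strip(),
    ]
    sources = state.get("sources") or []
    if sources:
        # Gather all sources into one section at the end of the report.
        source_lines = "\n".join(f"- {src}" for src in sources)
        parts.append(f"## Sources\n{source_lines}")
    # A blank line between sections makes them render as separate blocks.
    # Returning a partial state update mirrors how LangGraph nodes work.
    return {"final_report": "\n\n".join(parts)}


if __name__ == "__main__":
    demo_state = {
        "introduction": "# Framework Review\n\nWhy this panel of experts was consulted.",
        "content": "## Insights\n\nSecurity, performance, and compliance findings.",
        "conclusion": "## Conclusion\n\nKey recommendations.",
        "sources": ["Interview with the security analyst"],
    }
    print(assemble_complete_report(demo_state)["final_report"])
```

Keeping assembly in its own node means the section writers never need to know about each other; only this last step decides the order and layout.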

Conclusion

This article series presented a stepwise path from a simple automated system to a highly specialized and integrated multi-agent AI system.

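As the walkthrough shows, splitting a review across dynamically generated expert personas, with a human checkpoint on who those experts are, has real potential to change how code and documentation reviews are handled.

This approach lets individuals and teams turn tedious review work into a focused analysis from several expert perspectives.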

Ready for the next step on this journey into intelligent automation? Stay tuned for more insights and methodologies to inspire your AI-driven innovation endeavors :).

And may the Force be with you 🙂

Frequently Asked Questions

What is a multi-agent AI system?

A multi-agent AI system is a collection of individual, specialized AI agents that collaborate to solve a complex problem. Instead of relying on a single, monolithic AI, this approach divides the task among “expert” agents (e.g., a security expert, a performance expert), each with a specific role, to produce a more robust and comprehensive result.

Why use multiple AI agents for code review instead of just one?

Using multiple specialized agents mimics a real-world expert panel. A single "generalist" AI might provide good overall feedback, but it can miss deep, domain-specific issues. A multi-agent system gives you dedicated analysis of security vulnerabilities, performance bottlenecks, code style, and compliance in parallel, which can produce a deeper and more thorough review.

How does the “interview-based” approach work in this system?

The interview-based approach, orchestrated by LangGraph, treats each specialized AI persona as an expert to be interviewed. A controlling agent asks each expert a series of questions related to their specific domain (e.g., asking the security agent about potential vulnerabilities). The transcripts from these parallel interviews are then compiled into a final, comprehensive report.
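The fan-out and fan-in can be illustrated without LangGraph. The sketch below is a simplified, standard-library-only stand-in, not the article's code: `ask_expert` is a hypothetical placeholder for the LLM call that answers in the persona's voice, each interview runs a fixed number of question/answer turns with a templated question, and `concurrent.futures.ThreadPoolExecutor` stands in for LangGraph's parallel execution of `execute_interview`.

```python
from concurrent.futures import ThreadPoolExecutor
from dataclasses import dataclass


@dataclass
class Expert:
    name: str
    role: str


def ask_expert(expert: Expert, question: str) -> str:
    # Hypothetical placeholder for an LLM call that answers in the
    # expert's persona; a real system would prompt a chat model here.
    return f"{expert.name} ({expert.role}) on '{question}': ..."


def run_interview(expert: Expert, topic: str, max_turns: int = 2) -> str:
    """Run one interview: an interviewer asks questions, the expert answers."""
    lines = []
    for turn in range(1, max_turns + 1):
        # Illustrative fixed question template; a real interviewer agent
        # would generate follow-up questions from the previous answers.
        question = f"Question {turn} about {topic} from a {expert.role} perspective"
        lines.append(f"Q: {question}")
        lines.append(f"A: {ask_expert(expert, question)}")
    return "\n".join(lines)


def run_all_interviews(experts: list[Expert], topic: str) -> list[str]:
    # One interview per expert, run concurrently; map() keeps input order,
    # so transcript i always belongs to experts[i].
    with ThreadPoolExecutor(max_workers=max(1, len(experts))) as pool:
        return list(pool.map(lambda e: run_interview(e, topic), experts))


if __name__ == "__main__":
    panel = [Expert("Raj Patel", "Security Analyst"), Expert("Alex Kim", "CEO")]
    for transcript in run_all_interviews(panel, "framework choices"):
        print(transcript, end="\n\n")
```

Because each interview only reads its own expert, the interviews are independent and can run concurrently; the ordered list of transcripts is what a report-writing step would then compile.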

What is the role of the “human-in-the-loop” in this AI system?

The human-in-the-loop serves as a quality control checkpoint. After the system dynamically generates the expert AI personas for a review, the process is interrupted to allow a human to approve, reject, or modify the list of experts. This ensures that the right "team" of AI agents is assembled before the time-consuming review process begins, which helps keep the final output aligned with the user's goals.
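The checkpoint comes down to a routing decision after the `user_feedback` node: either accept the panel and move on to the interviews, or feed the comment back into persona generation. The article does not show its router, so the function below is a hypothetical sketch. It assumes the approval keyword is the string "approve" (as in the `update_state` call earlier in this walkthrough), treats missing feedback as approval, and uses `create_analysts` as an illustrative name for the persona-generation node. In LangGraph, a function like this would typically be attached with `add_conditional_edges`; the real graph would more likely return one `Send` per expert on approval to fan out the interviews, while this sketch returns plain node names to stay dependency-free.

```python
from typing import Optional

APPROVE = "approve"  # Assumed approval keyword, matching the update_state call.


def route_after_feedback(state: dict) -> str:
    """Hypothetical router for the edge leaving the user_feedback node.

    Returns 'execute_interview' when the panel is approved, otherwise
    'create_analysts' (an illustrative node name) so the personas are
    regenerated with the feedback.
    """
    feedback: Optional[str] = state.get("human_analyst_feedback")
    # Assumption: no feedback at all is also treated as approval.
    if feedback is None or feedback.strip().lower() in {"", APPROVE}:
        return "execute_interview"
    # Any other text is an edit request for the persona generator.
    return "create_analysts"


if __name__ == "__main__":
    for fb in ["Add in the CEO of gen ai native startup", "approve"]:
        print(f"{fb!r} -> {route_after_feedback({'human_analyst_feedback': fb})}")
```

With the two feedback values used in this walkthrough, the edit request routes back to persona generation and "approve" routes on to the interviews.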