This article is part of a series in which we create information retrieval chatbots and investigate possible technical solutions and their use cases.

You can read more about the series here – Production LLM: how to harness the power of LLM in real-life business cases.

We start with the simplest chatbot: a retriever backed by a vector database.

As mentioned in the first publication (link above), this algorithm works best with textual data. So, we will be working with articles from this blog.

Where do we start

Even though I said it is simple (and I assure you it is), it is not elementary. There are a few essential components that we have to think about before we start the implementation.

First and foremost, we have to prepare the articles.

We can use our chatbot to answer questions or to recommend relevant articles from the blog. Depending on which function we want the bot to perform (Q&A or article recommendation), content preparation differs:

- For the Q&A bot: we want to keep the content of the articles. It has to be clean, and we need to preserve metadata (data describing the content) in a way that helps our bot better understand the content structure. If we work with lengthy articles and split them into chunks, it is best to split them in a way that respects the content’s semantics. Simply put, each chunk should ideally represent a complete logical part of the content (a section, a topic, etc.).

- For the article recommendation bot: keeping the entire content is unnecessary. Ideally, we want to summarize the whole article and store it as one vector. For this purpose, we generate a high-quality summary of the article that preserves most of the information within a reasonable number of characters (1,000 to 5,000). In most cases, you will need to tune the vector dimensionality (1,536 to 3,072) and experiment with the chunk size to get the best results.
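For the Q&A bot, the idea that each chunk should be a complete logical part of the content can be sketched in plain Python. This is a minimal illustration under stated assumptions, not the splitter used later in this article: it assumes the text marks sections with lines starting with `#` (PDF text will need its own way of detecting sections), keeps each section whole when it fits, and splits an oversized section only at blank-line paragraph breaks. `split_by_sections` is a hypothetical helper.

```python
def split_by_sections(text: str, max_chars: int = 1500) -> list[str]:
    """Split text into chunks that never cross a section boundary.

    Assumes a section starts at every line beginning with '#'. A section that fits
    into max_chars stays whole; a longer one is packed paragraph by paragraph
    (paragraphs are separated by blank lines). A single paragraph longer than
    max_chars is kept whole rather than cut mid-sentence.
    """
    # group lines into sections, opening a new section at each heading line
    sections: list[list[str]] = []
    for line in text.splitlines():
        if line.startswith('#') or not sections:
            sections.append([])
        sections[-1].append(line)

    chunks: list[str] = []
    for lines in sections:
        section = '\n'.join(lines).strip()
        if not section:
            continue
        if len(section) <= max_chars:
            chunks.append(section)
            continue
        # oversized section: greedily pack whole paragraphs into chunks
        paragraphs = [p.strip() for p in section.split('\n\n') if p.strip()]
        current = ''
        for paragraph in paragraphs:
            candidate = f'{current}\n\n{paragraph}' if current else paragraph
            if len(candidate) <= max_chars or not current:
                current = candidate
            else:
                chunks.append(current)
                current = paragraph
        if current:
            chunks.append(current)
    return chunks


article = '# Intro\nShort intro.\n\n# Setup\nInstall things.\n\nConfigure things.'
for chunk in split_by_sections(article, max_chars=30):
    print(repr(chunk))
```

Note the trade-off in the last chunk: it no longer carries its section heading, so in practice you may want to prepend the heading (or store it as metadata) for every chunk of a split section.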

We can use content compression algorithms in both cases. They compress the content by removing words that have only aesthetic value and keeping only the information the LLM needs.
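To make the idea concrete, here is a deliberately naive sketch that only drops words from a hand-picked filler list. The word list and the `compress` helper are assumptions for illustration; real compression techniques decide what to remove far more carefully, for example by letting a language model score how much each token matters.

```python
# hypothetical, hand-picked filler words; a real system would not rely on a fixed list
FILLER_WORDS = {'very', 'really', 'just', 'basically', 'actually', 'quite'}


def compress(text: str, filler_words: set[str] = FILLER_WORDS) -> str:
    """Drop filler words (case-insensitive, ignoring trailing punctuation) and normalize whitespace.

    Naive on purpose: a dropped word takes any punctuation attached to it along.
    """
    kept = [word for word in text.split() if word.lower().strip('.,;:!?') not in filler_words]
    return ' '.join(kept)


print(compress('This is really just a very simple example.'))  # This is a simple example.
```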

In one of the future articles, we will talk about content and prompt compression techniques.

Another preparation step is creating all the necessary accounts. In our case, they are:

- Pinecone: a vector storage service. We will store the vectorized content in a Pinecone index and use it in the RAG algorithm. If you do not have an account, please create a Pinecone account. By default, the system will create your first project. If you want, you can create another project by following these instructions: create a Pinecone project. Now open your project and copy your API key.

- OpenAI: we will use the GPT-4 LLM and OpenAI embedding models. If you don’t have an OpenAI account, please create one. You can follow these instructions: OpenAI account setup and API key generation.

- BigQuery (BQ): a data warehouse that supports SQL queries. Later, I will explain the use and purpose of the BQ service account. For now, I’ll just mention that it is optional and you can decide to skip it. Here are instructions on how to get a BQ service account: Create a BigQuery service account key file.

- Google Colab: the easiest and fastest way to try what is written in this article. Most likely, you are already using it. If you are not, here is a link to the signup page: Google Colab. Click the Sign Up button and follow the simple instructions to create your account. If you need help using Colab, here is a guide to Colab from the Colab team.

Now, you are all set to follow along. Let’s go ahead and get started.

Prepare articles

For this demonstration, I saved all the articles from this blog as PDF files. There are four categories in this blog:

- Artificial Intelligence

- Tech Navigator

- Business

- Security

So, I created four folders, one per category, and saved each article in its category’s folder.

Everything is ready, and we can get started.

We will use the LangChain framework and Pinecone SDK, which we must install first.

```
!pip install --upgrade --quiet pinecone-client langchain-openai tiktoken langchain langchain_community pdfminer.six
```

Everything is ready to import the articles, parse them, and prepare them for loading into Pinecone.

```python
# import modules
import os

from langchain_community.document_loaders import PDFMinerLoader
from langchain_text_splitters import RecursiveCharacterTextSplitter

# initialize text splitter
text_splitter = RecursiveCharacterTextSplitter(
    chunk_size=1500,
    chunk_overlap=50,
    length_function=len,
    is_separator_regex=False,
)

# remember, I've created four folders, one for each category
categories = ['artificial_intelligence', 'tech_navigator', 'business', 'security']

# parsed and chunked documents will be collected in this list
data = []

# look into each folder
for category in categories:
    # take all files in the directory
    files_list = os.listdir(category)
    print(f'Loading folder: {category}')
    print(files_list)

    # parse each file
    for file_name in files_list:
        # skip anything that is not a PDF (e.g. hidden or checkpoint files)
        if not file_name.lower().endswith('.pdf'):
            continue

        # PDFMinerLoader parses the entire PDF into a single LangChain Document,
        # rather than producing a separate Document for each page
        loader = PDFMinerLoader(os.path.join(category, file_name))
        docs = loader.load()

        # split the parsed Document into chunks with the text splitter above
        # and add the chunks to the list of all Documents
        data.extend(text_splitter.split_documents(docs))
```

One more thing I would like to do is include the link to the article in the body of each Document:

```python
for item in data:
    # metadata['source'] holds the file path, e.g. '<category>/<file_name>.pdf'
    title = item.metadata['source'].replace('.pdf', '')
    # prepend the article link so it becomes part of the text the LLM sees
    item.page_content = f'Article link: {title}.\n' + item.page_content
```

I do this because later I will ask the LLM to recommend relevant articles in its reply. Therefore, the content of each Document must include the article link.

Another option is to keep this information in the Document’s metadata instead of the content, because an LLM can generate a vector store query that includes a metadata filter.

However, from what I have seen, adding this data to the Document content is much more reliable and produces better results.
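Why does the link have to live in the content? With the `stuff` chain used later in this article, LangChain’s default document prompt inserts only each retrieved Document’s `page_content` into the LLM prompt, so metadata such as `source` never reaches the model unless you customize that prompt. The sketch below imitates that formatting step in plain Python; `Doc` and `stuff_documents` are hypothetical stand-ins, not LangChain classes, and the file names are invented.

```python
from dataclasses import dataclass, field


@dataclass
class Doc:
    """Hypothetical stand-in for a LangChain Document."""
    page_content: str
    metadata: dict = field(default_factory=dict)


def stuff_documents(docs: list[Doc], separator: str = '\n\n') -> str:
    """Join only the page_content of each document, like a default 'stuff' prompt.

    Metadata is ignored, so anything the LLM must see has to be in page_content.
    """
    return separator.join(doc.page_content for doc in docs)


docs = [
    Doc('Article link: security/example-article.\nChunk text...', {'source': 'security/example-article.pdf'}),
    Doc('A chunk without a link in its text.', {'source': 'business/another-article.pdf'}),
]
prompt_context = stuff_documents(docs)
print(prompt_context)  # mentions security/example-article, but never business/another-article
```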

OK, now we’re all set to create our Pinecone Index.

Create index

You can think of an index as a table in your database that stores your data as vectors. Each vector comes with its content and metadata that describes it.

If this is new to you, you are probably asking yourself, “OK, what is a vector?”

A vector is a numeric representation of text: a fixed-length list of numbers that represents a particular text and lets us compare how semantically close texts are to each other.

We represent texts as vectors because we can calculate how close vectors are to each other in multidimensional space. The most commonly used measures are:

- cosine similarity,

- Euclidean distance.
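Here is a minimal sketch of both measures in plain Python, on made-up three-dimensional vectors (the OpenAI embeddings used in this article have 1,536 dimensions). Cosine similarity compares only direction, while Euclidean distance also reflects length, so the two vectors below are a perfect match by cosine yet still the square root of 2 apart.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between a and b: 1 = same direction, 0 = orthogonal."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


def euclidean_distance(a: list[float], b: list[float]) -> float:
    """Straight-line distance between a and b: 0 = identical vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


# made-up 3-D vectors pointing in the same direction but with different lengths
a = [1.0, 0.0, 1.0]
b = [2.0, 0.0, 2.0]
print(cosine_similarity(a, b))   # approximately 1.0
print(euclidean_distance(a, b))  # approximately 1.414 (the square root of 2)
```

For OpenAI embeddings the choice matters less than it may seem: they are normalized to length 1, so cosine similarity and Euclidean distance produce the same ranking.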

When a user asks our bot something, the algorithm creates a vector for the question. Then, it calculates the distance between the question vector and the vectors stored in the database. It takes the n vectors closest to the question vector, retrieves the text stored alongside them, and passes that text to the LLM as part of the context.
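The retrieval step described above can be sketched in a few lines of plain Python. This is a simplified illustration, not how Pinecone works internally (vector databases typically use approximate nearest-neighbor indexes rather than scoring every stored vector); the three-dimensional vectors and texts are made up, and `retrieve` is a hypothetical helper.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


def retrieve(question_vector: list[float], store: list[tuple[list[float], str]], n: int = 3) -> list[str]:
    """Return the texts stored with the n vectors closest to question_vector.

    The text is kept next to its vector, so nothing is ever decoded from the vector itself.
    """
    ranked = sorted(store, key=lambda item: cosine_similarity(question_vector, item[0]), reverse=True)
    return [text for _, text in ranked[:n]]


# made-up 3-D "embeddings" with the text each one was created from
store = [
    ([0.9, 0.1, 0.0], 'chunk about deploying ML models'),
    ([0.0, 1.0, 0.0], 'chunk about business strategy'),
    ([0.7, 0.0, 0.7], 'chunk about MLOps on AWS'),
]
question_vector = [1.0, 0.0, 0.2]

context_texts = retrieve(question_vector, store, n=2)
prompt = 'Answer using this context:\n' + '\n'.join(context_texts)
print(prompt)
```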

There is a critical caveat that people sometimes overlook. The algorithm calculates the distance between vectors in multidimensional space, which, strictly speaking, is not semantic similarity. The nearest vector is not necessarily semantically close to the request; it is simply the closest one.

This also means that if you reformulate the request, the distances, and therefore the list of closest vectors, will change.

It is crucial to understand this point. The way you prepare your content (parsing, summarizing, chunking, etc.) and your request (context, request re-formulation, summarization, etc.) will very much affect the results you get.

In practice, this means you are unlikely to load your content straight from a bunch of PDF files. In a production-grade setup, your content will most likely reside in a database, which may also be part of an application (an LMS, a documentation system, etc.).

A company that uses vector retrievers would most likely create and maintain a content data warehouse. The warehouse will contain raw, cleaned, and prepared (summarized, rewritten, etc.) data.

Loading data from such a warehouse looks slightly different. Below is the code that connects to the BigQuery data warehouse and loads content from it so that it can be added to the vector store.

```
!pip install --quiet google-auth google-cloud-bigquery
```

```python
import json

from google.oauth2 import service_account
from langchain_community.document_loaders import BigQueryLoader

# load the service account key file (JSON) you created for BigQuery
with open('<PATH_TO_SERVICE_ACCOUNT_KEY_FILE>') as key_file:
    SERVICE_ACCOUNT_CREDENTIALS = json.load(key_file)

credentials_out = service_account.Credentials.from_service_account_info(SERVICE_ACCOUNT_CREDENTIALS)

# each row returned by the query becomes one LangChain Document
BASE_QUERY = 'SELECT * FROM <GC_project>.<dataset>.<table>'

loader = BigQueryLoader(query=BASE_QUERY, credentials=credentials_out)
data = loader.load()
```

Now, let’s move on to creating an index.

```python
import os
import time

from langchain_community.vectorstores import Pinecone as LangchainPinecone
from langchain_openai import OpenAIEmbeddings
from pinecone import Pinecone, ServerlessSpec

# credentials: replace the placeholders with your own values
PINECONE_API_KEY = '<PINECONE_API_KEY>'  # the key you copied from your Pinecone project
OPENAI_API_KEY = '<OPENAI_API_KEY>'
OPENAI_ORG = '<OPENAI_ORG>'

# the LangChain Pinecone integration reads the key from this environment variable
os.environ['PINECONE_API_KEY'] = PINECONE_API_KEY

# initialize the OpenAI embedding model
embeddings = OpenAIEmbeddings(
    api_key=OPENAI_API_KEY,
    organization=OPENAI_ORG,
)

# initialize the Pinecone SDK (named pc so it does not shadow the pinecone module)
pc = Pinecone(api_key=PINECONE_API_KEY)

# choose a name for your index
index_name = 'blog-articles'

# check if the index already exists; if not, create it
if index_name in pc.list_indexes().names():
    print('Index exists')
else:
    # dimension must match the embedding model output
    # (1536 for OpenAI's default text-embedding-ada-002); metric defaults to cosine
    pc.create_index(
        name=index_name,
        dimension=1536,
        # metric='euclidean',
        spec=ServerlessSpec(cloud='aws', region='us-west-2'),
    )
    # wait until the new index is ready to accept data
    while not pc.describe_index(index_name).status['ready']:
        time.sleep(5)

# embed the Documents and load them into the index
doc_db = LangchainPinecone.from_documents(
    data,
    embeddings,
    index_name=index_name,
)
```

The index is ready, and now you can build a retriever that works with the vector database.

Build retriever

The retriever algorithm consists of three main components:

- an OpenAI embedding model that encodes documents and user questions into vectors,

- the LangChain connector to the Pinecone vector storage,

- the LangChain retrieval chain, which combines the two components above with an LLM: it retrieves results for the user’s request and has the LLM interpret them.

Let’s look at the code below:

```python
import os

from langchain.chains import RetrievalQA
from langchain_community.vectorstores import Pinecone as LangchainPinecone
from langchain_openai import ChatOpenAI, OpenAIEmbeddings

index_name = 'blog-articles'

# credentials: replace the placeholders with your own values
OPENAI_API_KEY = '<your_openai_api_key>'
OPENAI_ORG = '<your_openai_organization_key>'
os.environ['PINECONE_API_KEY'] = '<PINECONE_API_KEY>'

CHAT_GPT_DEFAULT_MODEL = 'gpt-4'

# choose LLM generation parameters
max_output_tokens = 1024
temperature = 0.2
top_p = 0.95
frequency_penalty = 1
presence_penalty = 1

# initialize the embedding model (the same one used to build the index)
embeddings = OpenAIEmbeddings(
    api_key=OPENAI_API_KEY,
    organization=OPENAI_ORG,
)

# initialize the LLM
llm = ChatOpenAI(
    api_key=OPENAI_API_KEY,
    organization=OPENAI_ORG,
    model=CHAT_GPT_DEFAULT_MODEL,
    max_tokens=max_output_tokens,
    temperature=temperature,
    top_p=top_p,
    frequency_penalty=frequency_penalty,
    presence_penalty=presence_penalty,
)

# connect to the existing Pinecone index
doc_db = LangchainPinecone.from_existing_index(index_name=index_name, embedding=embeddings)

# initialize the retrieval chain; 'stuff' puts all retrieved chunks into a single prompt
qa = RetrievalQA.from_chain_type(
    llm=llm,
    chain_type='stuff',
    retriever=doc_db.as_retriever(search_type='mmr', search_kwargs={'k': 3, 'fetch_k': 5}),
)
```

Now we are all set and can go ahead and test our vector retriever.
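A note on the retriever settings above: `search_type="mmr"` stands for maximal marginal relevance. With `fetch_k=5` and `k=3`, the retriever fetches the 5 chunks closest to the question and then picks 3 of them one at a time, each time preferring a chunk that is relevant to the question but not redundant with the chunks already picked. The sketch below illustrates that selection in plain Python; the vectors are made up, `mmr` is a hypothetical helper rather than LangChain’s implementation, and `lambda_mult=0.5` (the balance between relevance and diversity) mirrors LangChain’s default.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


def mmr(query: list[float], candidates: list[list[float]], k: int = 3, lambda_mult: float = 0.5) -> list[int]:
    """Pick k candidate indices by maximal marginal relevance.

    Each step picks the candidate maximizing
    lambda_mult * sim(query, c) - (1 - lambda_mult) * max(sim(c, s) for s already selected).
    """
    relevance = [cosine_similarity(query, c) for c in candidates]
    selected: list[int] = []
    remaining = list(range(len(candidates)))

    def score(i: int) -> float:
        # redundancy: similarity to the most similar already-selected candidate (0 if none yet)
        redundancy = max((cosine_similarity(candidates[i], candidates[j]) for j in selected), default=0.0)
        return lambda_mult * relevance[i] - (1 - lambda_mult) * redundancy

    while remaining and len(selected) < k:
        best = max(remaining, key=score)
        selected.append(best)
        remaining.remove(best)
    return selected


# made-up 2-D vectors: candidate 1 is a near-duplicate of candidate 0
query = [1.0, 1.0]
candidates = [[1.0, 0.9], [1.0, 0.8], [0.5, 1.0]]
print(mmr(query, candidates, k=2))                   # [0, 2]: the near-duplicate is skipped
print(mmr(query, candidates, k=2, lambda_mult=1.0))  # [0, 1]: pure relevance ranking
```

Raising `lambda_mult` toward 1 favors relevance; lowering it toward 0 favors diversity.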

Test

To test our retriever, we will provide context and a query. The context will instruct our LLM about its role and what is expected of it, while the query will be a user request.

context = '''

You are helpful assistant in the blog of Alex Ostrovskyy.

You can only answer questions about the materials of blog.

Answer only based on the data you were provided with.

Provide Article link from the data you are provided with.

'''

query = '''

How to deploy ML model in AWS?

'''

result = qa.invoke(context + query)

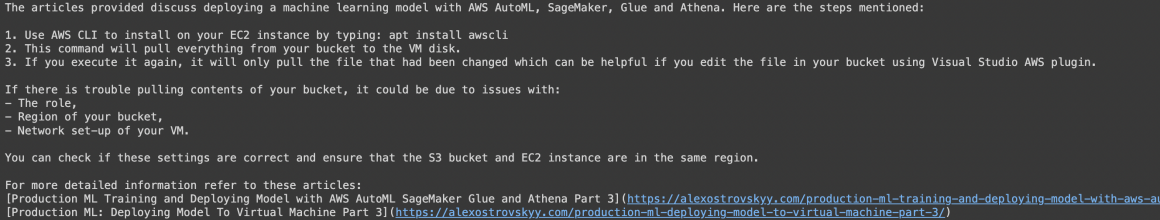

print(result['result'])The results I received were the following:

As you can see, even with such a simple context and virtually no content preparation, we received fair results. The retriever even tried to recommend relevant articles.

Play with the retriever: change the context and ask various questions. You will get a good sense of what you can do to improve the algorithm’s performance.

Improve

In this article, we combined the Q&A retriever with the recommender. However, I strongly recommend separating them in a production implementation because they need different contexts and data.

Another direction is cleaning and improving content. Here you can focus on:

- Cleaning. Every word and sentence affects the vector and the similarity scores. Therefore, it is wise to remove all unnecessary information from the documents.

- Summarizing. It is best to calibrate the granularity of your content to the granularity of your users’ requests. In other words, you need to understand how specific your users’ questions will be. If your content describes all the nuts and bolts of networking, but your users only want to know how to call a technician and restart their router, your bot’s answers will not be what they need. This is especially true for recommender retrievers: you don’t need all of the content, because your goal is only to recommend the most relevant article, which will likely contain the information the user is looking for.

- Rewriting and enhancing. This point is related to the previous one. We can improve the content to make it better suited to our purpose. For example, I often see content items that mix several topics. This affects distance metrics and makes retrieval results less stable. Simply put, the more topics are mixed in your content, the more likely you are to get strange and unrelated results. In such cases, I recommend structuring (or rewriting) the content so that each piece of it is clear and covers one topic or a small number of topics.

- Defining optimal vector and document sizes. As in our case, articles can be long. If we try to encode a long article as a single vector, two things are likely to happen: we lose some of the information, and (more importantly) we can overflow the context window of our LLM, so it will not be able to generate an adequate reply (or any reply at all). It is essential to come up with the right chunking strategy, and it will very much depend on the specific text. I recommend chunking methods that respect the semantic boundaries of the text: they try to split a text into chunks according to its meaning, so that each chunk holds a semantically complete piece.
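A quick way to sanity-check a chunking strategy against the context window is a back-of-the-envelope token budget. The sketch below assumes roughly 4 characters per token for English text (a common rule of thumb, not an exact count; a tokenizer such as tiktoken gives exact numbers) and an 8,192-token context window, which matches the original `gpt-4` model. Both values, the 300-token prompt size, and the `fits_context` helper are assumptions to adjust for your setup.

```python
import math


def fits_context(chunk_chars: int, k: int, prompt_tokens: int, max_output_tokens: int,
                 context_window: int = 8192, chars_per_token: float = 4.0) -> tuple[bool, int]:
    """Roughly estimate whether k chunks + prompt + reply fit into the LLM context window.

    Returns (fits, estimated_total_tokens). Uses a characters-per-token heuristic,
    so treat the result as an estimate, not an exact count.
    """
    chunk_tokens = math.ceil(chunk_chars / chars_per_token)
    total_tokens = k * chunk_tokens + prompt_tokens + max_output_tokens
    return total_tokens <= context_window, total_tokens


# chunk_size=1500 and k=3 from this article, an assumed 300-token prompt, max_tokens=1024
print(fits_context(1500, 3, 300, 1024))    # (True, 2449)
# a hypothetical 40,000-character article stored as one chunk
print(fits_context(40_000, 3, 300, 1024))  # (False, 31324)
```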

Last but not least – prompt template and context. More detailed and more explicit contexts usually help the retriever produce better results.

This was another long read :). I hope you enjoyed reading it (and maybe following along) and found it helpful.

See you in the following article!