This is the second part of the article. Please read the first part here – Semantic Similarity in Natural language Processing. Part 1.

Custom model

It is time to change the approach and try training a custom model tailored for a specific task. Below I will provide an example of a relatively simple Siamese network trained on a dataset from Kaggle.

This is not something we would use in a production-grade system. But unfortunately, I am unable to share neither the architecture of models nor datasets we’ve used to train models in production. Having said that, I think the example below will provide you with a strong intuition about what the semantic similarity model could look like.

Assuming we will start from scratch, let’s import the required libraries.

!pip install -q -U trax #install trax because colab vms have no pre-installed

import pandas as pd #pandas for loading and working with dataset

import numpy as np #numpy for operations with matrices and other math

import os #os for operations with files

import nltk #nltk

nltk.download("punkt") #download ntlk unsupervised sentence tokenizer

import trax #download trax and components

from trax import layers as tl

from trax.supervised import training

from trax.fastmath import numpy as fastnp

from collections import defaultdict #to generate dictionaty-like objects

from functools import partial #fixate part of the func arguments and generates new function (we will use it to create loss layer)

import random

random.seed(111)Download the dataset.

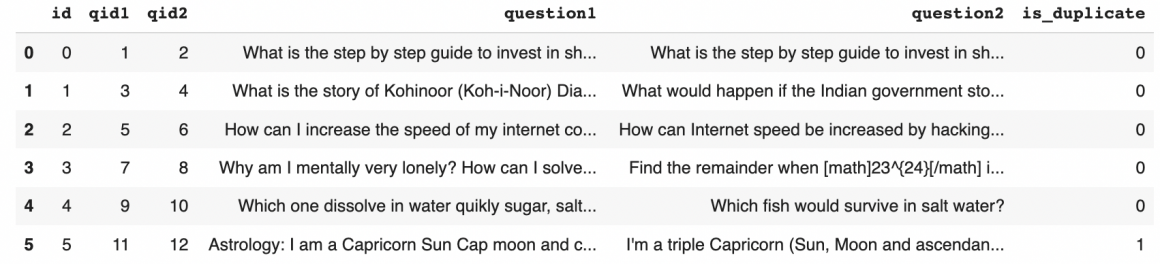

data = pd.read_csv('<path/to/the/dataset.csv>')If you run data.head() you will see that our dataset contains pairs of questions and label if particular pair is a duplicate.

You can download the dataset here:

Divide dataset to train and test:

n_train = 300000

n_test = 10240

data_train = data[:n_train]

data_test = data[n_train:n_train+n_test]

del(data) Select indexes of all duplicate questions:

train_idx = (data_train["is_duplicate"] == 1).to_numpy()

train_idx = [i for i,x in enumerate(train_idx) if x]Turn train dataset to NumPy array so we can feed it to the model:

Q1_train_words = np.array(data_train["question1"][train_idx])

Q2_train_words = np.array(data_train["question2"][train_idx])Prepare the test dataset the same way:

Q1_test_words = np.array(data_test["question1"])

Q2_test_words = np.array(data_test["question2"])

y_test = np.array(data_test["is_duplicate"])Next, we will create arrays that we will fill with tokenized sentences:

#test

Q1_train = np.empty_like(Q1_train_words)

Q2_train = np.empty_like(Q2_train_words)

#train

Q1_test = np.empty_like(Q1_test_words)

Q2_test = np.empty_like(Q2_test_words) Create a vocabulary dictionary. With defaultict, all out-of-vocabulary tokens will be zero.

vocab = defaultdict(lambda: 0)Set padding token to 1. I hope you know the concept of padding. If not, let me try to explain it very briefly. All sentences have different lengths, making it difficult to feed their vectors to the model. To overcome this challenge, we define the length of the longest sentence and pad all others to have the same length. I hope it is clear now.

vocab["<PAD>"] = 1Tokenize train sentences and add words from test sentences to vocabulary:

for idx in range(len(Q1_train_words)):

Q1_train[idx] = nltk.word_tokenize(Q1_train_words[idx])

Q2_train[idx] = nltk.word_tokenize(Q2_train_words[idx])

q = Q1_train[idx] + Q2_train[idx]

#build vocabulary where keys are words and values are indexes

for word in q:

if word not in vocab:

vocab[word] = len(vocab) + 1Tokenize test sentences:

for idx in range(len(Q1_test_words)):

Q1_test[idx] = nltk.word_tokenize(Q1_test_words[idx])

Q2_test[idx] = nltk.word_tokenize(Q2_test_words[idx]) Now we will convert arrays of words (tokenized sentences) to arrays of integers. Remember that we’ve created a vocabulary with numbers representing each word in the train set. We also know that all other words we did not have in the training dataset will be replaced by 0.

#convert train dataset tokenized sentences to integers

for i in range(len(Q1_train)):

Q1_train[i] = [vocab[word] for word in Q1_train[i]]

Q2_train[i] = [vocab[word] for word in Q2_train[i]]

#convert test dataset tokenized sentences to integers

for i in range(len(Q1_test)):

Q1_test[i] = [vocab[word] for word in Q1_test[i]]

Q2_test[i] = [vocab[word] for word in Q2_test[i]]For the model’s training, we will need train and validation data, so let’s take part of the train data for validation purposes.

split = int(len(Q1_train) * 0.8)

train_Q1, train_Q2 = Q1_train[:split], Q2_train[:split]

val_Q1, val_Q2 = Q1_train[split:], Q2_train[split:] Helper functions

Now we will create a helper function that will generate batches of data. We will use it to train and evaluate our model and serve predictions. This function will receive question pairs (Q1 and Q2) and the desired batch size and produce batches with tuples. Each tuple consists of two arrays and looks like this: ( [q1.1, q1.2, q1.3, …], [q2.1, q2.2, q2.3, …] ). q1.1 is a duplicate of q2.1 but is not duplicated with any other question in batch.

def data_generator(Q1, Q2, batch_size, pad=1, shuffle=True):

#initialize variables

input1, input2 = [], []

idx = 0

len_q = len(Q1)

question_index = [*range(len_q)]

#shuffle questions if necessary

if shuffle:

random.shuffle(question_index)

#launch an infinite loop that yield batches

while True:

#check if we are not exceeding size of dataset

if idx >= len_q:

#if yes, start over

idx = 0

if shuffle:

random.shuffle(question_index)

#get question pairs at the index positions

q1 = Q1[question_index[idx]]

q2 = Q2[question_index[idx]]

#increment index

idx += 1

#start preparing the output arrays

input1.append(q1)

input2.append(q2)

#wait untill we have batch

if len(input1) == batch_size:

#calculate the maximum length to which we will be padding all sentences

max_len = max(max([len(q) for q in input1]),

max([len(q) for q in input2]))

max_len = 2**int(np.ceil(np.log2(max_len)))

b1, b2 = [], []

#perform padding

for q1, q2 in zip(input1, input2):

q1 = q1 + [pad] * (max_len - len(q1))

q2 = q2 + [pad] * (max_len - len(q2))

#add padded sentences to batch

b1.append(q1)

b2.append(q2)

#return new batch

yield np.array(b1), np.array(b2)

#clean arrays

input1, input2 = [], [] The following helper function will generate our model. It is a good practice to create such a function. For example, later, we might want to perform Neural Architecture Search as described in Production ML: Model Tuning and Neural Architecture Search, where such generator function will be handy.

The model generator function will not have the required parameters because we pretty much have all we need. We can calculate the size of our vocabulary and set the default number of dimensions in embeddings. It will return an instance of a model.

def Siamese(vocab_size=len(vocab), d_model=128):

#function normalizing the output

def normalize(x):

return x / fastnp.sqrt(fastnp.sum(x*x, axis=-1, keepdims=True))

#prepare a sequential questions processor

processor = tl.Serial(

#layer producing embeddings

tl.Embedding(vocab_size=vocab_size, d_feature=d_model),

#LSTM layer

tl.LSTM(n_units=d_model),

#calculate mean over each column

tl.Mean(axis=1),

#normalize the output

tl.Fn("Normalize", lambda x: normalize(x))

)

#combined two processors for parallel processing of two questions

model = tl.Parallel(processor, processor)

return modelHelper function calculates Triplet Loss and another function that will package Triplet Loss into a model layer. The Triplet Loss calculator receives two vectors with dimensions (batch_size, model_dimension) associated with Q1 and Q2.

def TripletLossFn(v1, v2, margin=0.25):

#calculate dot product of two vectors (cosine similarity)

scores = fastnp.dot(v1, v2.T)

#calculate batch size

batch_size = len(scores)

#separate positive diagonal entries in cosine similarity scores matrix

positive = fastnp.diagonal(scores)

#separate negative entries

negative_without_positive = scores - 2.0 * fastnp.eye(batch_size)

#calculate minimum negative (get closest element) in each row

closest_negative = negative_without_positive.max(axis=1)

#create matrix with zeros in diagonal and negative scores elsewhere

negative_zero_on_duplicate = scores * (1.0 - fastnp.eye(batch_size))

#calculate mean of negative elements

mean_negative = fastnp.sum(negative_zero_on_duplicate, axis=1)/(batch_size - 1)

#A = subtract `positive` from `margin` and add `closest_negative`

triplet_loss1 = fastnp.maximum(0, margin - positive + closest_negative)

#B = subtract `positive` from `margin` and add `mean_negative`

triplet_loss2 = fastnp.maximum(0, margin - positive + mean_negative)

#add the two losses together and take the `fastnp.mean` of it

triplet_loss = fastnp.mean(triplet_loss1 + triplet_loss2)

#it was simple, right? ;)

return triplet_loss

#create a layer from the function above

def TripletLoss(margin=0.25):

triplet_loss_fn = partial(TripletLossFn, margin=margin)

return tl.Fn("TripletLoss", triplet_loss_fn)And finally, we create a function orchestrating the model training. It will receive a model generator, Triplet Loss layer generator, train and validation data generators, learning rate change schedule, and (optionally) path where we would like it to save the trained model. It will create and return an instance of a training loop that we will use to perform training.

def train_model(Siamese, TripletLoss, lr_schedule, train_generator=train_generator,

val_generator=val_generator, output_dir="model/"):

#get full path to model directory

output_dir = os.path.expanduser(output_dir)

#initialize training task

train_task = training.TrainTask(

labeled_data = train_generator,

loss_layer = TripletLoss(),

optimizer = trax.optimizers.Adam(0.001),

lr_schedule = lr_schedule .

)

#initialize evaluation task

eval_task = training.EvalTask(

labeled_data = val_generator,

metrics = [TripletLoss()],

n_eval_batches = 3

)

#initialize training loop

training_loop = training.Loop(

Siamese(),

train_task, eval_tasks = eval_task,

output_dir = output_dir

)

return training_loopNow we will prepare and launch training:

batch_size = 256

#initialize training data generator

train_generator = data_generator(train_Q1, train_Q2, batch_size, vocab["<PAD>"])

#initialize validation data generator

val_generator = data_generator(val_Q1, val_Q2, batch_size, vocab["<PAD>"])

#initialize training loop

training_loop = train_model(Siamese, TripletLoss, lr_schedule)

#kickoff training for 1000 steps

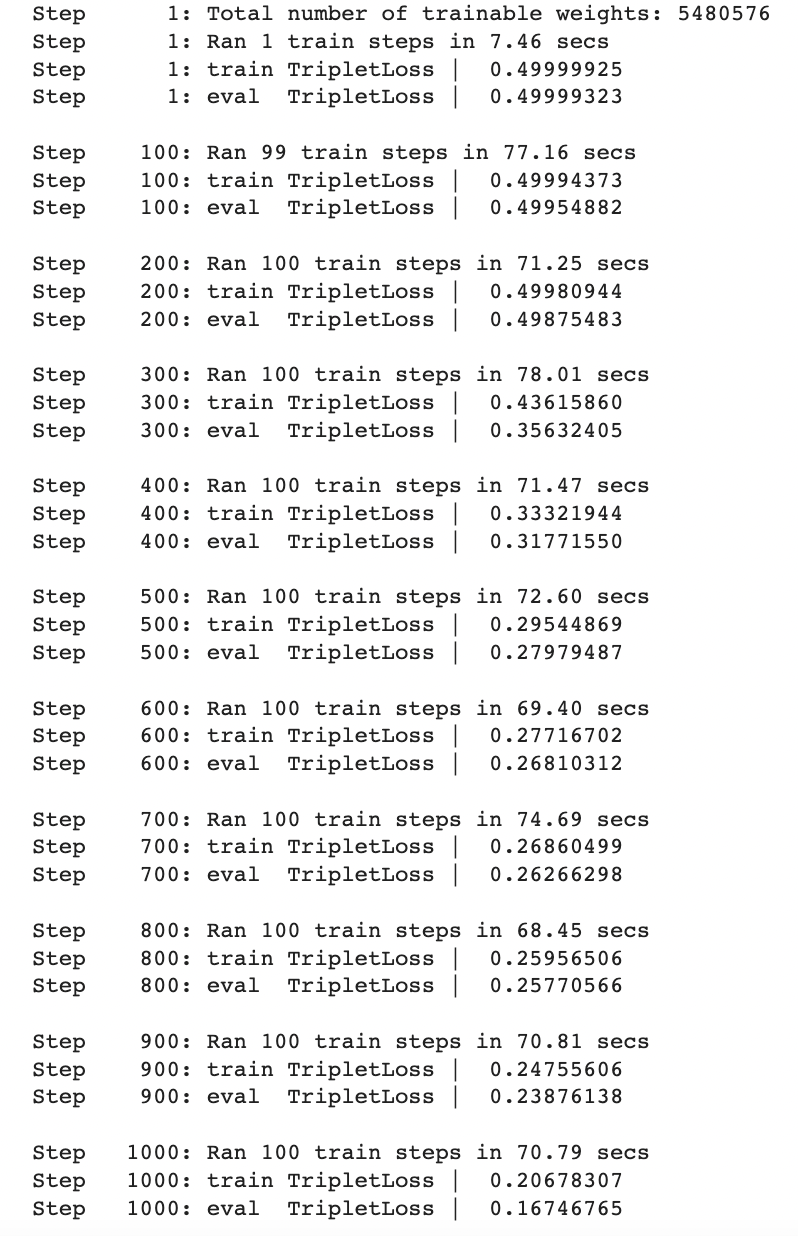

training_loop.run(1000)After a while, you will see the output looking like on the picture below:

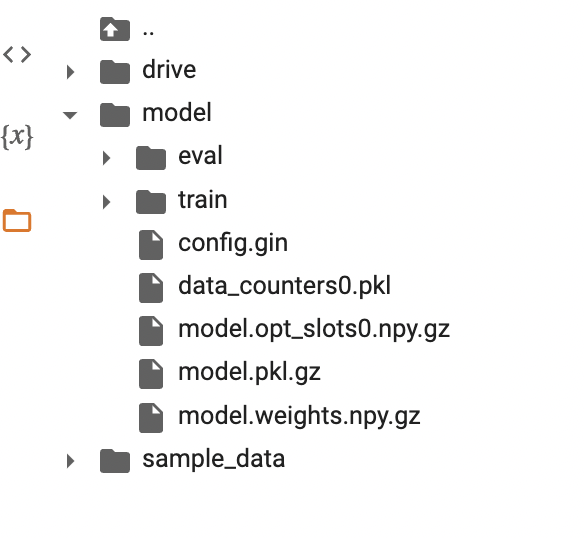

When the training is done, the model is saved to the output directory:

Let us load and evaluate model accuracy:

#initialize model and load saved weights

model = Siamese()

model.init_from_file("/content/model/model.pkl.gz")

#model evaluation helper function

#receives validation data, labels, evaluation threshold above which we consider questions to be similar

#vocabulary, data generator function, and batch size

def classify(test_Q1, test_Q2, y, threshold, model, vocab, data_generator=data_generator, batch_size=64):

#initiate accuracy variable

accuracy = 0

#launch evaluation loop for each batch

for i in range(0, len(test_Q1), batch_size):

#call data generator without shuffle and ask it to yield next batch of vectors

q1, q2 = next(data_generator(test_Q1[i:i+batch_size], test_Q2[i:i+batch_size],

batch_size, vocab["<PAD>"], shuffle=False))

#take the next batch of labels

y_test = y[i:i+batch_size]

#get two vectors from siamese model

v1, v2 = model((q1, q2))

#for every element in batch vectors

for j in range(batch_size):

#calculate cosine similarity

d = np.dot(v1[j], v2[j].T)

#and check if similarity is higher then a threshold

res = d > threshold

#increment accuracy if judgement was correct

accuracy += (y_test[j] == res)

#calculate total accuracy percentage

accuracy = accuracy / len(test_Q1)

return accuracyWe have everything we need to calculate model accuracy:

accuracy = classify(Q1_test, Q2_test, y_test, 0.7, model, vocab, batch_size=512)

print("Accuracy of the Model:", accuracy) You should see something like ‘Accuracy of the Model: 0.68544921875’.

Now we will test if the model will perform better than a pre-trained model above on subtle differences in concepts. We will create another helper function that receives questions, model, vocabulary, and data generator and returns the classification result.

def predict(question1, question2, threshold, model, vocab, data_generator=data_generator, verbose=False):

#tokenize questions

q1 = nltk.word_tokenize(question1)

q2 = nltk.word_tokenize(question2)

Q1, Q2 = [], []

#get integer representation of words in sentences

for word in q1:

Q1 += [vocab[word]]

for word in q2:

Q2 += [vocab[word]]

#create vector representations of questions

Q1, Q2 = next(data_generator([Q1], [Q2], 1, vocab["<PAD>"])

v1, v2 = model((Q1, Q2))

#calculate cosine similarity

d = fastnp.dot(v1[0], v2[0].T)

#define if similarity score is above the threshold.

res = d > threshold

if (verbose):

print("Q1 = ", question1, "\nQ2 = ", question2)

print("similarity score = ", d)

print("result = ", res)

return res Let us run our questions pairs through the evaluation:

#pair 1

question1 = "How are you?"

question2 = "Are you fine?"

example1 = predict(question1, question2, 0.8, model, vocab, verbose=True)

#pair 2

question1 = "Do you enjoy eating the dessert?"

question2 = "Do you like hiking in the desert?"

example2 = predict(question1, question2, 0.8, model, vocab, verbose=True)

#pair 3

question1 = "How are you?"

question2 = "Do you like hiking in the desert?"

example3 = predict(question1, question2, 0.8, model, vocab, verbose=True)

#pair 4

question1 = "How are you?"

question2 = "How are you today?"

example4 = predict(question1, question2, 0.8, model, vocab, verbose=True)The results should be similar to the numbers below:

question1 = "How are you?"

question2 = "Are you fine?"

similarity score = 0.9263276

result = True

question1 = "Do you enjoy eating the dessert?"

question2 = "Do you like hiking in the desert?"

similarity score = 0.7217777

result = False

question1 = "How are you?".lower().split()

question2 = "Do you like hiking in the desert?"

similarity score = 0.6647145

result = False

question1 = "How are you?"

question2 = "How are you today?"

similarity score = 0.8646995

result = TrueWe can see how the model captured the difference between visually similar “Do you enjoy eating the dessert?” and “Do you like hiking in the desert?”. As well, it was able to capture the fact that “How are you?” and “Are you fine?” are conceptually closer compared to the pair “How are you?” (in general) and “How are you today?”. The difference is subtle but often critical for angry people writing about their issues with the software.

The model size should not exceed 40 Mb, which is definitely an improvement. With quantization techniques (which I will describe in further articles), it can be as little as 10 Mb, allowing us to deploy it to mobile or an edge device.

Conclusion

In this article, we tested two possible approaches in Semantic Similarity segmentation.

The first was to use a pre-trained model. It was lightweight and quick in implementation but had some accuracy and model deployment parameters challenges.

Another was building and training own neural net tailored for a specific task. This approach definitely takes more time and effort, especially given that we’ve only scratched the surface. The model architecture will be more complicated in the production environment and data transformation and preparation pipelines.

But the second approach demonstrated better performance, and the model size was much less than in the first approach.

There is another challenge in the second approach, and the cost is higher. First, we need an appropriate dataset that usually requires time and effort. Also, training and tuning require more time and expertise.

I hope this article gave you a nice overview of possible approaches to Semantic Similarity segmentation, and you will be able to make a wise decision when the time comes.

The following articles will talk about model quantization and other fun stuff, so until next time, and may the Force be with You!